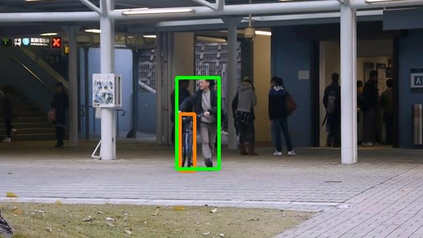

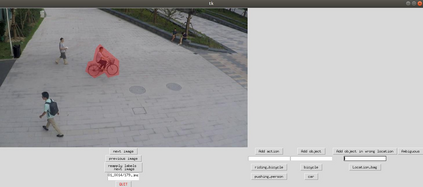

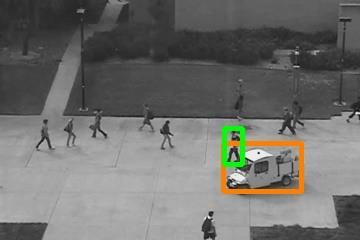

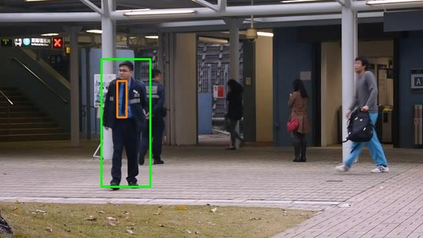

Our objective is to detect anomalies in video while also automatically explaining the reason behind the detector's response. In practice, explainability is crucial for this task because the appropriate response to an anomaly depends on its nature and severity. However, most leading methods, which are based on deep neural networks, are not interpretable: they hide the decision-making process in opaque feature representations. To tackle this problem, we make the following contributions: (1) we show how to build interpretable feature representations suitable for detecting anomalies with state-of-the-art performance; (2) we propose an interpretable probabilistic anomaly detector that can describe the reason behind its response using high-level concepts; (3) we are the first to directly consider object interactions for anomaly detection; and (4) we propose a new task of explaining anomalies and release a large dataset for evaluating methods on this task. Our method performs competitively with the state of the art on public datasets while also providing anomaly explanations based on objects and their interactions.
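The abstract does not specify the form of the probabilistic detector, so the following is only a minimal sketch of the general idea, not the authors' model. It assumes each frame is summarized by interpretable concept scores (counts of detected objects and object interactions) and that each concept follows an independent Gaussian fitted on normal training frames. Under that assumption, the anomaly score is the negative log-likelihood of the frame, and the explanation is the list of concepts that contribute most to it. The concept names and the functions `fit` and `score_and_explain` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

# A frame is described by interpretable concept scores, e.g. object counts or
# interaction counts. All concept names used here are hypothetical.
Frame = Dict[str, float]


@dataclass
class ConceptGaussian:
    mean: float
    var: float


def fit(train: List[Frame], concepts: List[str],
        var_floor: float = 1e-3) -> Dict[str, ConceptGaussian]:
    """Fit one independent Gaussian per concept on normal (training) frames."""
    model = {}
    for c in concepts:
        xs = [f.get(c, 0.0) for f in train]  # missing concept means count 0
        mu = sum(xs) / len(xs)
        # The variance floor stops concepts that never vary in training
        # (e.g. "car" on a pedestrian walkway) from dividing by zero.
        var = sum((x - mu) ** 2 for x in xs) / len(xs) + var_floor
        model[c] = ConceptGaussian(mu, var)
    return model


def score_and_explain(frame: Frame, model: Dict[str, ConceptGaussian],
                      top_k: int = 3) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the frame's anomaly score (negative log-likelihood) and the
    top_k concepts ranked by how much they raise that score."""
    score = 0.0
    excess = {}
    for c, g in model.items():
        x = frame.get(c, 0.0)
        sq = (x - g.mean) ** 2 / (2 * g.var)
        # Negative log-density of x under N(mean, var); with independent
        # concepts, the frame's total score is the sum over concepts.
        score += 0.5 * math.log(2 * math.pi * g.var) + sq
        # Contribution above the best case (x == mean) drives the explanation.
        excess[c] = sq
    ranked = sorted(excess.items(), key=lambda kv: kv[1], reverse=True)
    return score, ranked[:top_k]


# Usage: a walkway where only people appear during training.
concepts = ["person", "car", "bicycle", "person_rides_bicycle"]
train = [{"person": 3}, {"person": 5}, {"person": 4}]
model = fit(train, concepts)

normal_score, _ = score_and_explain({"person": 4}, model)
anomaly_score, reasons = score_and_explain(
    {"person": 4, "bicycle": 1, "person_rides_bicycle": 1}, model, top_k=2)
print([name for name, _ in reasons])  # ['bicycle', 'person_rides_bicycle']
```

The per-concept decomposition is what makes the response explainable here: because the score is a sum of concept terms, the largest terms directly name the objects or interactions that made the frame unlikely. A richer detector (for example, one modelling correlations between concepts) would need a different attribution rule.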
