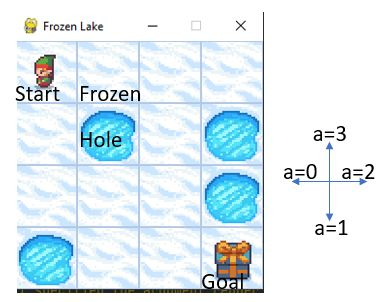

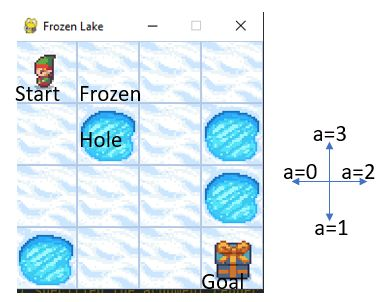

Future- or return-conditioned supervised learning is an emerging paradigm for offline reinforcement learning (RL), where the future outcome (i.e., return) associated with an observed action sequence is used as input to a policy trained to imitate those same actions. While return-conditioning is at the heart of popular algorithms such as decision transformer (DT), these methods tend to perform poorly in highly stochastic environments, where an occasional high return can arise from randomness in the environment rather than from the actions themselves. Such situations can lead to a learned policy that is inconsistent with its conditioning inputs; i.e., using the policy to act in the environment while conditioning on a specific desired return leads to a distribution of real returns that is wildly different from the desired one. In this work, we propose the dichotomy of control (DoC), a future-conditioned supervised learning framework that separates mechanisms within a policy's control (actions) from those beyond a policy's control (environment stochasticity). We achieve this separation by conditioning the policy on a latent variable representation of the future and by designing a mutual information constraint that removes from the latent variable any information associated with randomness in the environment. Theoretically, we show that DoC yields policies that are consistent with their conditioning inputs, ensuring that conditioning a learned policy on a desired high-return future outcome will correctly induce high-return behavior. Empirically, we show that DoC is able to achieve significantly better performance than DT on environments that have highly stochastic rewards and transitions.
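The inconsistency described above can be seen in a very small example. The sketch below is a hypothetical one-step environment (the action names `safe` and `gamble`, the payoffs, and all function names are assumptions made for illustration, not taken from the paper). It fits a tabular return-conditioned policy by counting, then compares the return requested at test time with the return the resulting policy actually earns in expectation. It illustrates the failure mode only; it does not implement DoC.

```python
import random
from collections import Counter, defaultdict

# Hypothetical one-step environment (illustrative assumption):
#   "safe"   -> return 1 with certainty
#   "gamble" -> return 10 with probability 0.1, otherwise -5
TRUE_MEAN = {"safe": 1.0, "gamble": 0.1 * 10.0 + 0.9 * -5.0}

def sample_episode(rng):
    """Behavior policy picks an action uniformly; the environment adds the luck."""
    action = rng.choice(["safe", "gamble"])
    if action == "safe":
        ret = 1.0
    else:
        ret = 10.0 if rng.random() < 0.1 else -5.0
    return action, ret

def fit_return_conditioned_policy(dataset):
    """Tabular supervised learning: estimate P(action | return) by counting."""
    counts = defaultdict(Counter)
    for action, ret in dataset:
        counts[ret][action] += 1
    return {
        ret: {a: n / sum(c.values()) for a, n in c.items()}
        for ret, c in counts.items()
    }

def expected_return(action_probs):
    """True expected return of acting according to the given action distribution."""
    return sum(p * TRUE_MEAN[a] for a, p in action_probs.items())

rng = random.Random(0)
dataset = [sample_episode(rng) for _ in range(10_000)]
policy = fit_return_conditioned_policy(dataset)

target = 10.0                       # the return we ask for at test time
achieved = expected_return(policy[target])
print("P(action | return=10):", policy[target])
print("requested return:", target, "expected achieved return:", achieved)
```

In this toy setting only `gamble` episodes ever reach a return of 10, so conditioning on that return makes the policy always gamble, and its true expected return falls below what `safe` would earn. The high return in the data came from environment randomness, which is the kind of information the abstract proposes to exclude from the conditioning variable.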
