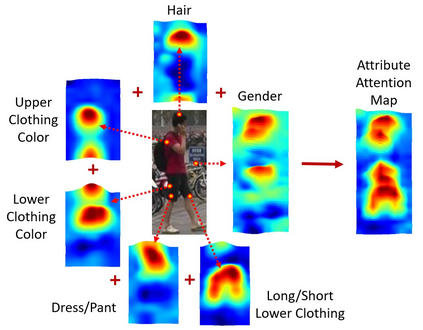

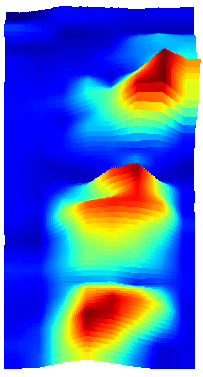

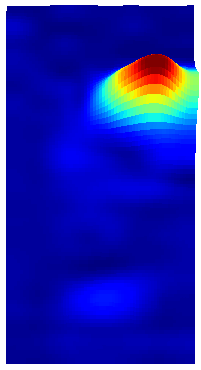

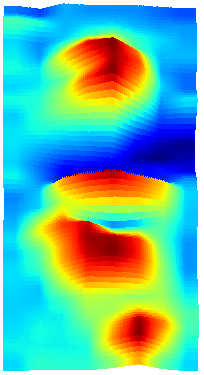

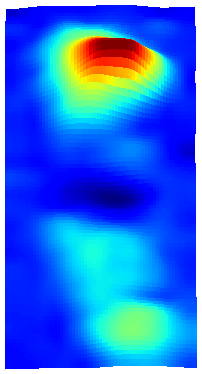

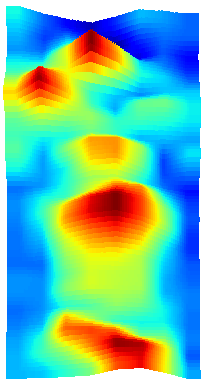

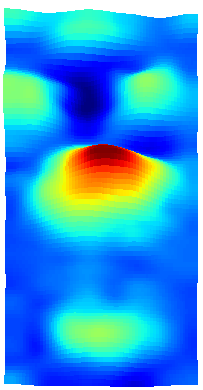

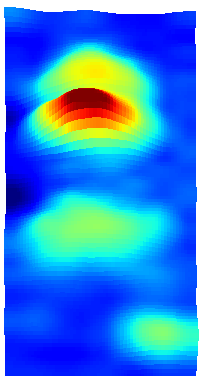

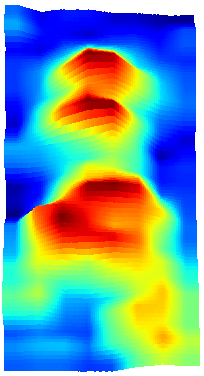

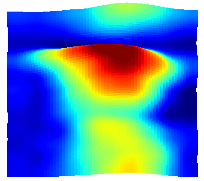

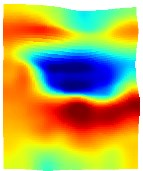

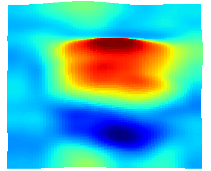

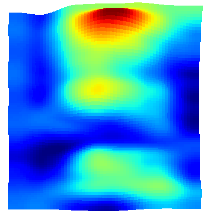

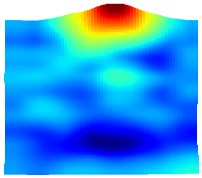

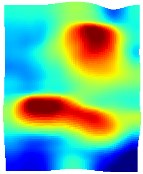

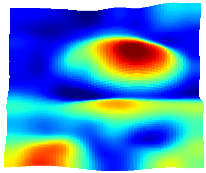

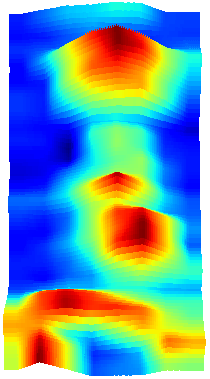

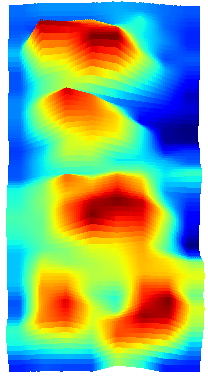

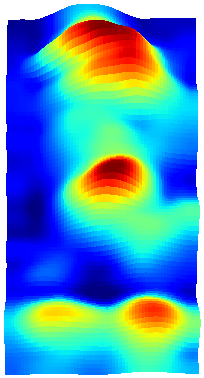

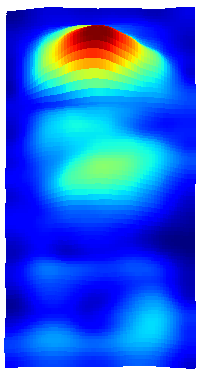

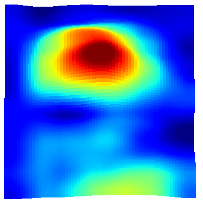

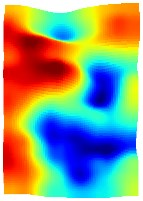

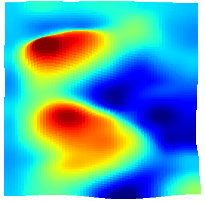

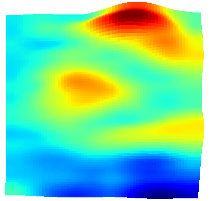

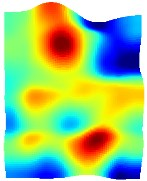

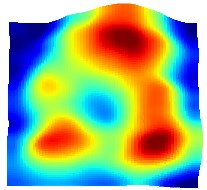

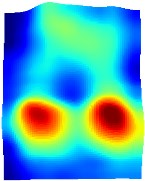

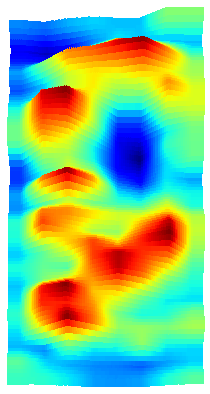

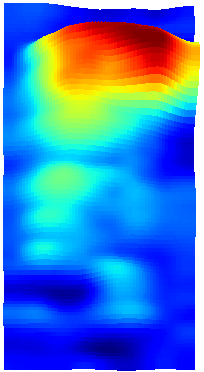

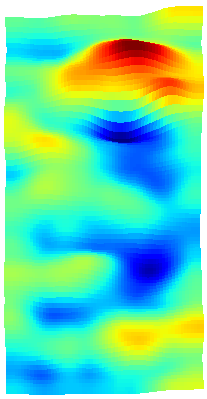

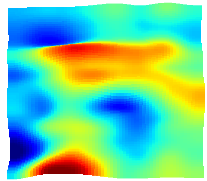

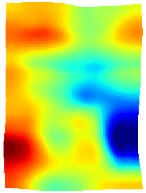

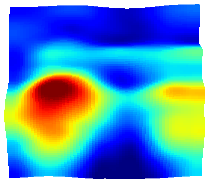

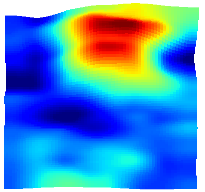

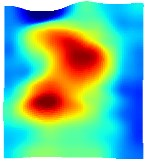

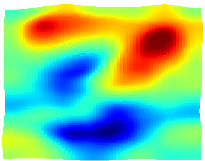

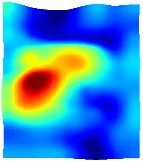

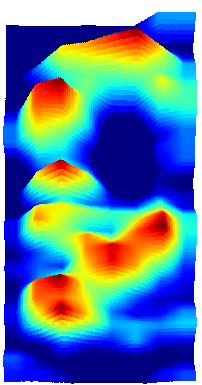

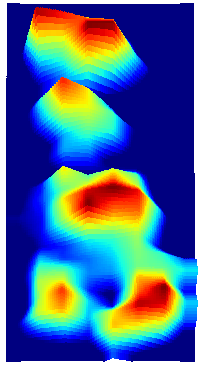

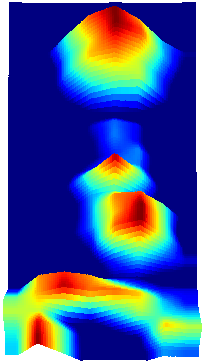

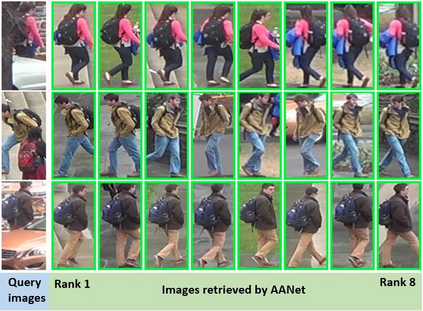

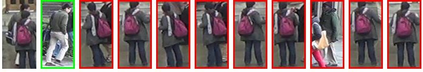

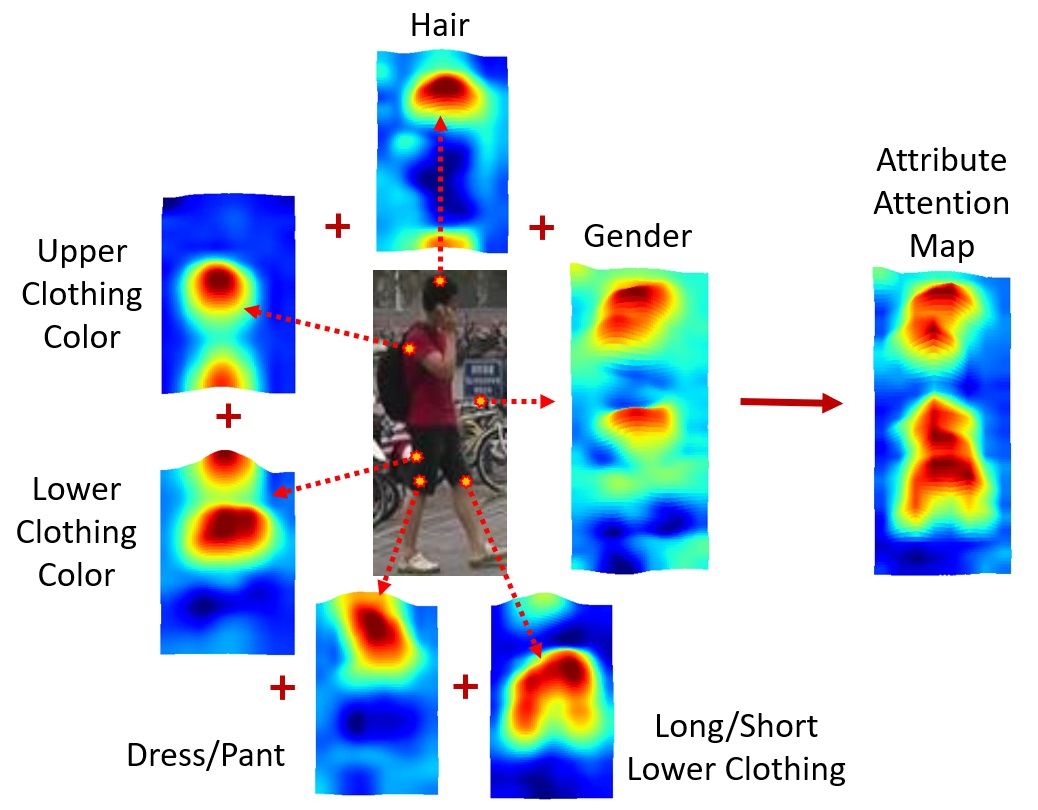

This paper proposes the Attribute Attention Network (AANet), a new architecture that integrates person attributes and attribute attention maps into a classification framework to solve the person re-identification (re-ID) problem. Many person re-ID models employ semantic cues such as body parts or human pose to improve re-ID performance. Attribute information, however, is often not utilized. The proposed AANet builds on a baseline model that uses body parts and integrates key attribute information in a unified learning framework. AANet consists of a global person ID task, a part detection task, and a crucial attribute detection task. By estimating the class responses of individual attributes and combining them to form the attribute attention map (AAM), a highly discriminative representation is constructed. The proposed AANet outperforms the best state-of-the-art method using ResNet-50 (arXiv:1711.09349v3 [cs.CV]) by 3.36% in mAP and 3.12% in Rank-1 accuracy on the DukeMTMC-reID dataset. On the Market1501 dataset, AANet achieves 92.38% mAP and 95.10% Rank-1 accuracy with re-ranking, outperforming another state-of-the-art method that uses ResNet-152 (arXiv:1804.00216v1 [cs.CV]) by 1.42% in mAP and 0.47% in Rank-1 accuracy. In addition, AANet can perform person attribute prediction (e.g., gender, hair length, clothing length) and localize the attributes in the query image.
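The idea of combining per-attribute class responses into a single attention map can be illustrated with a small sketch. The abstract does not say how the responses are computed or merged, so everything here is an assumption made for illustration: the function names `class_activation_map` and `attribute_attention_map` are hypothetical, each attribute's response is taken to be a weighted sum of feature maps (in the style of class activation mapping), and the responses are merged with an element-wise maximum followed by min-max scaling. It is not the authors' implementation.

```python
from typing import List

Map2D = List[List[float]]


def class_activation_map(features: List[Map2D], weights: List[float]) -> Map2D:
    """Weighted sum of C feature maps (each H x W) for one attribute.

    Assumption: `weights` holds that attribute's classifier weights,
    one per feature channel.
    """
    height, width = len(features[0]), len(features[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, w in zip(features, weights):
        for i in range(height):
            for j in range(width):
                cam[i][j] += w * fmap[i][j]
    return cam


def attribute_attention_map(cams: List[Map2D]) -> Map2D:
    """Merge per-attribute maps into one map scaled to [0, 1].

    Assumption: element-wise max is used as the merge rule; the
    abstract only says the responses are "combined".
    """
    height, width = len(cams[0]), len(cams[0][0])
    combined = [
        [max(cam[i][j] for cam in cams) for j in range(width)]
        for i in range(height)
    ]
    lo = min(min(row) for row in combined)
    hi = max(max(row) for row in combined)
    span = hi - lo
    if span == 0:
        # A flat response carries no spatial information.
        return [[0.0] * width for _ in range(height)]
    return [[(v - lo) / span for v in row] for row in combined]


# Toy input: two 2x2 feature channels and two attributes.
features = [
    [[1.0, 0.0], [0.0, 0.0]],  # channel 0 fires top-left
    [[0.0, 0.0], [0.0, 1.0]],  # channel 1 fires bottom-right
]
cam_a = class_activation_map(features, [2.0, 0.0])  # attribute A
cam_b = class_activation_map(features, [0.0, 1.0])  # attribute B
aam = attribute_attention_map([cam_a, cam_b])
```

In this toy case the merged map highlights both locations, with the stronger attribute response dominating after scaling. A real model would compute the feature maps with a convolutional backbone and might use a different merge rule or thresholding step.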
