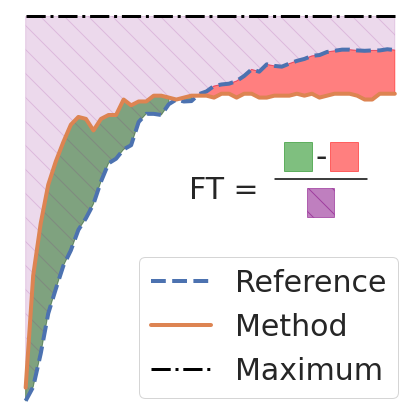

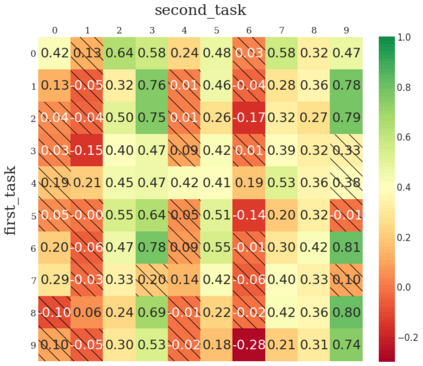

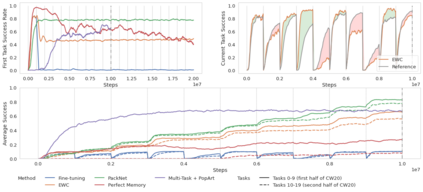

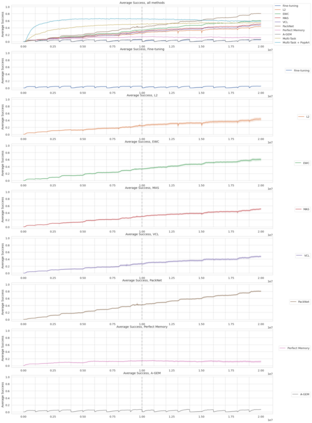

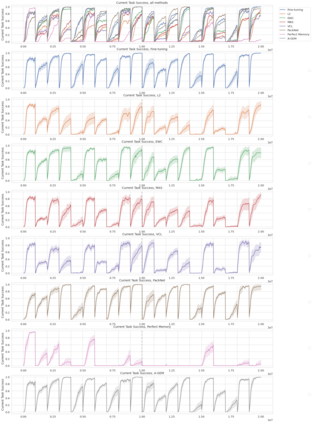

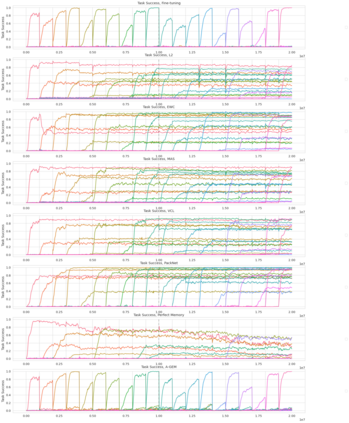

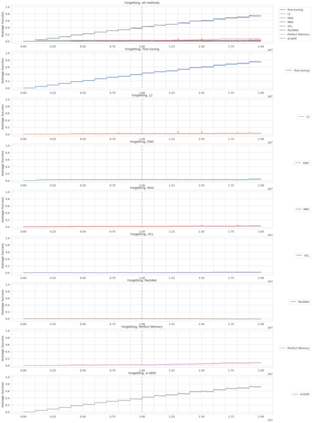

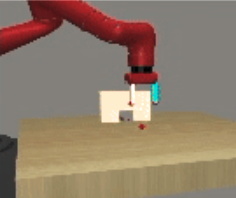

Continual learning (CL), the ability to learn continuously while building on previously acquired knowledge, is a natural requirement for long-lived autonomous reinforcement learning (RL) agents. Building such agents requires balancing opposing desiderata: constraints on capacity and compute, the ability to avoid catastrophic forgetting, and positive transfer to new tasks. Understanding the right trade-off is conceptually and computationally challenging, which we argue has led the community to focus too heavily on catastrophic forgetting. In response, we advocate prioritizing forward transfer and propose Continual World, a benchmark of realistic and meaningfully diverse robotic tasks built on top of Meta-World as a testbed. Following an in-depth empirical evaluation of existing CL methods, we pinpoint their limitations and highlight unique algorithmic challenges in the RL setting. Our benchmark aims to provide a meaningful and computationally inexpensive challenge for the community and thus help better understand the performance of existing and future solutions. Information about the benchmark, including the open-source code, is available at https://sites.google.com/view/continualworld.
