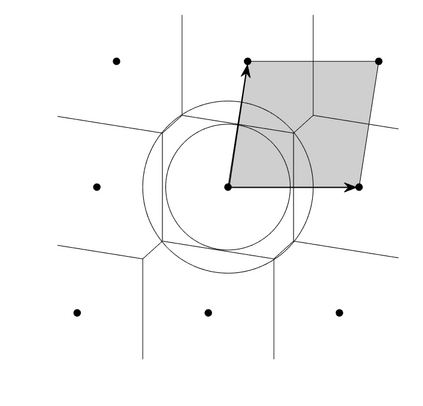

We characterize the complexity of the lattice decoding problem from a neural network perspective. The notion of a Voronoi-reduced basis is introduced to restrict the space of solutions to a binary set. On the one hand, this problem is shown to be equivalent to computing a continuous piecewise linear (CPWL) function restricted to the fundamental parallelotope. On the other hand, it is known that any function computed by a ReLU feed-forward neural network is CPWL. As a result, we count the number of affine pieces in the CPWL decoding function to characterize the complexity of the decoding problem. This number is exponential in the space dimension $n$, which implies shallow neural networks of exponential size. For structured lattices, we show that folding, a technique equivalent to using a deep neural network, makes it possible to reduce this complexity from exponential in $n$ to polynomial in $n$. For unstructured MIMO lattices, in contrast to dense lattices, many pieces of the CPWL decoding function can be neglected for quasi-optimal decoding on the Gaussian channel. This makes the decoding problem easier, and it explains why shallow neural networks of reasonable size are more efficient with this category of lattices (in low to moderate dimensions).
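The link between folding, depth, and the number of affine pieces can be illustrated with a toy one-dimensional example. The sketch below is not the lattice construction from the paper. It is a generic illustration under stated assumptions: the function names `tent`, `deep_fold`, and `count_affine_pieces` are hypothetical, and the fold is the standard tent map written with two ReLU units. Composing the fold `depth` times uses only `2 * depth` ReLU units but yields `2 ** depth` affine pieces on $[0, 1]$, whereas a one-hidden-layer ReLU network on the real line with $N$ units has at most $N + 1$ pieces.

```python
def relu(x):
    return max(0.0, x)


def tent(x):
    # One "fold" built from two ReLU units (an illustrative choice):
    # equals 2x on [0, 0.5] and 2 - 2x on [0.5, 1].
    return 2.0 * relu(x) - 4.0 * relu(x - 0.5)


def deep_fold(x, depth):
    # A deep network sketch: apply the same two-unit fold `depth` times.
    for _ in range(depth):
        x = tent(x)
    return x


def count_affine_pieces(f, num_cells=1024):
    # Count affine pieces of f on [0, 1] by detecting slope changes between
    # neighbouring cells of a dyadic grid. This assumes every breakpoint of f
    # lies on the grid, which holds for deep_fold when 2 ** depth <= num_cells.
    h = 1.0 / num_cells
    slopes = [(f((i + 1) * h) - f(i * h)) / h for i in range(num_cells)]
    pieces = 1
    for left, right in zip(slopes, slopes[1:]):
        if abs(left - right) > 1e-6:
            pieces += 1
    return pieces


if __name__ == "__main__":
    for depth in range(1, 7):
        pieces = count_affine_pieces(lambda x: deep_fold(x, depth))
        print(f"depth={depth}  relu_units={2 * depth}  affine_pieces={pieces}")
```

In this toy setting the piece count doubles with each added layer while the number of units grows linearly, which mirrors in one dimension why a deep (folded) representation can be far smaller than a shallow one for the same CPWL function.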
