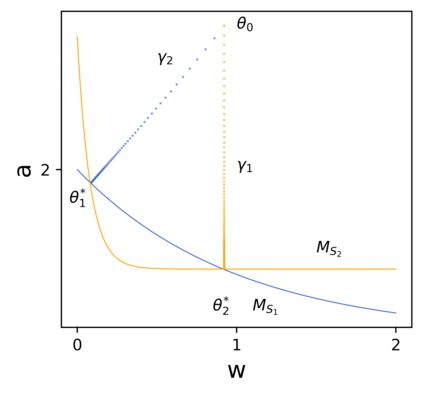

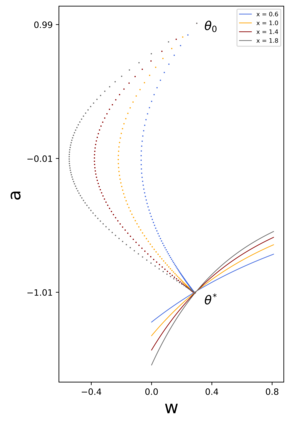

In recent years, understanding the implicit regularization of neural networks (NNs) has become a central task of deep learning theory. However, implicit regularization is itself neither completely defined nor well understood. In this work, we attempt to mathematically define and study implicit regularization. Importantly, we explore the limitations of a common approach: characterizing implicit regularization by data-independent functions. We propose two dynamical mechanisms, the Two-point and One-point Overlapping mechanisms, based on which we provide two recipes for producing classes of one-hidden-neuron NNs whose implicit regularization provably cannot be fully characterized by a certain type of, or by any, data-independent function. Our results signify the profound data-dependency of implicit regularization in general, inspiring us to study the data-dependency of NN implicit regularization in detail in the future.
