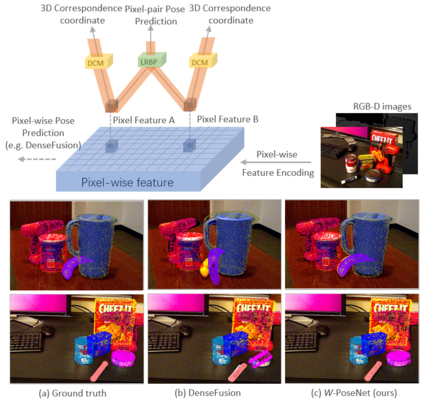

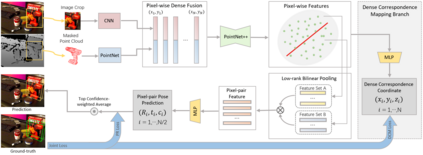

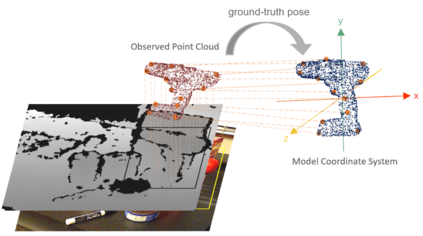

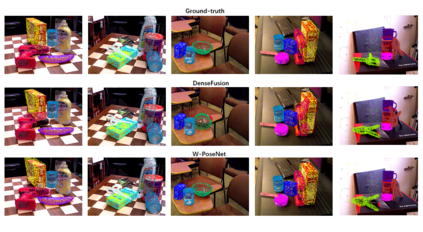

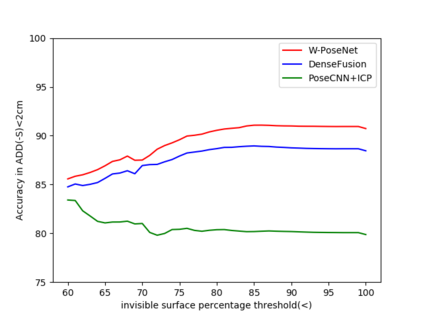

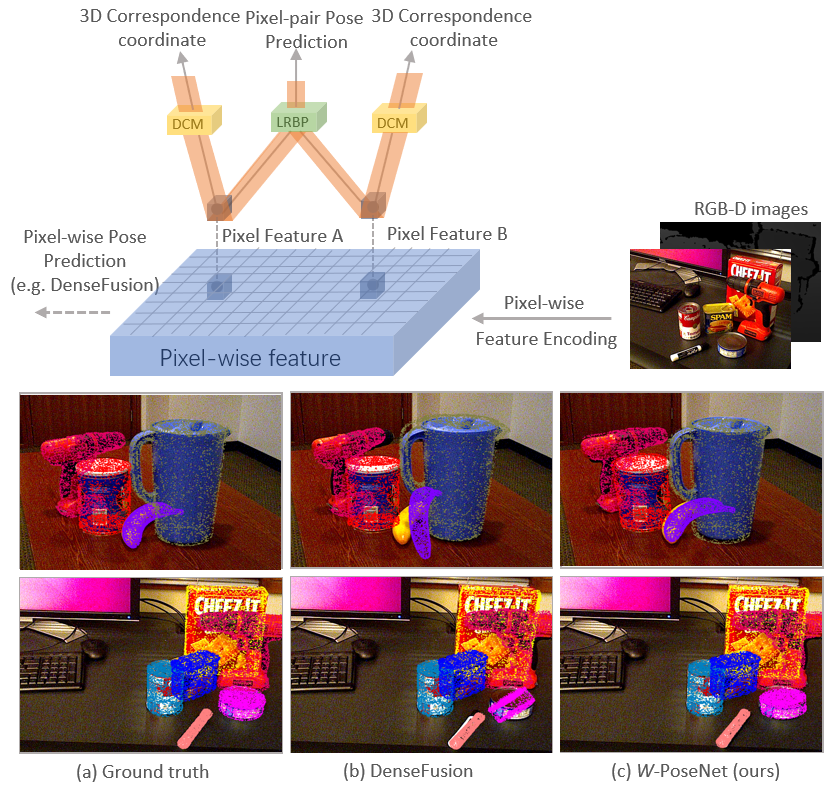

6D pose estimation is non-trivial: a method must cope with intrinsic appearance and shape variation and with severe inter-object occlusion, and the task becomes harder still under extrinsic factors such as large illumination changes and low-quality data acquired in uncontrolled environments. This paper introduces a novel pose estimation algorithm, W-PoseNet, which densely regresses from input data to both the 6D pose and 3D coordinates in model space. In other words, the local features learned for pose regression in our deep network are regularized by an auxiliary task that explicitly learns a pixel-wise correspondence mapping onto pose-sensitive 3D coordinates. Moreover, a sparse pairwise combination of pixel-wise features and soft voting over pixel-pair pose predictions are designed to improve robustness to inconsistent and sparse local features. Experimental results on the popular YCB-Video and LineMOD benchmarks show that the proposed W-PoseNet consistently outperforms state-of-the-art algorithms.
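To make the idea of soft voting over many per-pair pose predictions concrete, here is a minimal sketch in NumPy. It is not the W-PoseNet implementation: the abstract does not specify the voting rule, so the function name `soft_vote_pose`, the softmax weighting, the `temperature` parameter, and the quaternion-plus-translation pose representation are all assumptions made for illustration. Rotations are fused with the standard eigenvector-based quaternion average, which is insensitive to the sign ambiguity between `q` and `-q`.

```python
import numpy as np


def soft_vote_pose(quats, trans, scores, temperature=1.0):
    """Fuse N candidate poses by confidence-weighted (soft) voting.

    Hypothetical helper, not taken from the paper.
    quats:  (N, 4) unit quaternions in (w, x, y, z) order, one per candidate
    trans:  (N, 3) translations, one per candidate
    scores: (N,)   raw confidence scores (higher means more trusted)
    """
    quats = np.asarray(quats, dtype=float)
    trans = np.asarray(trans, dtype=float)
    scores = np.asarray(scores, dtype=float)

    # Softmax turns raw scores into voting weights that sum to 1.
    # Subtracting the maximum keeps the exponentials numerically stable.
    z = (scores - scores.max()) / temperature
    weights = np.exp(z)
    weights /= weights.sum()

    # Translation: plain weighted mean of the candidates.
    t = weights @ trans

    # Rotation: principal eigenvector of sum_i w_i * q_i q_i^T.
    # The outer product is unchanged when q_i is replaced by -q_i.
    m = (quats * weights[:, None]).T @ quats
    _, eigvecs = np.linalg.eigh(m)  # eigenvalues come back in ascending order
    q = eigvecs[:, -1]

    # Pick a canonical sign so the scalar part is non-negative.
    if q[0] < 0:
        q = -q
    return q, t
```

With equal scores this reduces to a plain average of the candidates. A lower `temperature` concentrates the vote on the most confident predictions, which is one simple way to suppress candidates derived from inconsistent local features.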
