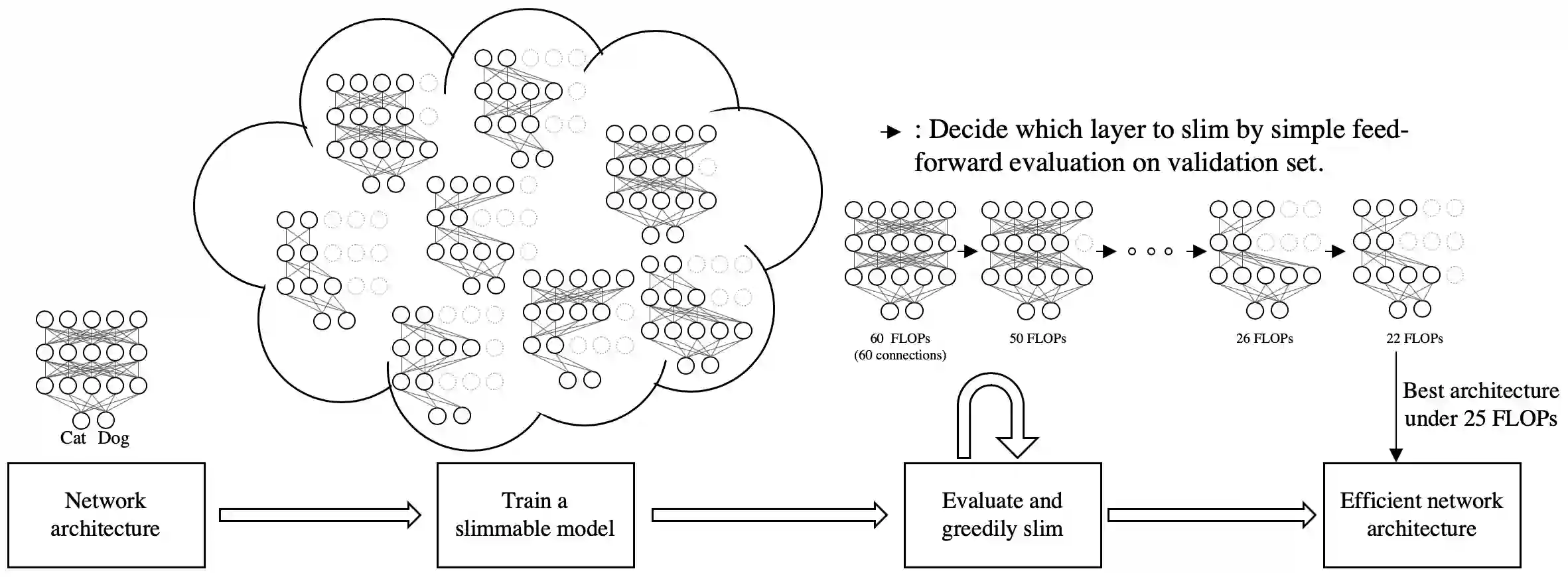

We study how to set channel numbers in a neural network to achieve better accuracy under constrained resources (e.g., FLOPs, latency, memory footprint or model size). A simple and one-shot solution, named AutoSlim, is presented. Instead of training many network samples and searching with reinforcement learning, we train a single slimmable network to approximate the network accuracy of different channel configurations. We then iteratively evaluate the trained slimmable model and greedily slim the layer with minimal accuracy drop. In this single pass, we can obtain the optimized channel configurations under different resource constraints. We present experiments with MobileNet v1, MobileNet v2, ResNet-50 and RL-searched MNasNet on ImageNet classification. We show significant improvements over their default channel configurations. We also achieve better accuracy than recent channel pruning methods and neural architecture search methods. Notably, by setting optimized channel numbers, our AutoSlim-MobileNet-v2 at 305M FLOPs achieves 74.2% top-1 accuracy, 2.4% better than default MobileNet-v2 (301M FLOPs), and even 0.2% better than RL-searched MNasNet (317M FLOPs). Our AutoSlim-ResNet-50 at 570M FLOPs, without depthwise convolutions, achieves 1.3% better accuracy than MobileNet-v1 (569M FLOPs). Code and models will be available at: https://github.com/JiahuiYu/slimmable_networks
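
The greedy slimming loop described above can be illustrated with a small Python sketch. This is a hypothetical toy, not the authors' implementation: `toy_accuracy` is an assumed closed-form stand-in for evaluating the trained slimmable network at a given channel configuration, `conv_flops` counts multiply-adds for a made-up chain of four 3x3 convolutions, and the shrink step (10% of each layer's original width) and floor (10% of original width) are assumed granularities. At every step the sketch tries slimming each layer once, keeps the candidate with the highest score (that is, the smallest accuracy drop), and records the result, so one pass yields a whole trajectory of configurations.

```python
import math
from typing import Callable, List, Sequence, Tuple

Config = Tuple[int, ...]

# Hypothetical full-width network: output channels of four stacked conv layers.
FULL: Config = (32, 64, 128, 256)


def conv_flops(config: Sequence[int], in_channels: int = 3,
               spatial: Sequence[int] = (112 * 112, 56 * 56, 28 * 28, 14 * 14),
               kernel: int = 3) -> int:
    """Multiply-adds of a toy chain of k x k convolutions (hypothetical cost model)."""
    flops, prev = 0, in_channels
    for c_out, hw in zip(config, spatial):
        flops += prev * c_out * kernel * kernel * hw
        prev = c_out  # next layer consumes this layer's output channels
    return flops


def toy_accuracy(config: Sequence[int],
                 importance: Sequence[float] = (1.0, 2.0, 0.5, 3.0)) -> float:
    """Hypothetical stand-in for evaluating the slimmable network at this width."""
    return sum(w * math.log(c) for w, c in zip(importance, config))


def greedy_slim(full: Config,
                evaluate: Callable[[Sequence[int]], float],
                flops_fn: Callable[[Sequence[int]], int],
                step_fraction: float = 0.1,
                min_fraction: float = 0.1) -> List[Tuple[int, Config]]:
    """Repeatedly shrink the single layer whose slimming hurts the score least.

    Returns the (flops, config) trajectory, starting from the full network.
    """
    steps = [max(1, int(c * step_fraction)) for c in full]
    floors = [max(1, int(c * min_fraction)) for c in full]
    config = list(full)
    trajectory = [(flops_fn(config), tuple(config))]
    while True:
        best = None  # (score, candidate config)
        for i in range(len(config)):
            if config[i] - steps[i] < floors[i]:
                continue  # this layer cannot be slimmed further
            candidate = config.copy()
            candidate[i] -= steps[i]
            score = evaluate(candidate)
            if best is None or score > best[0]:
                best = (score, candidate)
        if best is None:
            break  # every layer has reached its floor
        config = best[1]
        trajectory.append((flops_fn(config), tuple(config)))
    return trajectory


def pick_for_budget(trajectory: List[Tuple[int, Config]], budget: int) -> Config:
    """Largest recorded configuration within the FLOPs budget (FLOPs fall monotonically)."""
    for flops, config in trajectory:
        if flops <= budget:
            return config
    raise ValueError("budget is below the smallest reachable configuration")


if __name__ == "__main__":
    traj = greedy_slim(FULL, toy_accuracy, conv_flops)
    for budget in (conv_flops(FULL) // 2, conv_flops(FULL) // 4):
        print(budget, pick_for_budget(traj, budget))
```

Because every step removes channels from exactly one layer, FLOPs decrease strictly along the trajectory, so configurations for several budgets can be read off the same pass with `pick_for_budget`. In a real setting the score would come from running the slimmable network at each candidate width rather than from a closed-form proxy.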
