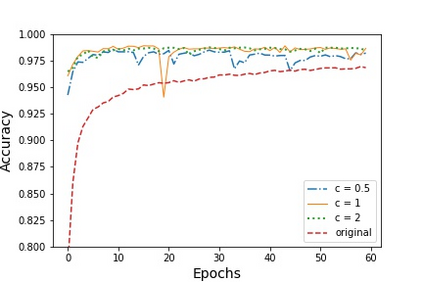

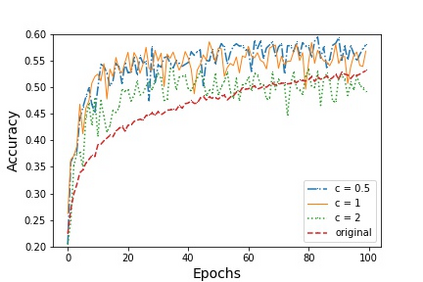

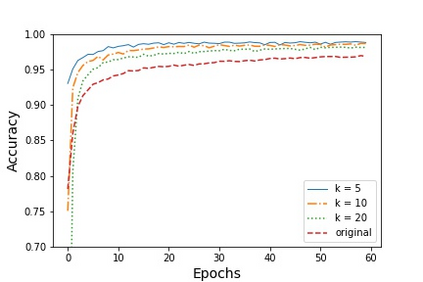

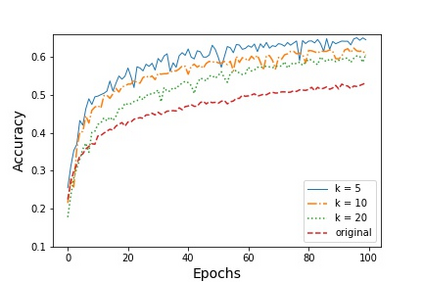

This paper proposes an adjustment to the original differentially private stochastic gradient descent (DPSGD) algorithm for deep learning models. The motivation is that, despite the vital need for privacy, almost no state-of-the-art machine learning algorithm currently employs existing privacy-protecting components, because doing so seriously compromises utility. The idea in this study is natural and interpretable, and it improves utility relative to the state of the art. The proposed technique is also simple, which makes it better suited to real-world and especially commercial applications. The intuition is to trim and balance out wild individual discrepancies for privacy reasons while preserving relative individual differences for the sake of performance. The same idea can also be applied to recurrent neural networks (RNNs) to address the exploding gradient problem. The algorithm is applied to a classification task on the benchmark datasets MNIST and CIFAR-10, and the utility measure is calculated. The results outperform those of the original work.
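
For context, the following is a minimal sketch of one update step of the baseline DPSGD algorithm that the paper sets out to adjust: each example's gradient is clipped to a norm bound, the clipped gradients are summed, Gaussian noise is added, and the result is averaged. The abstract does not specify the proposed adjustment, so this sketch does not attempt to reproduce it. The function names (`clip_gradient`, `dpsgd_step`) and parameters (`clip_norm`, `noise_multiplier`) are illustrative assumptions, not taken from the paper.

```python
import math
import random


def clip_gradient(grad, clip_norm):
    """Rescale one example's gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    # The scale is 1 when the gradient is already within the bound.
    scale = 1.0 / max(1.0, norm / clip_norm)
    return [g * scale for g in grad]


def dpsgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One baseline DPSGD update (hypothetical helper, not the paper's variant)."""
    batch_size = len(per_example_grads)
    # 1. Clip every per-example gradient independently.
    clipped = [clip_gradient(g, clip_norm) for g in per_example_grads]
    # 2. Sum the clipped gradients coordinate by coordinate.
    summed = [sum(column) for column in zip(*clipped)]
    # 3. Add Gaussian noise whose scale is tied to the clipping bound.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4. Average over the batch and take a gradient descent step.
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[3.0, 4.0], [0.3, 0.4]]  # L2 norms 5.0 and 0.5
    new_params = dpsgd_step([0.0, 0.0], grads, lr=1.0, clip_norm=1.0,
                            noise_multiplier=1.1, rng=rng)
    print(new_params)
```

The clipping step is the same norm-based operation commonly used against exploding gradients in RNNs, which is presumably why the abstract mentions that setting. Note that plain clipping maps every gradient above the bound to the same norm, so differences in magnitude among large gradients are lost.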
