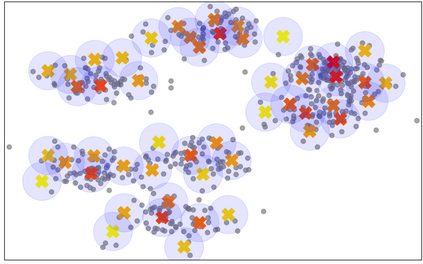

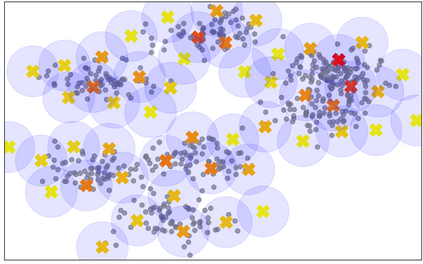

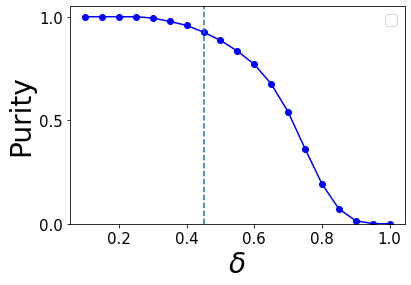

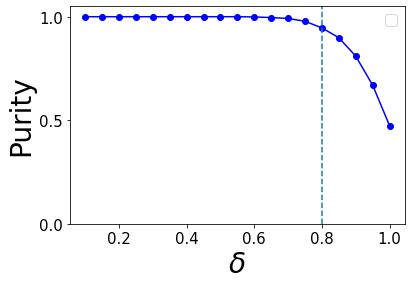

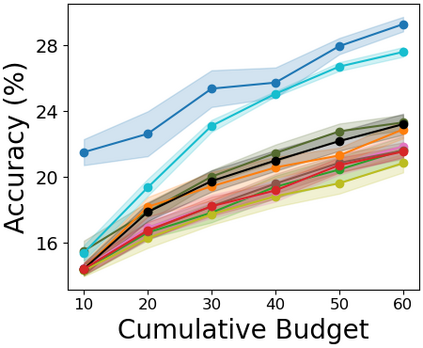

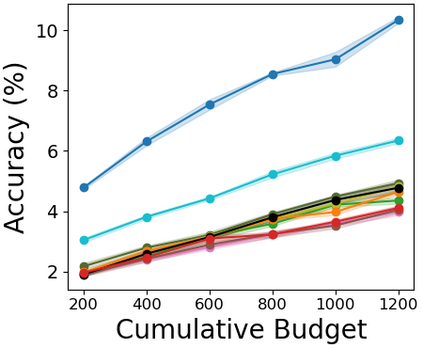

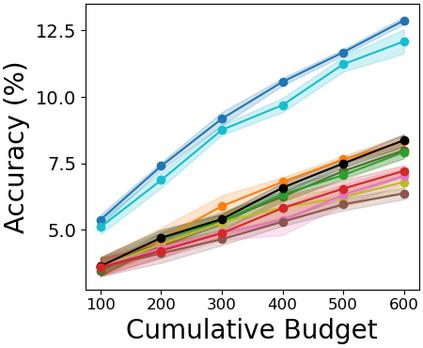

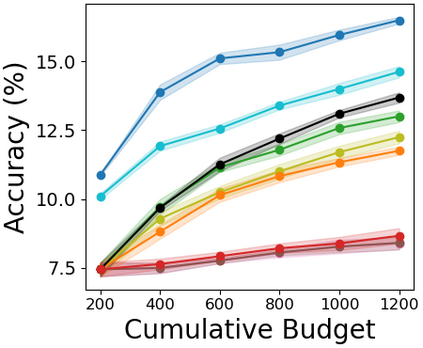

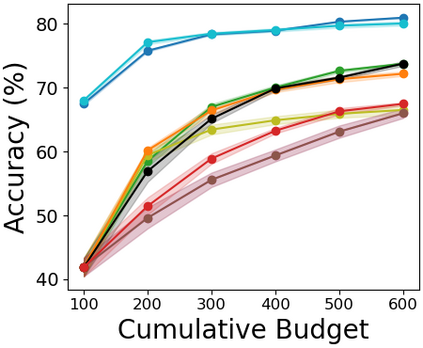

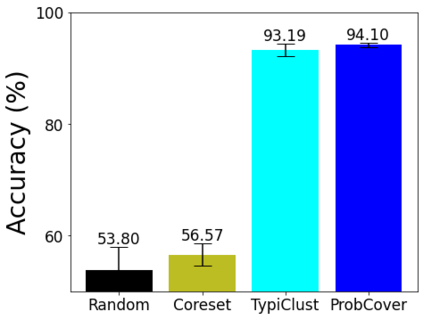

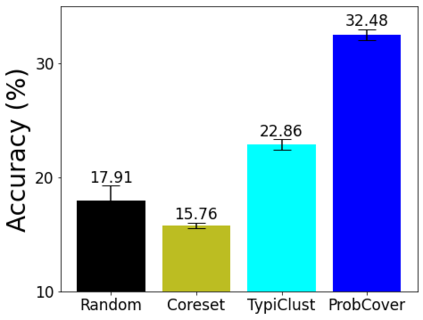

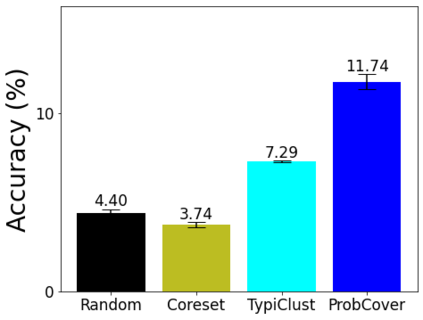

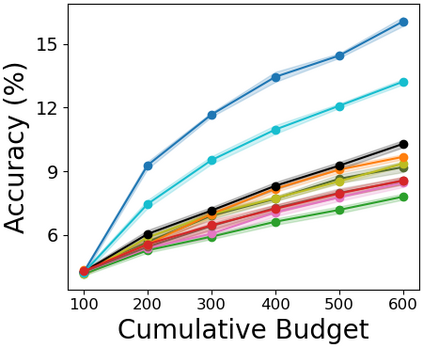

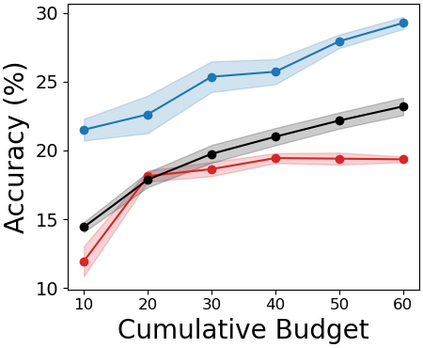

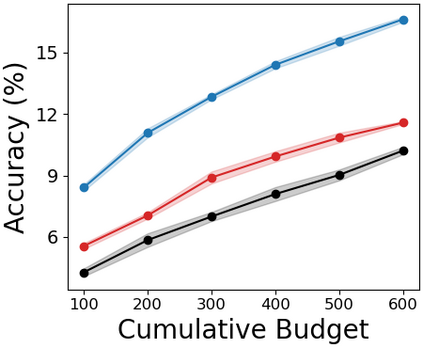

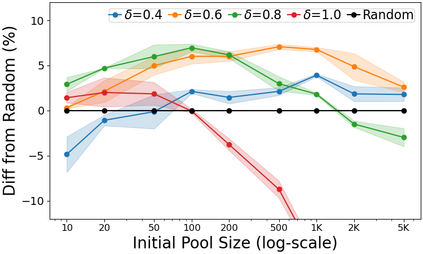

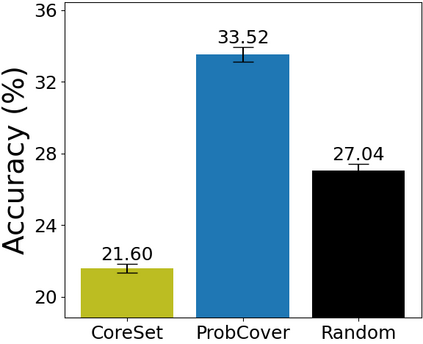

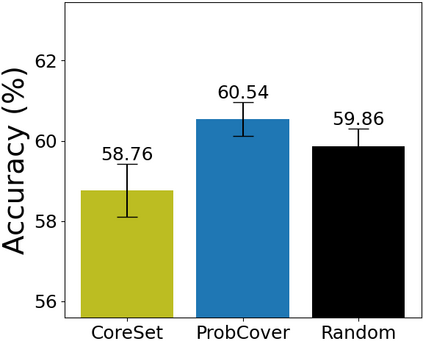

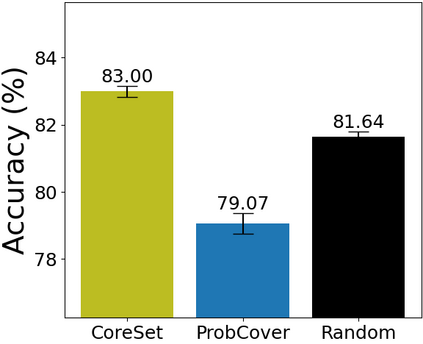

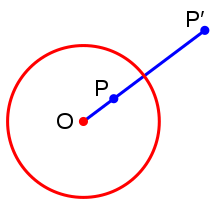

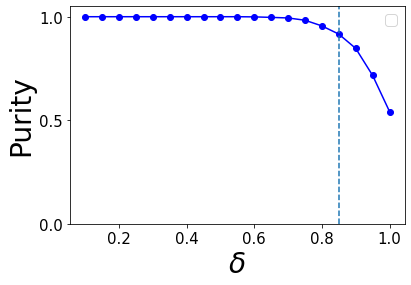

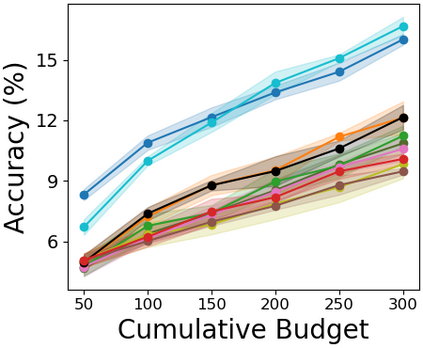

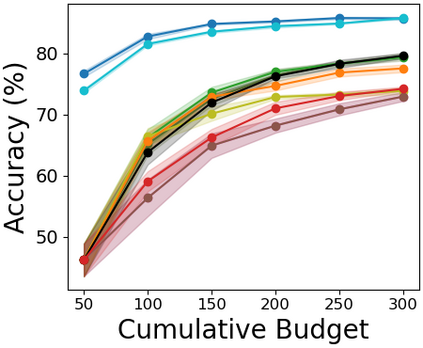

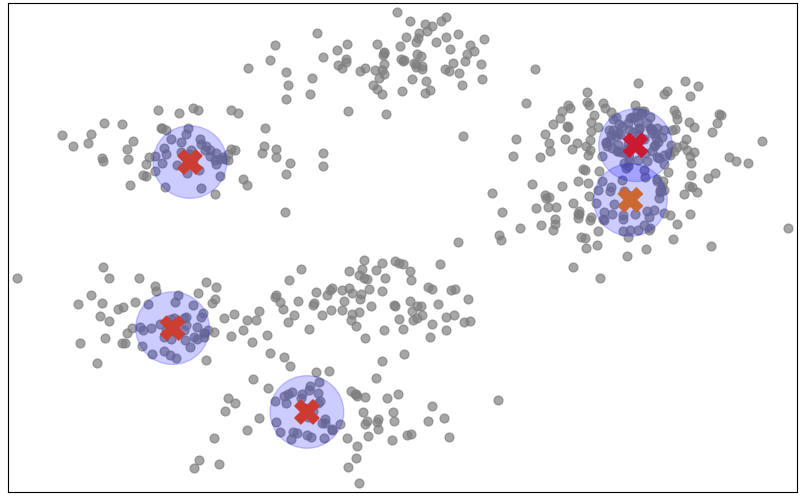

Deep active learning aims to reduce the annotation cost for deep neural networks, which are notoriously data-hungry. Until recently, deep active learning methods struggled in the low-budget regime, where only a small number of samples are annotated. The situation has been alleviated by recent advances in self-supervised representation learning, which endow the geometry of the data representation with rich information about the points. Taking advantage of this progress, we study the problem of selecting a subset of points for annotation through a "covering" lens, proposing ProbCover, a new active learning algorithm for the low-budget regime that seeks to maximize Probability Coverage. We describe a dual view of our formulation, from which one can derive strategies suitable for the high-budget regime of active learning, related to existing methods such as Coreset. We conclude with extensive experiments evaluating ProbCover in the low-budget regime, showing that our principled active learning strategy improves the state of the art in this regime on several image recognition benchmarks. The method is especially beneficial in semi-supervised settings, allowing state-of-the-art semi-supervised methods to achieve high accuracy with only a few labels.
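
To make the "covering" idea concrete, here is a minimal sketch, not the authors' implementation. It assumes that each point is represented by a precomputed self-supervised embedding, that closeness is measured by Euclidean distance, and that a point counts as covered when it lies within a user-chosen radius `delta` of a selected point. Under those assumptions, maximizing the empirical Probability Coverage with a fixed budget is a maximum-coverage problem, which the sketch approximates greedily: at each step it picks the point whose `delta`-ball contains the most not-yet-covered points. The helper names (`ball_neighbors`, `coverage_fraction`, `greedy_probability_cover`) are hypothetical.

```python
import math
from typing import List, Sequence, Set

Point = Sequence[float]


def ball_neighbors(points: Sequence[Point], delta: float) -> List[Set[int]]:
    """For every point i, the indices of points within Euclidean distance delta (i included)."""
    n = len(points)
    return [
        {j for j in range(n) if math.dist(points[i], points[j]) <= delta}
        for i in range(n)
    ]


def coverage_fraction(points: Sequence[Point], delta: float, chosen: Sequence[int]) -> float:
    """Empirical coverage: fraction of points inside the delta-ball of at least one chosen point."""
    neighbors = ball_neighbors(points, delta)
    covered: Set[int] = set()
    for i in chosen:
        covered |= neighbors[i]
    return len(covered) / len(points)


def greedy_probability_cover(
    points: Sequence[Point],
    delta: float,
    budget: int,
    labeled: Sequence[int] = (),
) -> List[int]:
    """Hypothetical greedy max-coverage selection over delta-balls (an illustrative sketch only)."""
    neighbors = ball_neighbors(points, delta)
    labeled_set = set(labeled)
    # Points already covered by existing labels add no further gain.
    covered: Set[int] = set()
    for i in labeled_set:
        covered |= neighbors[i]
    selected: List[int] = []
    for _ in range(budget):
        best, best_gain = None, -1
        for i in range(len(points)):
            if i in labeled_set or i in selected:
                continue
            gain = len(neighbors[i] - covered)  # newly covered points if i is queried
            if gain > best_gain:  # ties go to the lowest index
                best, best_gain = i, gain
        if best is None:  # every point has already been selected or labeled
            break
        selected.append(best)
        covered |= neighbors[best]
    return selected


if __name__ == "__main__":
    # Toy 2-D "embeddings": a dense cluster of 4, a cluster of 3, and one outlier.
    embeddings = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1), (5, 5), (5.1, 5), (5, 5.1), (10, 0)]
    picked = greedy_probability_cover(embeddings, delta=0.5, budget=2)
    print(picked, coverage_fraction(embeddings, 0.5, picked))  # [0, 4] 0.875
```

With a budget of two, the sketch queries one representative from each dense cluster before touching the outlier, which matches the intuition that a low budget is best spent on typical, well-covered regions. As we read the dual view mentioned above, one would instead fix the budget and minimize the radius needed to cover every point, which is the k-center objective associated with Coreset.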

翻译:深层积极学习旨在降低深神经网络的批注成本,这些网络是臭名昭著的数据渴望。直到最近,在低预算制度下挣扎的深层积极学习方法,其中只有少量的样本有附加说明。最近自我监督的代议制学习方法的进展缓解了这一局面,这种方法使数据代表的几何结构与关于这些点的丰富信息有了丰富的信息。利用这一进展,我们研究了通过“覆盖”镜头选择子子子集以进行批注的问题,提出了ProbCover -- -- 一种新的低预算制度的积极学习算法,寻求最大限度地扩大概率覆盖率。我们描述了一种双管齐下的方法来查看我们的提法,从中可以产生适合高预算的积极学习制度的战略,与核心等现有方法相关。我们以广泛的实验结束,对低预算制度中ProbCover系统ProbCover系统进行评估。我们通过几种图像识别基准表明,我们的原则性积极学习战略改善了低预算制度中的状态。这种方法在半超强的环境下特别有益,允许使用州级半监督的半监督方法实现高精确度的标签。