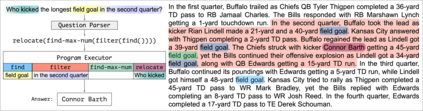

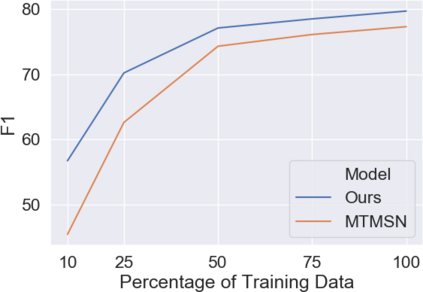

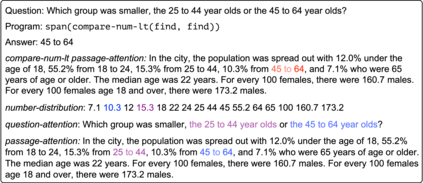

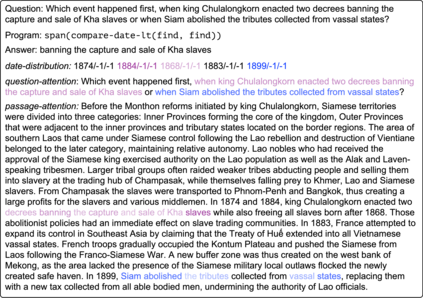

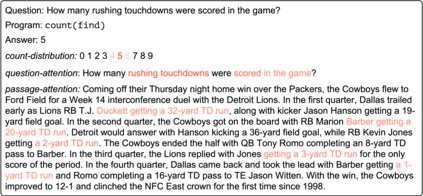

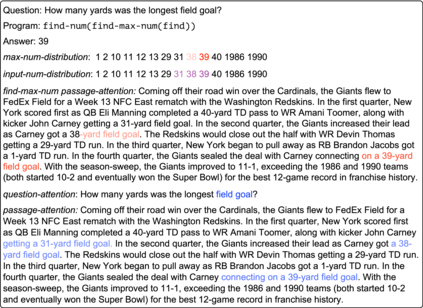

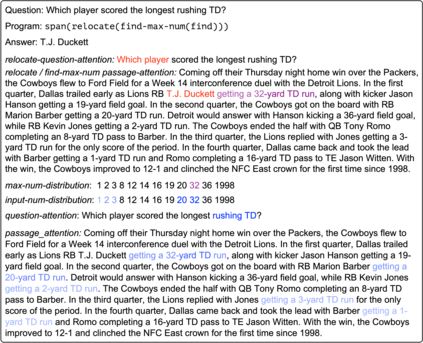

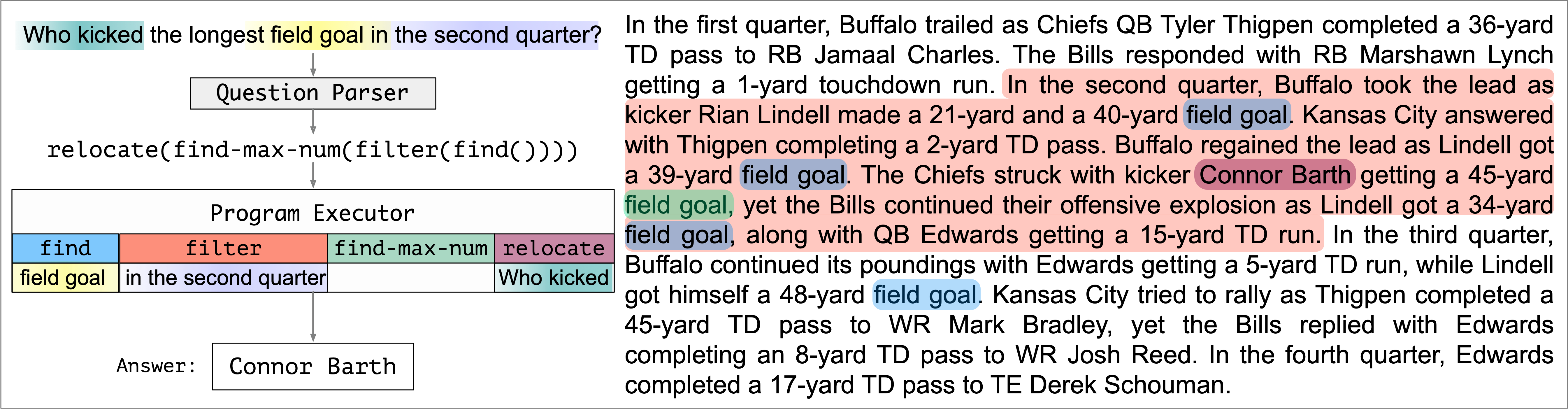

Answering compositional questions that require multiple steps of reasoning against text is challenging, especially when they involve discrete, symbolic operations. Neural module networks (NMNs) learn to parse such questions as executable programs composed of learnable modules, performing well on synthetic visual QA domains. However, we find that it is challenging to learn these models for non-synthetic questions on open-domain text, where a model needs to deal with the diversity of natural language and perform a broader range of reasoning. We extend NMNs by: (a) introducing modules that reason over a paragraph of text, performing symbolic reasoning (such as arithmetic, sorting, counting) over numbers and dates in a probabilistic and differentiable manner; and (b) proposing an unsupervised auxiliary loss to help extract arguments associated with the events in text. Additionally, we show that a limited amount of heuristically-obtained question program and intermediate module output supervision provides sufficient inductive bias for accurate learning. Our proposed model significantly outperforms state-of-the-art models on a subset of the DROP dataset that poses a variety of reasoning challenges that are covered by our modules.
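To make "symbolic reasoning over numbers in a probabilistic and differentiable manner" concrete, here is a minimal sketch, not the authors' implementation. It assumes each module outputs a probability distribution over the numbers mentioned in a paragraph, and that two such distributions are independent. The function names `prob_greater` and `difference_distribution` and the toy values are hypothetical. Plain Python lists stand in for tensors; every operation is a sum of products, so the same computation stays differentiable when written in an autodiff framework.

```python
from collections import defaultdict


def prob_greater(values, p1, p2):
    """Return P(N1 > N2) for independent N1 ~ p1 and N2 ~ p2 over `values`."""
    return sum(
        p1[i] * p2[j]
        for i in range(len(values))
        for j in range(len(values))
        if values[i] > values[j]
    )


def difference_distribution(values, p1, p2):
    """Return the distribution over N1 - N2 by marginalizing over all value pairs."""
    out = defaultdict(float)
    for i, a in enumerate(values):
        for j, b in enumerate(values):
            out[a - b] += p1[i] * p2[j]
    return dict(out)


# Toy example (hypothetical): three numbers found in a paragraph, and two
# soft selections over them produced by upstream modules.
values = [10, 20, 30]
p1 = [0.1, 0.2, 0.7]  # mass mostly on 30
p2 = [0.6, 0.3, 0.1]  # mass mostly on 10

comparison = prob_greater(values, p1, p2)
differences = difference_distribution(values, p1, p2)
```

Because the outputs are expectations over soft selections rather than hard picks, a loss on the final answer can, in principle, propagate gradients back to the modules that produced `p1` and `p2`.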
