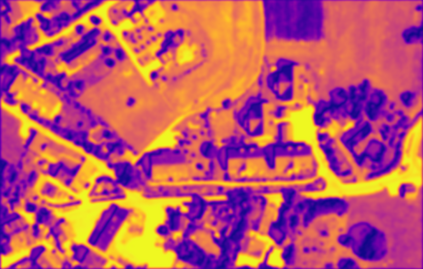

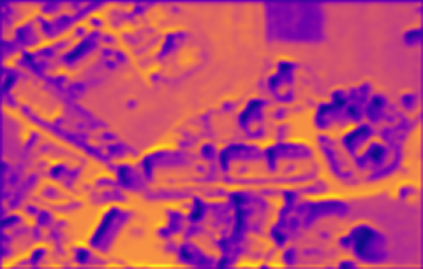

This paper introduces SD-6DoF-ICLK, a learning-based Inverse Compositional Lucas-Kanade (ICLK) pipeline that uses sparse depth information to optimize the relative pose on SE(3) that best aligns two images. To compute this six Degrees-of-Freedom (DoF) relative transformation, the proposed formulation requires sparse depth information in only one of the images, which is often the only depth source available in visual-inertial odometry or Simultaneous Localization and Mapping (SLAM) pipelines. In an optional subsequent step, the framework further refines the feature locations and the relative pose using individual feature alignment and bundle adjustment for pose and structure re-alignment. The resulting sparse point correspondences with subpixel accuracy and the refined relative pose can be used for depth map generation, or the image alignment module can be embedded in an odometry or mapping framework. Experiments with rendered imagery show that a forward pass of SD-6DoF-ICLK takes 145 ms per image pair at a resolution of 752 × 480 pixels per image, and that it vastly outperforms the classical, sparse 6DoF-ICLK algorithm, making it the ideal framework for robust image alignment under severe conditions.
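To make the role of the sparse depth concrete, the sketch below shows the geometric warp that a sparse 6-DoF alignment of this kind relies on: pixels with known depth in one image are back-projected, moved by a candidate SE(3) transform, and re-projected into the other image. This is only an illustration under stated assumptions, not the paper's implementation. The function names (`se3_exp`, `warp_sparse`), the pinhole intrinsics `K`, and the twist ordering (translation first, rotation last) are all hypothetical choices made for this example, and the learned components of SD-6DoF-ICLK are not modeled.

```python
import numpy as np

def hat(w):
    """Skew-symmetric matrix of a 3-vector, so that hat(w) @ x == cross(w, x)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def se3_exp(xi):
    """Exponential map from a 6-vector twist to a 4x4 SE(3) matrix.

    Assumed ordering (hypothetical): xi = (v, w), translation part first.
    """
    v, w = np.asarray(xi[:3], float), np.asarray(xi[3:], float)
    theta = np.linalg.norm(w)
    W = hat(w)
    if theta < 1e-8:
        # First-order approximation for very small rotations.
        R = np.eye(3) + W
        V = np.eye(3) + 0.5 * W
    else:
        A = np.sin(theta) / theta
        B = (1.0 - np.cos(theta)) / theta**2
        C = (theta - np.sin(theta)) / theta**3
        R = np.eye(3) + A * W + B * (W @ W)   # Rodrigues' formula
        V = np.eye(3) + B * W + C * (W @ W)
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = V @ v
    return T

def warp_sparse(pixels, depths, K, T):
    """Re-project sparse pixels (N x 2) with depths (N,) through transform T.

    Depth is needed only in the source image, which matches the setting
    described in the abstract.
    """
    ones = np.ones((pixels.shape[0], 1))
    rays = (np.linalg.inv(K) @ np.hstack([pixels, ones]).T).T  # normalized rays
    points = rays * depths[:, None]                            # 3D points, source frame
    moved = (T[:3, :3] @ points.T).T + T[:3, 3]                # 3D points, target frame
    proj = (K @ moved.T).T
    return proj[:, :2] / proj[:, 2:3]                          # perspective division

# Example with made-up intrinsics and three sparse features.
K = np.array([[400.0, 0.0, 376.0],
              [0.0, 400.0, 240.0],
              [0.0, 0.0, 1.0]])
pixels = np.array([[100.0, 120.0], [376.0, 240.0], [600.0, 400.0]])
depths = np.array([2.0, 2.0, 2.0])
warped = warp_sparse(pixels, depths, K, se3_exp([0.1, 0, 0, 0, 0, 0]))
```

In a Lucas-Kanade style alignment, a photometric residual would be evaluated on patches around the warped locations and the twist updated iteratively until the residual stops decreasing; the inverse compositional variant precomputes the Jacobians on the template image so they need not be recomputed at every iteration.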
