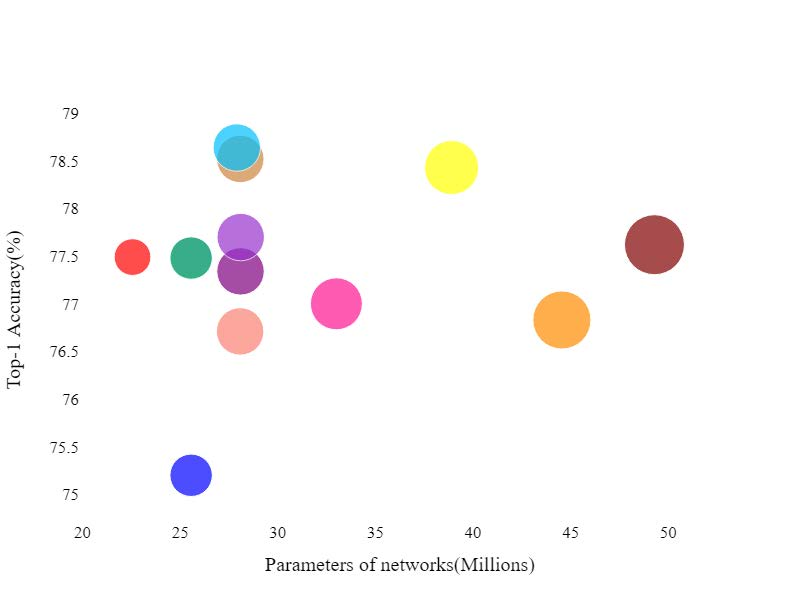

Recently, it has been demonstrated that embedding an attention module into a deep convolutional neural network can effectively improve its performance. In this work, a novel lightweight and effective attention method, the Pyramid Squeeze Attention (PSA) module, is proposed. By replacing the 3x3 convolution with the PSA module in the bottleneck blocks of ResNet, a novel representational block named Efficient Pyramid Squeeze Attention (EPSA) is obtained. The EPSA block can easily be added as a plug-and-play component to a well-established backbone network, yielding significant improvements in model performance. Hence, a simple and efficient backbone architecture named EPSANet is developed in this work by stacking these ResNet-style EPSA blocks. Correspondingly, the proposed EPSANet offers a stronger multi-scale representation ability for various computer vision tasks, including but not limited to image classification, object detection, and instance segmentation. Without bells and whistles, the proposed EPSANet outperforms most state-of-the-art channel attention methods. Compared to SENet-50, it improves Top-1 accuracy on the ImageNet dataset by 1.93%, and, using Mask-RCNN on the MS-COCO dataset, it achieves a larger margin of +2.7 box AP for object detection and +1.7 mask AP for instance segmentation. Our source code is available at: https://github.com/murufeng/EPSANet.
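The abstract names the PSA module and the EPSA block but does not spell out their internals. The NumPy sketch below assumes one plausible reading of the design: the input channels are split into S groups, each group is processed at a different spatial scale, a shared SE-style module produces per-branch channel weights, a softmax across branches normalizes those weights, and the reweighted branches are concatenated. The EPSA block then wraps this in a ResNet-style bottleneck (1x1 conv, PSA in place of the 3x3 conv, 1x1 conv, identity shortcut). The function names (`box_filter`, `se_weight`, `psa`, `epsa_block`), the mean filters standing in for learned k x k convolutions, the kernel sizes (3, 5, 7, 9), the reduction ratio, and the random weights are all illustrative assumptions, not the authors' implementation; the linked repository is the reference for that.

```python
import numpy as np


def box_filter(x, k):
    """Hypothetical stand-in for a learned k x k convolution: a zero-padded
    k x k mean filter applied to each channel of x, shape (C, H, W)."""
    _, h, w = x.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros(x.shape)
    for i in range(k):
        for j in range(k):
            out += xp[:, i:i + h, j:j + w]
    return out / (k * k)


def se_weight(feat, w1, w2):
    """SE-style channel weights for one branch: squeeze, FC, ReLU, FC, sigmoid."""
    z = feat.mean(axis=(1, 2))                     # global average pool -> (Cs,)
    hidden = np.maximum(w1 @ z, 0.0)               # reduce + ReLU -> (Cs // r,)
    return 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # expand + sigmoid -> (Cs,)


def psa(x, kernel_sizes=(3, 5, 7, 9), reduction=4, seed=0):
    """Sketch of a pyramid squeeze attention forward pass on x of shape (C, H, W)."""
    c = x.shape[0]
    s = len(kernel_sizes)
    assert c % s == 0, "channels must split evenly across the S branches"
    cs = c // s
    rng = np.random.default_rng(seed)
    # 1) Split channels into S groups; each group gets its own receptive field.
    feats = np.stack([box_filter(g, k)
                      for g, k in zip(np.split(x, s, axis=0), kernel_sizes)])
    # 2) Per-branch channel weights from one shared SE module (random weights, assumed).
    hidden = max(cs // reduction, 1)
    w1 = rng.standard_normal((hidden, cs)) * 0.1
    w2 = rng.standard_normal((cs, hidden)) * 0.1
    weights = np.stack([se_weight(f, w1, w2) for f in feats])   # (S, Cs)
    # 3) Softmax across the S branches so the scales compete per channel.
    att = np.exp(weights - weights.max(axis=0, keepdims=True))
    att /= att.sum(axis=0, keepdims=True)
    # 4) Reweight each branch and concatenate back to C channels.
    return (feats * att[:, :, None, None]).reshape(x.shape)


def epsa_block(x, mid_channels=8, seed=0):
    """Sketch of a ResNet-style bottleneck with the 3x3 conv replaced by psa().
    1x1 convolutions are modeled as channel-mixing matrices with random weights."""
    c = x.shape[0]
    rng = np.random.default_rng(seed)
    w_in = rng.standard_normal((mid_channels, c)) * 0.1    # 1x1 conv: C -> mid
    w_out = rng.standard_normal((c, mid_channels)) * 0.1   # 1x1 conv: mid -> C
    h = np.maximum(np.einsum("mc,chw->mhw", w_in, x), 0.0)
    h = np.maximum(psa(h, seed=seed), 0.0)
    h = np.einsum("cm,mhw->chw", w_out, h)
    return np.maximum(h + x, 0.0)                          # identity shortcut + ReLU
```

For example, `epsa_block(np.random.default_rng(1).standard_normal((16, 8, 8)))` returns an array of shape `(16, 8, 8)`, so the block keeps the input shape and can be stacked like a ResNet bottleneck. The softmax in step 3 is what makes the attention "across scales": for each channel position, the branch weights sum to 1, so a larger weight on one kernel size means a smaller weight on the others.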
