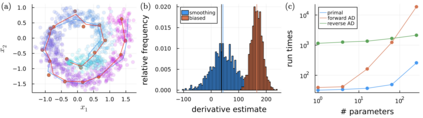

Automatic differentiation (AD), a technique for constructing new programs which compute the derivative of an original program, has become ubiquitous throughout scientific computing and deep learning due to the improved performance afforded by gradient-based optimization. However, AD systems have been restricted to the subset of programs that have a continuous dependence on parameters. Programs that have discrete stochastic behaviors governed by distribution parameters, such as flipping a coin with probability $p$ of being heads, pose a challenge to these systems because the connection between the result (heads vs tails) and the parameters ($p$) is fundamentally discrete. In this paper we develop a new reparameterization-based methodology that allows for generating programs whose expectation is the derivative of the expectation of the original program. We showcase how this method gives an unbiased and low-variance estimator which is as automated as traditional AD mechanisms. We demonstrate unbiased forward-mode AD of discrete-time Markov chains, agent-based models such as Conway's Game of Life, and unbiased reverse-mode AD of a particle filter. Our code is available at https://github.com/gaurav-arya/StochasticAD.jl.
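To make the coin-flip example concrete, here is a minimal sketch of a program whose expectation equals the derivative of the expectation of an original program. It does not use the StochasticAD.jl API. The downstream function `f`, the helper names, and the specific weighting are illustrative assumptions. The sketch draws $X \sim \mathrm{Bernoulli}(p)$ and, whenever the flip comes up tails, records the jump $f(1) - f(0)$ weighted by $1/(1-p)$. Averaging this quantity gives $(1-p) \cdot \frac{f(1)-f(0)}{1-p} = f(1) - f(0)$, which is exactly $\frac{d}{dp}\,\mathbb{E}[f(X)]$.

```python
import random


def f(x):
    # Hypothetical downstream program applied to the coin-flip result.
    # E[f(X)] = f(0) + p * (f(1) - f(0)) = 4 + 5p, so d/dp E[f(X)] = 5.
    return (x + 2) ** 2


def sample_with_derivative(p, rng):
    """Run the program once and return (value, derivative sample).

    Assumes 0 <= p < 1. The derivative sample is nonzero only when the
    flip is tails (x == 0): a small increase in p could turn that tails
    into heads, changing the output by f(1) - f(0), and the 1 / (1 - p)
    weight makes the estimator unbiased.
    """
    x = 1 if rng.random() < p else 0
    if x == 0:
        deriv = (f(1) - f(0)) / (1.0 - p)
    else:
        deriv = 0.0
    return f(x), deriv


def estimate(p, n, seed=0):
    """Monte Carlo averages of the value and of the derivative sample."""
    rng = random.Random(seed)
    total_value = 0.0
    total_deriv = 0.0
    for _ in range(n):
        value, deriv = sample_with_derivative(p, rng)
        total_value += value
        total_deriv += deriv
    return total_value / n, total_deriv / n


if __name__ == "__main__":
    mean_value, mean_deriv = estimate(0.3, 200_000)
    print("estimated E[f(X)]      :", mean_value)
    print("estimated d/dp E[f(X)] :", mean_deriv)
    print("exact d/dp E[f(X)]     :", f(1) - f(0))
```

For a single coin flip the exact derivative is available in closed form, so the sketch only serves as a check on the estimator. The sampling view becomes useful when the flip is one step inside a longer stochastic program, such as a Markov chain, where no closed form exists.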
