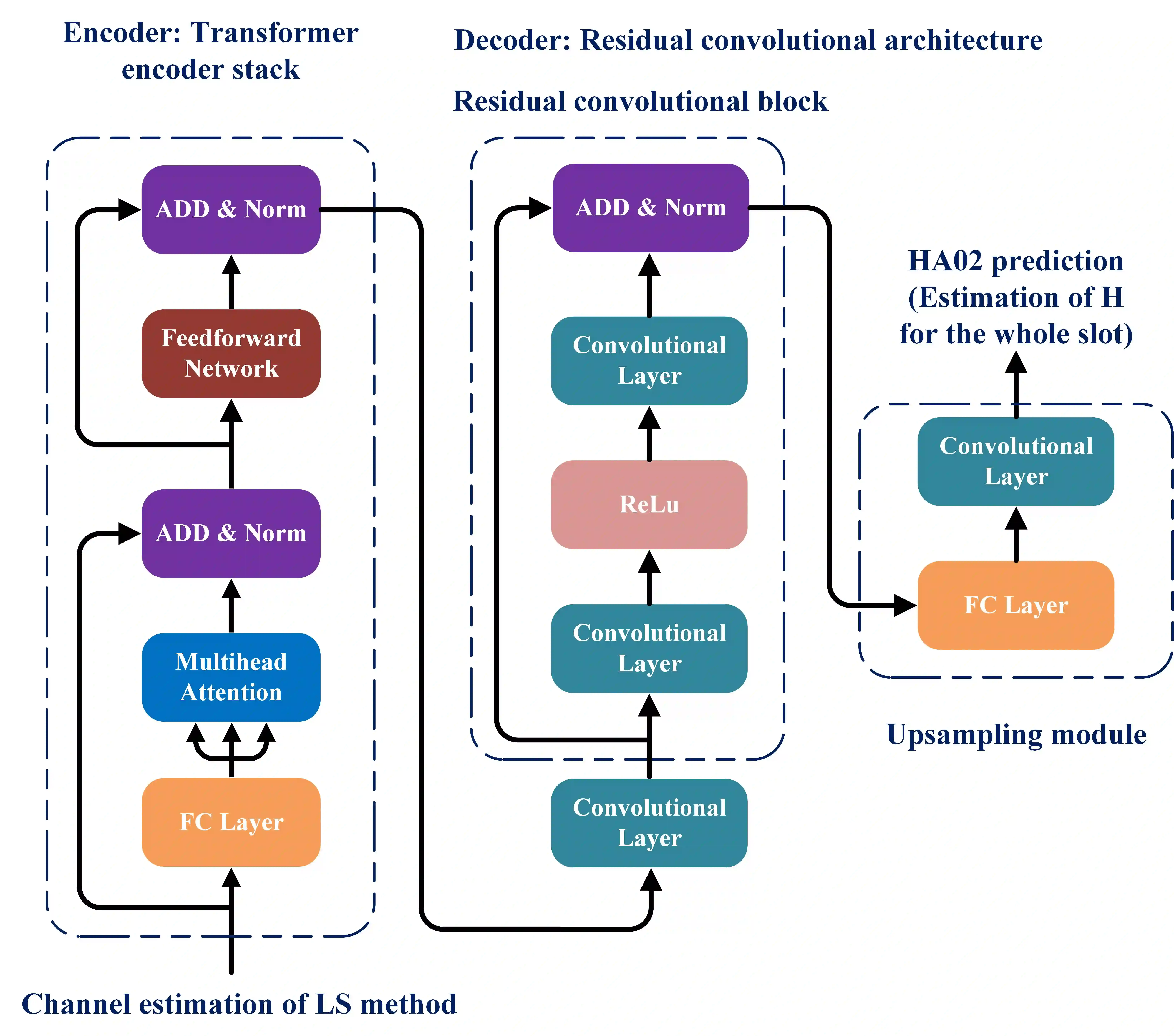

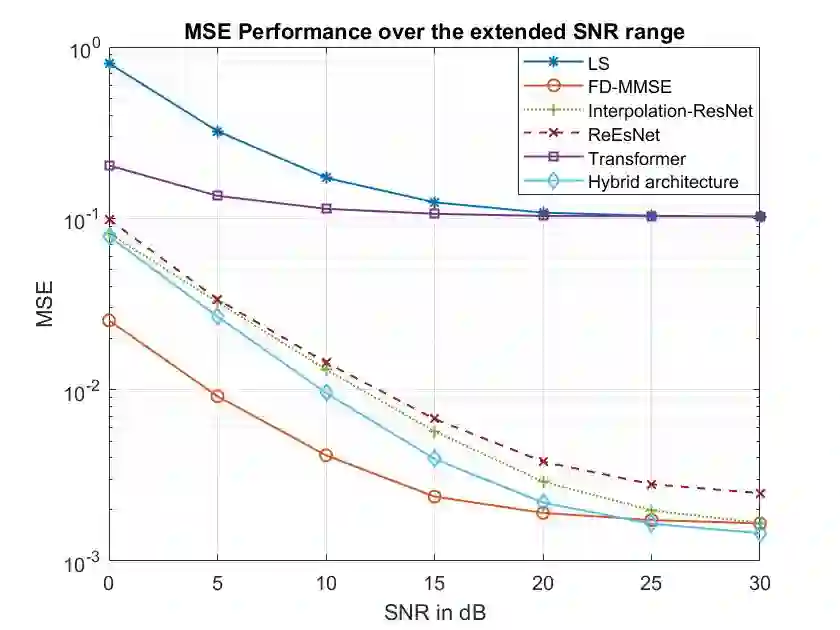

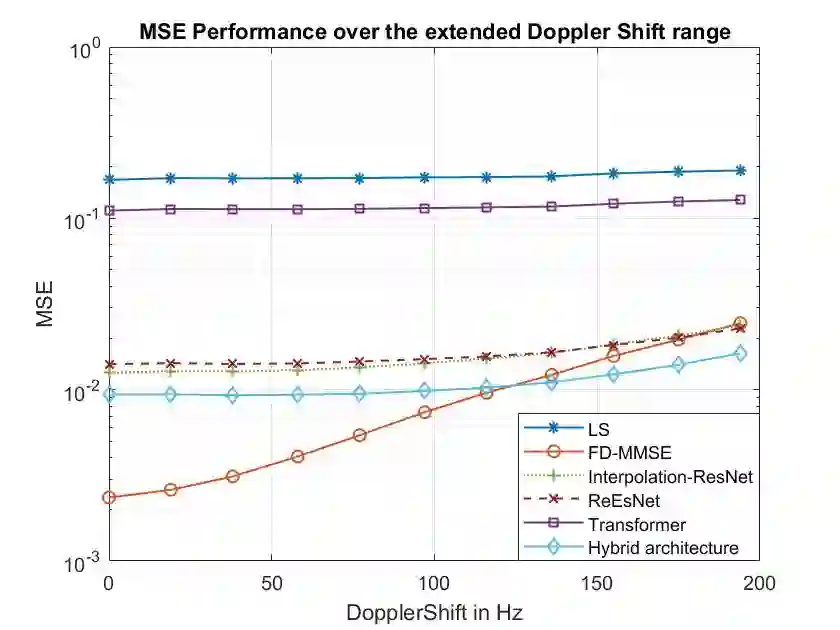

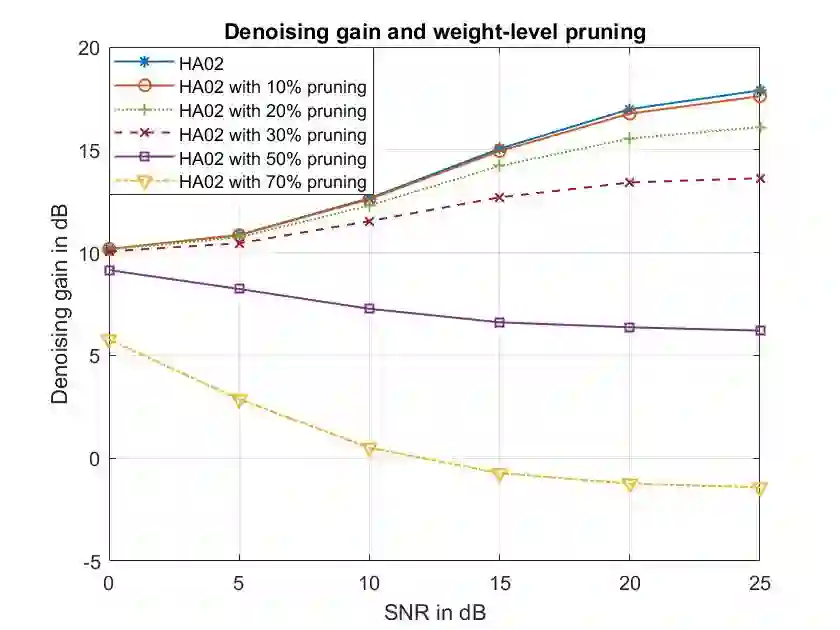

In this paper, we deploy the self-attention mechanism to improve channel estimation for orthogonal frequency-division multiplexing (OFDM) waveforms in the downlink. Specifically, we propose, for the first time, a hybrid encoder-decoder architecture, called HA02, that exploits the attention mechanism to focus on the most important input information. Inspired by the success of the attention mechanism, we use a transformer encoder block as the encoder to exploit the sparsity of the input features, and a residual neural network as the decoder. Simulations using 3GPP channel models show superior estimation performance compared with other candidate neural-network methods for channel estimation.
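The encoder-decoder idea can be illustrated with a small NumPy sketch. This is not the authors' HA02 implementation. Everything below is an assumption made for illustration: the pilot layout (every 6th of 72 subcarriers), the toy 3-tap channel, the layer sizes, the flattening step between encoder and decoder, and all function names (`encoder_block`, `residual_decoder`, `estimate_channel`, `init_params`). Each pilot's least-squares (LS) estimate becomes one token with features (Re, Im). A single-head, post-LN transformer encoder block attends across the pilots, and a residual MLP decoder maps the encoded tokens to a full-band channel estimate. The weights are random and untrained, so the sketch shows only data flow and tensor shapes, not estimation accuracy.

```python
import numpy as np

rng = np.random.default_rng(0)

def layer_norm(x, eps=1e-5):
    # Normalize each token's feature vector to zero mean and unit variance.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    # Single-head scaled dot-product self-attention across the pilot tokens.
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def encoder_block(x, p):
    # Post-LN transformer encoder block: attention and a ReLU feed-forward
    # layer, each wrapped in a skip connection followed by layer norm.
    x = layer_norm(x + self_attention(x, p["wq"], p["wk"], p["wv"]) @ p["wo"])
    ffn = np.maximum(0.0, x @ p["w1"]) @ p["w2"]
    return layer_norm(x + ffn)

def residual_decoder(z, blocks, w_out):
    # Residual MLP (hypothetical): each block adds a transformed copy of its input.
    for w_a, w_b in blocks:
        z = z + np.maximum(0.0, z @ w_a) @ w_b
    return z @ w_out

def init_params(n_pilots, n_sc, d_model=16, d_ff=32, d_hidden=64, n_res=2):
    # Small random weights; a real model would learn these from training data.
    def g(*shape):
        return 0.1 * rng.standard_normal(shape)
    enc = {"w_in": g(2, d_model), "wq": g(d_model, d_model), "wk": g(d_model, d_model),
           "wv": g(d_model, d_model), "wo": g(d_model, d_model),
           "w1": g(d_model, d_ff), "w2": g(d_ff, d_model)}
    dec = {"w_flat": g(n_pilots * d_model, d_hidden),
           "blocks": [(g(d_hidden, d_hidden), g(d_hidden, d_hidden)) for _ in range(n_res)],
           "w_out": g(d_hidden, 2 * n_sc)}
    return enc, dec

def estimate_channel(h_ls_pilots, n_sc, enc, dec):
    # Tokens = pilot positions; features = (Re, Im) of the LS estimate.
    x = np.stack([h_ls_pilots.real, h_ls_pilots.imag], axis=-1) @ enc["w_in"]
    x = encoder_block(x, enc)
    # Flattening keeps the token order, so the decoder sees pilot positions.
    z = x.reshape(-1) @ dec["w_flat"]
    out = residual_decoder(z, dec["blocks"], dec["w_out"])
    return out[:n_sc] + 1j * out[n_sc:]  # real/imag halves -> complex response

# Toy OFDM setup (assumed): 72 subcarriers, a pilot on every 6th one.
n_sc, pilot_idx = 72, np.arange(0, 72, 6)
taps = (rng.standard_normal(3) + 1j * rng.standard_normal(3)) / np.sqrt(6)
h_true = np.fft.fft(taps, n_sc)  # frequency response of a 3-tap channel
x_p = np.ones(len(pilot_idx), dtype=complex)  # known pilot symbols
noise = 0.05 * (rng.standard_normal(len(pilot_idx)) + 1j * rng.standard_normal(len(pilot_idx)))
y_p = h_true[pilot_idx] * x_p + noise
h_ls = y_p / x_p  # least-squares estimate at the pilot subcarriers

enc, dec = init_params(len(pilot_idx), n_sc)
h_hat = estimate_channel(h_ls, n_sc, enc, dec)
print(h_hat.shape)  # (72,)
```

Self-attention lets every pilot token weight every other pilot, which is one plausible way an encoder can "focus on the most important input information". The residual connections in the decoder keep the mapping close to identity-plus-correction, a common choice for easing training. In practice, the network would be trained on pairs of LS estimates and true channels generated from 3GPP channel models, with a loss such as the mean squared error.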

翻译:在本文中,我们运用了自我注意机制来改进对下行链路中正方位频率分向多氧化波形的频道估计。具体地说,我们首次提出了一个新的混合编码器解码器结构(称为HA02 ), 利用关注机制集中关注最重要的输入信息。特别是,我们实施了一个变压器编码器块作为编码器,以实现输入功能的宽度,并使用一个剩余神经网络作为分别受关注机制成功启发的解码器。使用3GPP频道模型,我们的模拟显示,与其他候选的频道估计神经网络方法相比,我们的估计性能更高。