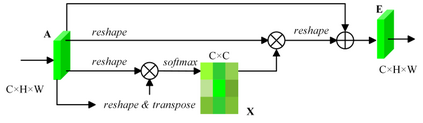

Semantic segmentation of remote sensing images plays an important role in land resource management, yield estimation, and economic assessment. Although convolutional neural networks have substantially improved the semantic segmentation of remote sensing images, standard models still have several limitations. First, in encoder-decoder architectures such as U-Net, the use of multi-scale features leads to overuse of information: similar low-level features are exploited multiple times at multiple scales. Second, long-range dependencies in the feature maps are not sufficiently explored, so the feature representations associated with each semantic class are suboptimal. Third, although the dot-product attention mechanism has been introduced and widely used in semantic segmentation to model long-range dependencies, its high time and space complexity impedes the use of attention in application scenarios with large inputs. In this paper, we propose a Multi-Attention-Network (MANet) to remedy these drawbacks, which extracts contextual dependencies through multiple efficient attention mechanisms. A novel attention mechanism with linear complexity, named kernel attention, is proposed to alleviate the high computational demand of attention. Based on kernel attention and channel attention, we integrate local feature maps extracted by ResNeXt-101 with their corresponding global dependencies and adaptively emphasize interdependent channel maps. Experiments conducted on two remote sensing image datasets captured by different satellites demonstrate that MANet outperforms DeepLab V3+, PSPNet, FastFCN, and other baseline algorithms.
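
To make the linear-complexity claim concrete, the following is a minimal sketch of how a kernelized attention can avoid the N × N similarity matrix. It is not the MANet implementation. The abstract does not specify the kernel, so the softplus feature map and all function names here (`feature_map`, `quadratic_attention`, `linear_attention`) are assumptions chosen for illustration. The idea is that if the similarity between a query and a key is written as φ(q)·φ(k), the product (φ(Q)φ(K)ᵀ)V can be regrouped as φ(Q)(φ(K)ᵀV), which costs O(N·d²) instead of O(N²·d) for N positions and feature dimension d.

```python
import math

def softplus(x):
    # Numerically stable log(1 + e^x); an assumed positive feature map.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def matmul(a, b):
    # Plain nested-list matrix product: (n x m) @ (m x p) -> (n x p).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def feature_map(m):
    # Apply the assumed kernel feature map element-wise.
    return [[softplus(x) for x in row] for row in m]

def quadratic_attention(q, k, v):
    """Kernelized attention the naive way: builds the full N x N matrix."""
    pq, pk = feature_map(q), feature_map(k)
    sim = matmul(pq, transpose(pk))   # N x N similarities, all positive
    out = matmul(sim, v)              # N x d_v weighted sums
    # Normalize each output row by the sum of that row's similarities.
    return [[x / sum(s) for x in o] for o, s in zip(out, sim)]

def linear_attention(q, k, v):
    """Same result, regrouped so no N x N matrix is ever formed."""
    pq, pk = feature_map(q), feature_map(k)
    kv = matmul(transpose(pk), v)             # d x d_v summary of keys and values
    k_sum = [sum(col) for col in zip(*pk)]    # length-d sum of key features
    num = matmul(pq, kv)                      # N x d_v numerators
    den = [sum(a * b for a, b in zip(row, k_sum)) for row in pq]  # N normalizers
    return [[x / z for x in row] for row, z in zip(num, den)]
```

Both functions compute the same output up to floating-point error; only the order of multiplication differs. For an image with many pixels, N is far larger than d, which is why the regrouped form is attractive for large inputs.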
