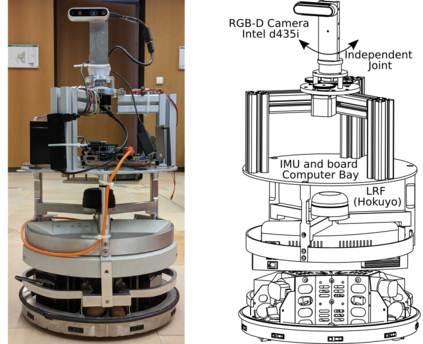

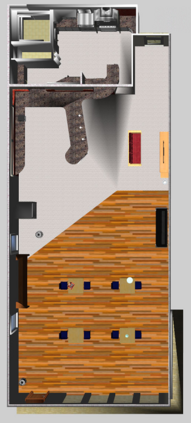

In active Visual SLAM (V-SLAM), a robot uses the information from its cameras to control its own motion and autonomously map the environment. Cameras are usually rigidly mounted on the robot's body, which limits the degrees of freedom available for acquiring visual information. In this work, we overcome this limitation by mounting an independently rotating camera on the robot base. This lets us continuously control the camera heading and reach the desired orientation for active V-SLAM without rotating the robot itself. However, the additional degree of freedom introduces additional estimation uncertainty that must be accounted for. We do this by extending the robot's state estimate to include the camera state and estimating their uncertainties jointly. We build our method on a state-of-the-art active V-SLAM approach for omnidirectional robots and evaluate it through rigorous simulation and real-robot experiments. Compared with the baseline, we obtain more accurate maps with lower energy consumption while retaining the benefits of the active approach. We also demonstrate that our method generalizes easily to non-omnidirectional robotic platforms, which was a limitation of the previous approach. Code and implementation details are available as open source.
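
To illustrate why the camera state is estimated jointly with the robot state, the following minimal sketch propagates uncertainty to the camera's global heading. It is not the authors' implementation. It assumes a planar robot whose camera heading is the sum of the robot yaw and a relative pan angle, and the names `camera_heading`, `robot_yaw`, `cam_pan`, and `joint_cov` are hypothetical. Under that assumption the Jacobian of the heading with respect to the two angles is `[1, 1]`, so the heading variance includes the cross-covariance terms that separate, independent estimates would ignore.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def camera_heading(robot_yaw, cam_pan, cov):
    """Return the global camera heading and its variance.

    Assumption (not from the paper): heading = robot_yaw + cam_pan.
    `cov` is the 2x2 joint covariance of (robot_yaw, cam_pan) in rad^2.
    With Jacobian J = [1, 1], the variance is J * cov * J^T, i.e. the
    sum of all four entries of `cov`.
    """
    heading = wrap_angle(robot_yaw + cam_pan)
    var = cov[0][0] + cov[0][1] + cov[1][0] + cov[1][1]
    return heading, var


# Hypothetical numbers: correlated yaw and pan estimates.
joint_cov = [[0.04, 0.01],
             [0.01, 0.02]]
heading, var = camera_heading(math.radians(170.0), math.radians(30.0), joint_cov)

# Same marginal variances, but treated as independent (no cross terms).
indep_cov = [[0.04, 0.0],
             [0.0, 0.02]]
_, var_indep = camera_heading(math.radians(170.0), math.radians(30.0), indep_cov)
```

With these illustrative numbers, the joint covariance yields a larger heading variance than the independent one, because the positive cross-covariance between robot yaw and camera pan adds to the total.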
