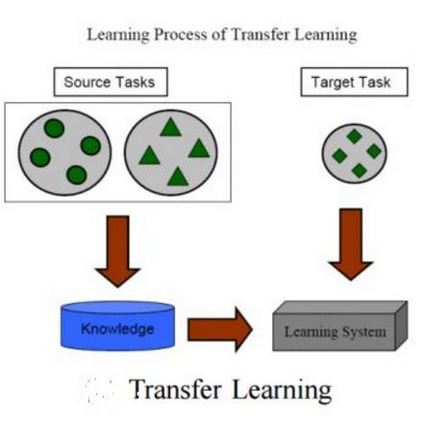

Transfer learning is essential when ample data are available from the source domain but labeled data from the target domain are scarce. We develop estimators that achieve the minimax linear risk for linear regression problems under distribution shift. Our algorithms cover different transfer learning settings, including covariate shift and model shift. We also consider the cases in which the data are generated from either linear or general nonlinear models. We show that, for various source/target distributions, the linear minimax estimators are within an absolute constant of the minimax risk, even when nonlinear estimators are allowed.

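To make the covariate-shift setting concrete, the sketch below computes the worst-case excess target risk of a linear estimator $\hat\beta = Ay$ over the ball $\|\beta\| \le r$. It assumes $y = X\beta + \varepsilon$ with isotropic noise of variance $\sigma^2$ and a known target covariance $\Sigma_T$, in which case the risk is $r^2 \lambda_{\max}\big((AX - I)^\top \Sigma_T (AX - I)\big) + \sigma^2 \operatorname{tr}(A^\top \Sigma_T A)$. It then tunes a ridge estimator, one particular family of linear estimators, to minimize that quantity. The covariances, sample size, noise level, radius, and the helper names `ridge_operator` and `worst_case_target_risk` are hypothetical choices for illustration. This is not the estimator constructed in the abstract, whose details are not given here.

```python
import numpy as np


def ridge_operator(X, lam):
    """Return A such that the ridge estimate is beta_hat = A @ y (linear in y)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T)


def worst_case_target_risk(A, X, sigma_T, noise_var, radius):
    """Worst-case excess target risk of beta_hat = A @ y over ||beta|| <= radius.

    Assumed model: y = X @ beta + eps, with E[eps] = 0 and Cov(eps) = noise_var * I.
    E||Sigma_T^{1/2}(beta_hat - beta)||^2
        = beta^T B^T Sigma_T B beta + noise_var * tr(A^T Sigma_T A),  B = A X - I.
    The sup of the first term over the ball is radius^2 * lambda_max(B^T Sigma_T B).
    """
    d = X.shape[1]
    B = A @ X - np.eye(d)
    bias = radius**2 * np.linalg.eigvalsh(B.T @ sigma_T @ B)[-1]  # eigvalsh sorts ascending
    variance = noise_var * np.trace(A.T @ sigma_T @ A)
    return bias + variance


# Hypothetical covariate shift: the source rarely explores the directions the target cares about.
rng = np.random.default_rng(0)
n, d = 50, 5
sigma_S = np.diag([1.0, 1.0, 1.0, 0.1, 0.1])  # source covariance (assumed)
sigma_T = np.diag([0.1, 0.1, 0.1, 1.0, 1.0])  # target covariance (assumed)
X = rng.multivariate_normal(np.zeros(d), sigma_S, size=n)  # labeled source samples
noise_var, radius = 1.0, 1.0

# Search the one-dimensional ridge path for the smallest worst-case target risk.
lams = np.logspace(-3, 3, 61)
risks = [worst_case_target_risk(ridge_operator(X, lam), X, sigma_T, noise_var, radius)
         for lam in lams]
best = lams[int(np.argmin(risks))]
print(f"best lambda {best:.3g}, worst-case target risk {min(risks):.3f}")
```

Because every ridge estimator is linear in $y$, the best worst-case risk found on the ridge path is an upper bound on the minimax linear risk; optimizing over all matrices $A$ can only match or improve it.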