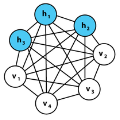

We study a generic ensemble of deep belief networks which is parametrized by the distribution of energy levels of the hidden states of each layer. We show that, within a random energy approach, statistical dependence can propagate from the visible to deep layers only if each layer is tuned close to the critical point during learning. As a consequence, efficiently trained learning machines are characterised by a broad distribution of energy levels. The analysis of Deep Belief Networks and Restricted Boltzmann Machines on different datasets confirms these conclusions.
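To make the notion of a critical point in a random energy approach concrete, here is a minimal sketch using the classic random energy model, not the layer ensemble studied in the paper. It assumes 2^N states with i.i.d. Gaussian energies of variance N/2, for which the textbook critical inverse temperature is β_c = 2√(ln 2). The function names, system size, and seed are illustrative choices, not taken from the source. The sketch prints the participation ratio Σ p_i² of the Boltzmann weights, which is tiny when many states share the probability mass and of order one when a few low-energy states dominate.

```python
import math
import random


def sample_rem_energies(n_spins, rng):
    """Draw 2**n_spins i.i.d. Gaussian energies with variance n_spins / 2 (assumed convention)."""
    sigma = math.sqrt(n_spins / 2.0)
    return [rng.gauss(0.0, sigma) for _ in range(2 ** n_spins)]


def boltzmann_weights(energies, beta):
    """Return normalized weights p_i proportional to exp(-beta * E_i)."""
    # Shift by the minimum energy so every exponent is <= 0 and exp() cannot overflow.
    e_min = min(energies)
    unnormalized = [math.exp(-beta * (e - e_min)) for e in energies]
    z = sum(unnormalized)
    return [w / z for w in unnormalized]


def participation_ratio(weights):
    """Sum of squared weights: 1 / (number of states) if uniform, 1 if one state dominates."""
    return sum(w * w for w in weights)


# Critical inverse temperature of the random energy model for the variance used above.
BETA_C = 2.0 * math.sqrt(math.log(2.0))

rng = random.Random(0)  # fixed seed, illustrative only
energies = sample_rem_energies(12, rng)

for factor in (0.0, 0.5, 1.0, 2.0):
    y = participation_ratio(boltzmann_weights(energies, factor * BETA_C))
    print(f"beta = {factor:.1f} * beta_c  ->  sum p_i^2 = {y:.4f}")
```

At a size this small the crossover around β_c is smooth rather than sharp, so the output should be read as a qualitative illustration only.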

