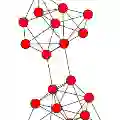

This paper presents our Facial Action Unit (AU) recognition submission to the fifth Affective Behavior Analysis in-the-wild (ABAW) Competition. Our approach consists of three main modules: (i) a pre-trained facial representation encoder that produces a strong facial representation from each face image in the input sequence; (ii) an AU-specific feature generator that learns a dedicated set of AU features from each facial representation; and (iii) a spatio-temporal graph learning module that constructs a spatio-temporal graph representation. This graph representation describes the AUs contained in all frames. It predicts the occurrence of each AU from two sources: the spatial information modeled within the corresponding face and the temporal dynamics learned across frames. The experimental results show that our approach outperforms the baseline, and that spatio-temporal graph representation learning gives our model the best results among all ablated systems. Our model ranked 4th in the AU recognition track of the 5th ABAW Competition.
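The three-module pipeline can be illustrated with a minimal NumPy sketch. It is not the submission's implementation, and it rests on several assumptions. The pre-trained encoder is replaced by a hypothetical single linear layer. The graph construction is also assumed: within a frame, each AU node links to its top-k most similar AUs by cosine similarity, and across time, each AU node links to the same AU in adjacent frames. A single symmetric-normalised graph convolution is likewise chosen only for illustration. All function names, parameter names (`W_enc`, `W_au`, `W_gcn`, `w_cls`, `b_cls`), and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def encode_frames(frames, W_enc):
    """Hypothetical stand-in for the pre-trained facial representation encoder:
    one linear projection + ReLU. frames: (T, D_in) -> (T, D)."""
    return np.maximum(frames @ W_enc, 0.0)

def au_specific_features(reps, W_au):
    """AU-specific feature generator: a separate projection per AU.
    reps: (T, D), W_au: (N, D, C) -> (T, N, C)."""
    return np.maximum(np.einsum("td,ndc->tnc", reps, W_au), 0.0)

def build_st_adjacency(au_feats, k=2):
    """Assumed spatio-temporal graph over T*N nodes (node index = t*N + n).
    Spatial edges: top-k cosine-similar AUs within each frame.
    Temporal edges: the same AU in adjacent frames. Includes self-loops."""
    T, N, _ = au_feats.shape
    A = np.eye(T * N)
    normed = au_feats / (np.linalg.norm(au_feats, axis=-1, keepdims=True) + 1e-8)
    for t in range(T):
        sim = normed[t] @ normed[t].T           # (N, N) similarity within frame t
        np.fill_diagonal(sim, -np.inf)          # never pick a node as its own neighbour
        nbrs = np.argsort(-sim, axis=1)[:, :k]  # top-k neighbours per AU (assumes N > k)
        for i in range(N):
            for j in nbrs[i]:
                A[t * N + i, t * N + j] = A[t * N + j, t * N + i] = 1.0
    for t in range(T - 1):
        for i in range(N):
            A[t * N + i, (t + 1) * N + i] = A[(t + 1) * N + i, t * N + i] = 1.0
    return A

def gcn_layer(A, H, W):
    """One graph convolution: relu(D^-1/2 A D^-1/2 H W)."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    A_hat = A * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_hat @ H @ W, 0.0)

def predict_aus(frames, params, k=2):
    """Returns per-frame, per-AU occurrence probabilities of shape (T, N)."""
    reps = encode_frames(frames, params["W_enc"])
    feats = au_specific_features(reps, params["W_au"])           # (T, N, C)
    T, N, C = feats.shape
    A = build_st_adjacency(feats, k)
    H = gcn_layer(A, feats.reshape(T * N, C), params["W_gcn"])   # (T*N, C)
    H = H.reshape(T, N, C)
    # Per-AU linear classifier: logit[t, n] = H[t, n] . w_cls[n] + b_cls[n]
    logits = np.einsum("tnc,nc->tn", H, params["w_cls"]) + params["b_cls"]
    return 1.0 / (1.0 + np.exp(-logits))

# Illustrative sizes only: 8 frames, 12 AUs.
T, D_in, D, N, C = 8, 64, 32, 12, 16
params = {
    "W_enc": rng.normal(scale=0.1, size=(D_in, D)),
    "W_au": rng.normal(scale=0.1, size=(N, D, C)),
    "W_gcn": rng.normal(scale=0.1, size=(C, C)),
    "w_cls": rng.normal(scale=0.1, size=(N, C)),
    "b_cls": np.zeros(N),
}
frames = rng.normal(size=(T, D_in))
probs = predict_aus(frames, params)
print(probs.shape)  # (8, 12)
```

Putting every AU of every frame into one graph lets a single convolution mix spatial context (co-occurring AUs in the same face) and temporal context (the same AU in neighbouring frames). A trained system would learn these weights end to end rather than sampling them randomly.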

翻译:本文介绍我们在第五届野外情感行为分析竞赛(ABAW)中提交的人脸动作单位(AU)识别方案。我们的方法包含三个主要模块:(i)一个经过预训练的面部表示编码器,用于从输入序列中的每个输入面部图像产生强面部表示;(ii)一种AU特定的特征生成器,用于从每个面部表示中专门学习一组AU特征;和(iii)一种时空图学习模块,用于构建时空图表示。该图表示描述了所有帧中包含的AU,并基于相应面部内模拟的空间信息和帧之间学习的时间动态来预测每个AU的发生。实验结果表明,我们的方法优于基准线,且时空图表示学习使我们的模型在所有消融系统中产生了最好的结果。我们的模型在第五届ABAW竞赛的AU识别赛道中排名第四。