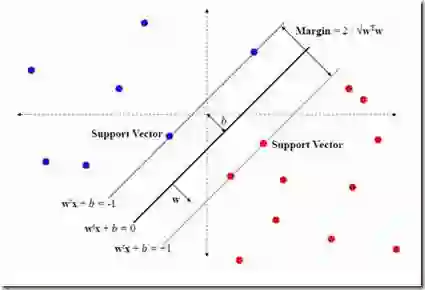

This paper considers distributed optimization algorithms, with application to binary classification via distributed support vector machines (D-SVM), over multi-agent networks subject to link nonlinearities. The agents cooperatively solve a consensus-constrained distributed optimization problem via continuous-time dynamics, while the links are subject to strongly sign-preserving odd nonlinearities. Logarithmic quantization and clipping (saturation) are two examples of such nonlinearities. In contrast to the existing literature, which mostly considers ideal links and perfect information exchange over linear channels, we show how general sector-bounded models affect convergence to the optimizer (i.e., the SVM classifier) over dynamic balanced directed networks. In general, any odd sector-bounded nonlinear mapping can be applied to our dynamics. The main challenge is to show that the proposed system dynamics always have one zero eigenvalue (associated with the consensus) while all other eigenvalues have negative real parts. This is done using arguments from matrix perturbation theory. The solution is then shown to converge to the agreement state under certain conditions. For example, the bound on the gradient tracking (GT) step size is tighter than in the linear case by factors related to the upper and lower sector bounds. To the best of our knowledge, no existing work in the distributed optimization and learning literature considers non-ideal link conditions.
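To make the two example nonlinearities concrete, the following is a minimal sketch, not the paper's implementation. The function names, the quantization parameter `rho`, the clipping level `kappa`, and the sample grid are all illustrative assumptions. It checks numerically that both mappings are odd and that the ratio g(x)/x stays inside a positive sector on the sampled range.

```python
import math

def log_quantize(x: float, rho: float = 0.25) -> float:
    """Logarithmic quantizer (assumed form): round ln|x| to the nearest multiple of rho."""
    if x == 0.0:
        return 0.0
    level = rho * round(math.log(abs(x)) / rho)
    return math.copysign(math.exp(level), x)

def clip(x: float, kappa: float = 1.0) -> float:
    """Clipping (saturation) at the assumed level +/- kappa."""
    return max(-kappa, min(kappa, x))

def sector_ratios(g, samples):
    """Return g(x)/x for every nonzero sample; the sector bounds are its min and max."""
    return [g(x) / x for x in samples if x != 0.0]

# Hypothetical sample grid on [-5, 5], excluding zero.
samples = [k / 10 for k in range(-50, 51) if k != 0]

quant_ratios = sector_ratios(log_quantize, samples)
clip_ratios = sector_ratios(clip, samples)

# Oddness: g(-x) == -g(x) on every sample.
quant_is_odd = all(log_quantize(-x) == -log_quantize(x) for x in samples)
clip_is_odd = all(clip(-x) == -clip(x) for x in samples)

print("quantizer sector:", min(quant_ratios), max(quant_ratios))
print("clipping sector: ", min(clip_ratios), max(clip_ratios))
```

For this quantizer form the ratio is confined to [exp(-rho/2), exp(rho/2)] for every nonzero input, because rounding shifts ln|x| by at most rho/2. Clipping only has a positive lower sector bound on a bounded input range, here kappa divided by the largest sampled magnitude.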
