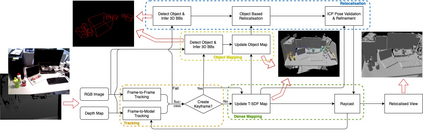

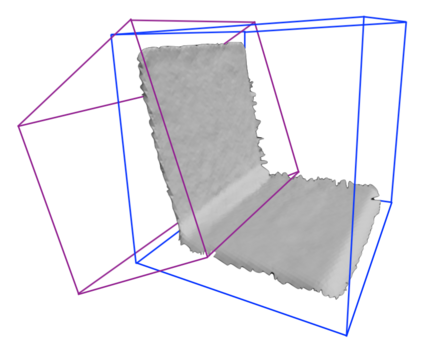

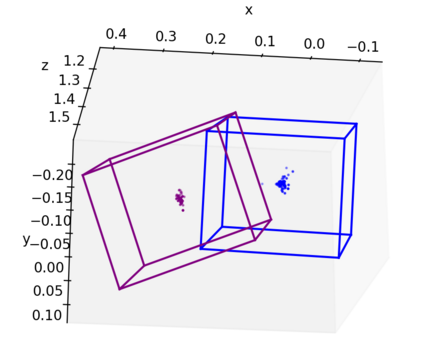

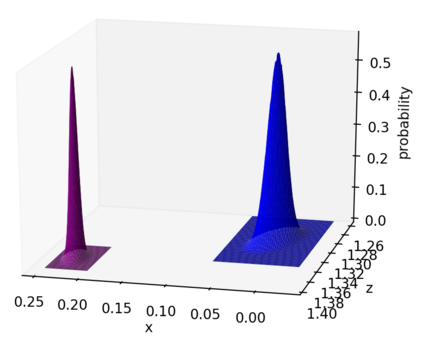

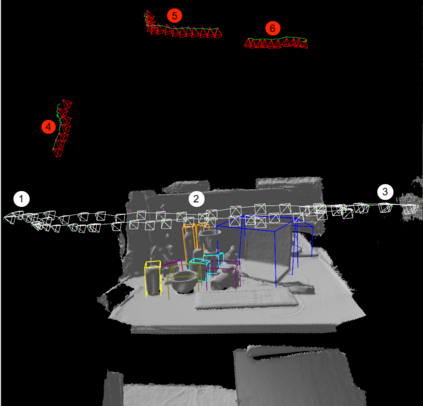

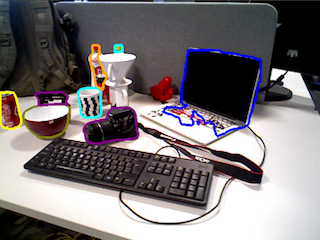

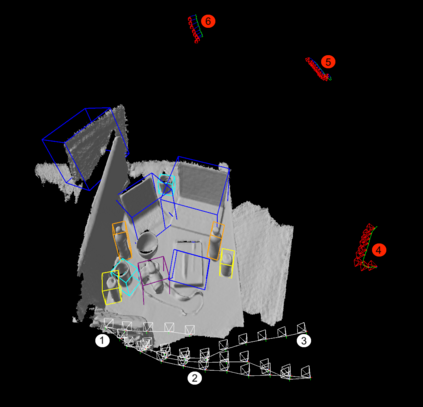

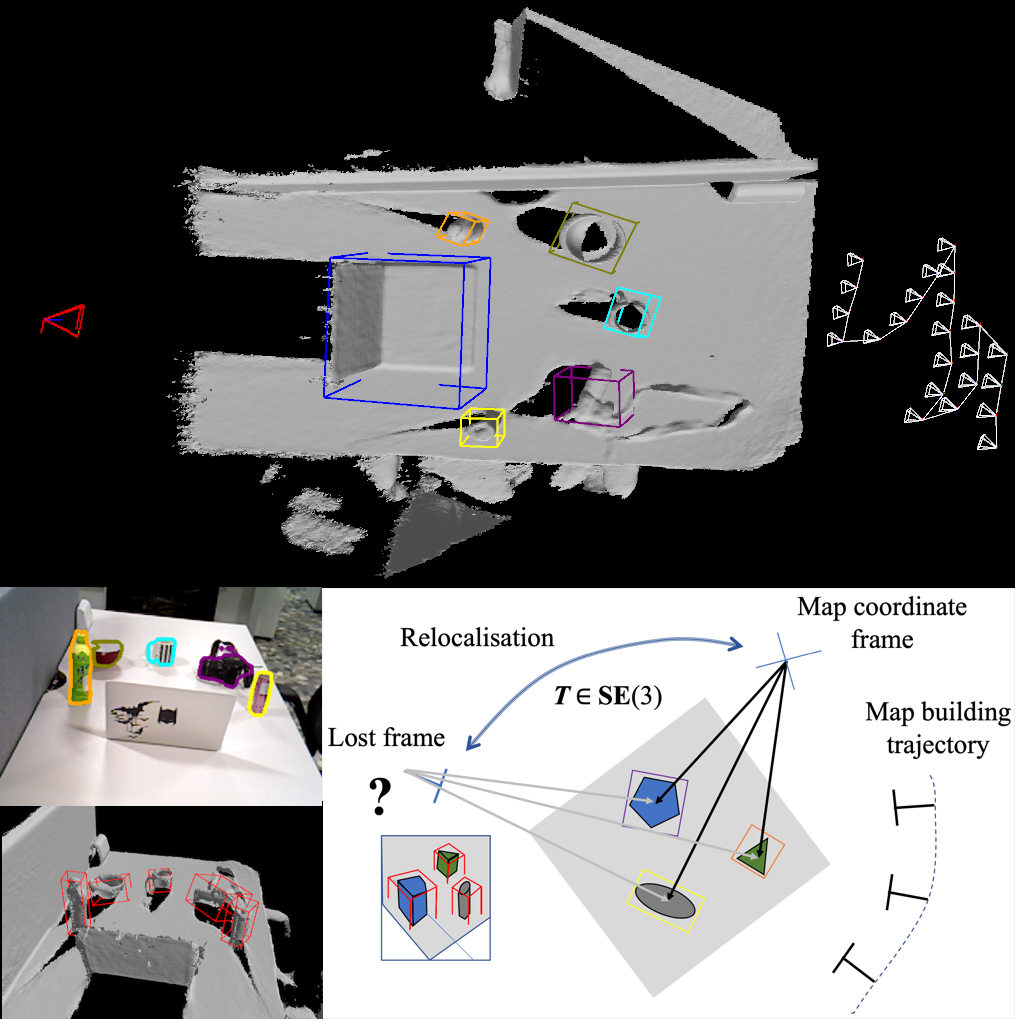

We propose a novel object-augmented RGB-D SLAM system that constructs a consistent object map and relocalises using the centroids of objects in that map. The approach aims to overcome the view dependence of appearance-based relocalisation methods that rely on point features or images. During map construction, a pre-trained neural network detects objects and estimates their 6D poses from RGB-D data, and an incremental probabilistic model aggregates these estimates over time to create the object map. During relocalisation, the same network extracts objects of interest in the 'lost' frames. Pairwise geometric matching finds correspondences between map and frame objects; probabilistic absolute orientation, followed by iterative closest point applied to dense depth maps and object centroids, then yields the relocalised pose. Experiments in desktop environments demonstrate very high success rates even for frames whose viewpoints differ widely from those used to construct the map, significantly outperforming two appearance-based methods.
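
To make the absolute orientation step concrete, the sketch below recovers a rigid transform from matched object centroids using the standard SVD-based least-squares solution. It is only an illustration under stated assumptions: correspondences are taken as already established and error-free, the solver is the plain (non-probabilistic) variant rather than the probabilistic formulation the abstract refers to, and the function name `absolute_orientation` and the sample centroids are hypothetical.

```python
import numpy as np


def absolute_orientation(frame_pts, map_pts):
    """Least-squares rigid transform (R, t) such that map_pts ~= R @ frame_pts + t.

    Both inputs are (N, 3) arrays of matched centroids, N >= 3 and not collinear.
    This is the plain SVD solution, not a probabilistic variant (assumption).
    """
    frame_pts = np.asarray(frame_pts, dtype=float)
    map_pts = np.asarray(map_pts, dtype=float)

    # Centre both point sets on their means.
    mu_f = frame_pts.mean(axis=0)
    mu_m = map_pts.mean(axis=0)

    # 3x3 cross-covariance between centred frame and map centroids.
    H = (frame_pts - mu_f).T @ (map_pts - mu_m)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection: force det(R) = +1.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_m - R @ mu_f
    return R, t


# Hypothetical example: four object centroids seen in a 'lost' frame, and the
# same objects in the map after a 30 degree yaw and a translation.
theta = np.deg2rad(30.0)
R_true = np.array([
    [np.cos(theta), -np.sin(theta), 0.0],
    [np.sin(theta), np.cos(theta), 0.0],
    [0.0, 0.0, 1.0],
])
t_true = np.array([0.5, -0.2, 1.0])
frame_centroids = np.array([
    [0.0, 0.0, 1.0],
    [0.4, 0.1, 1.2],
    [-0.3, 0.5, 0.9],
    [0.2, -0.4, 1.5],
])
map_centroids = frame_centroids @ R_true.T + t_true

R_est, t_est = absolute_orientation(frame_centroids, map_centroids)
```

In a pipeline like the one described, such an estimate would serve only as an initial pose, with the iterative closest point step refining it against the dense depth maps and object centroids.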
