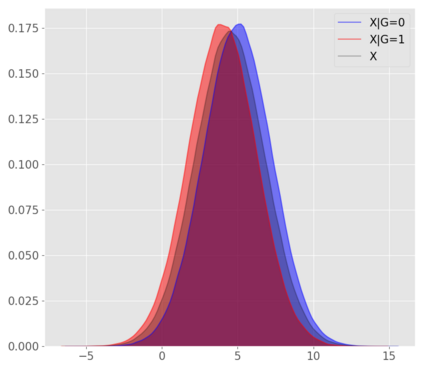

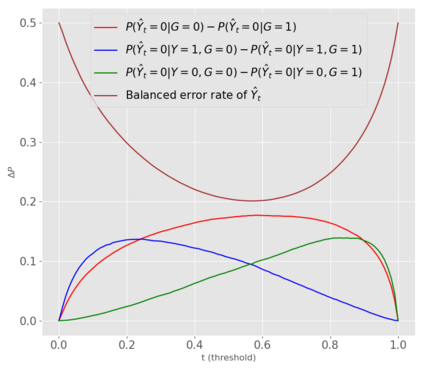

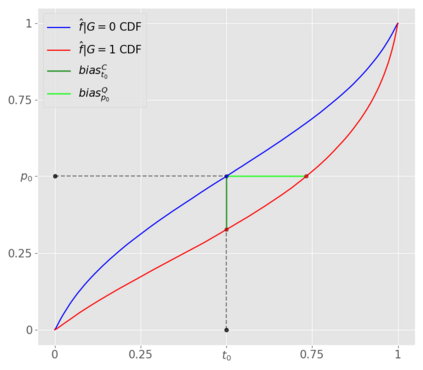

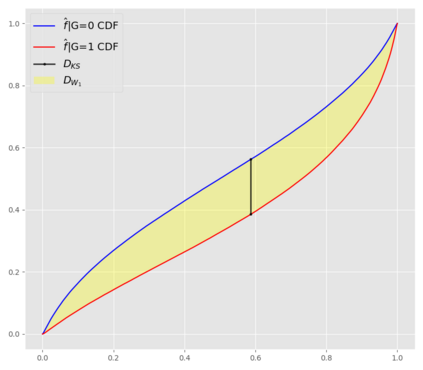

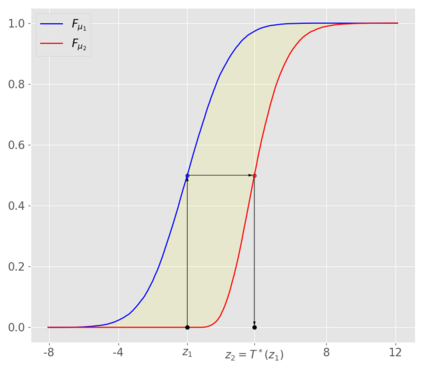

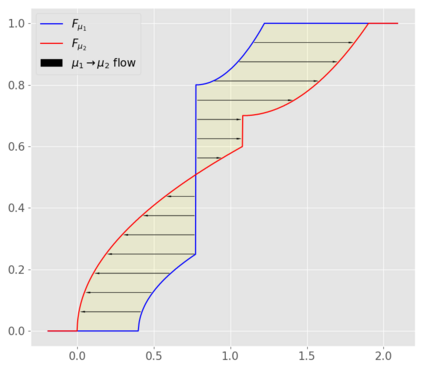

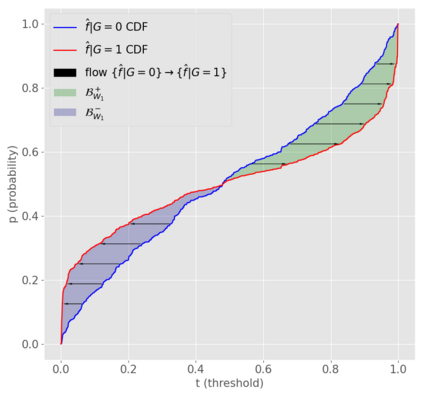

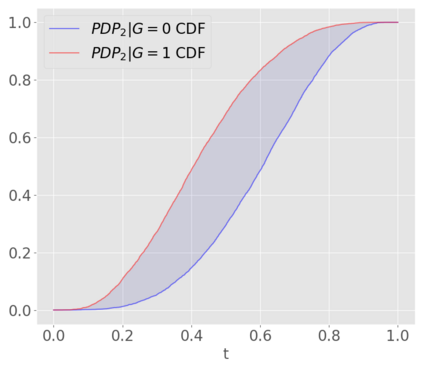

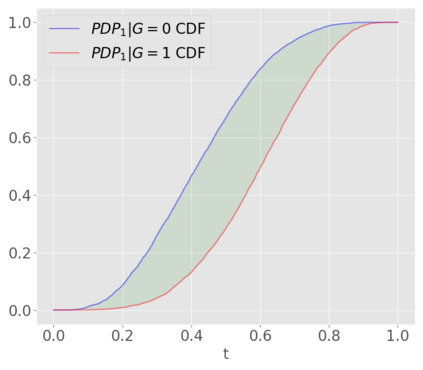

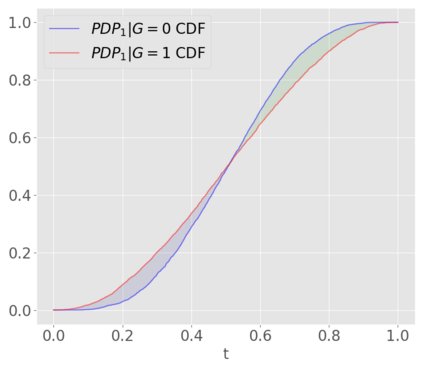

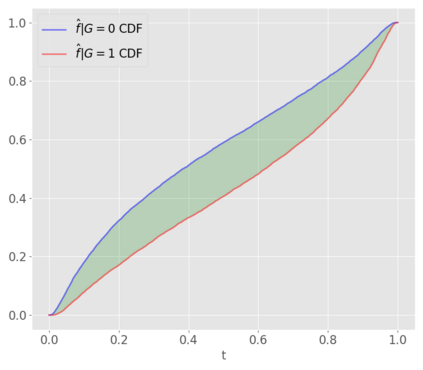

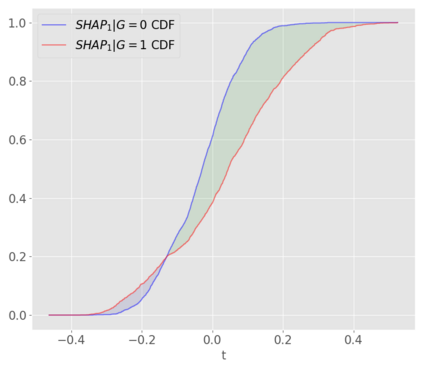

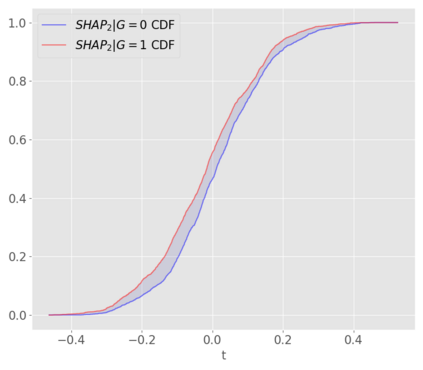

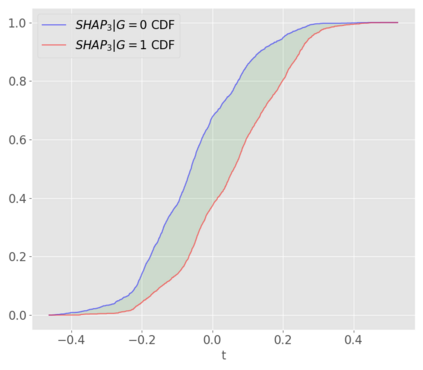

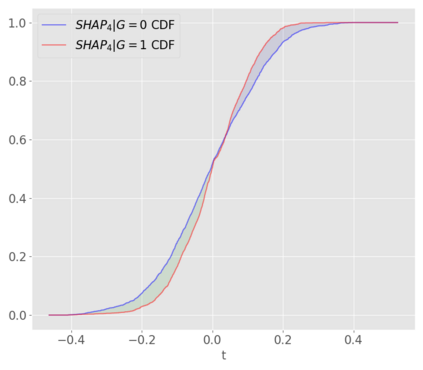

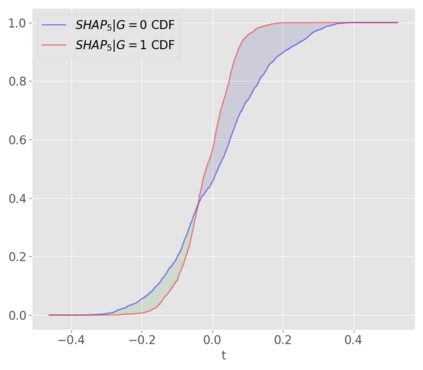

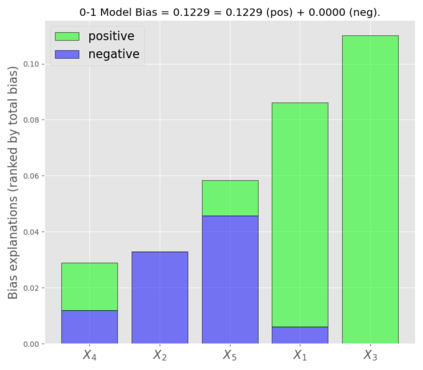

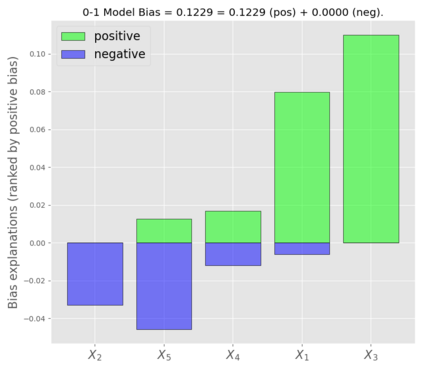

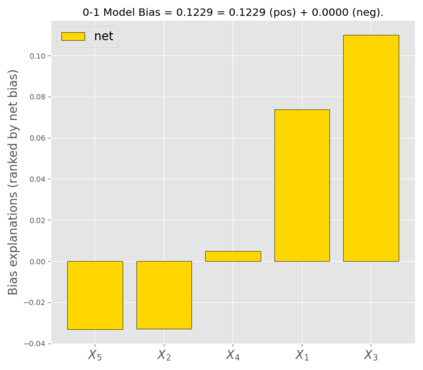

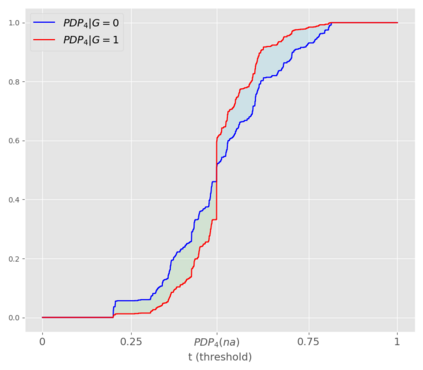

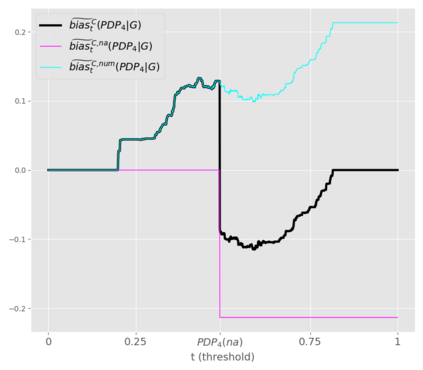

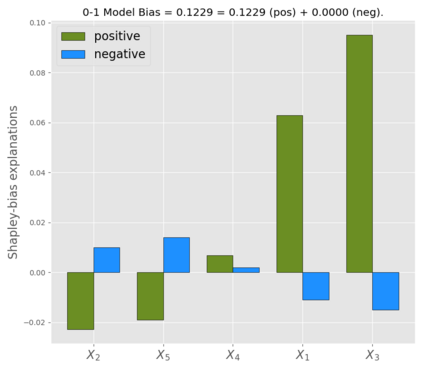

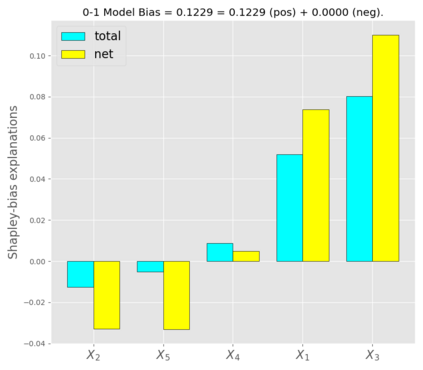

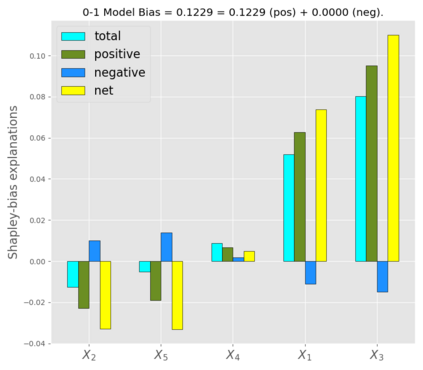

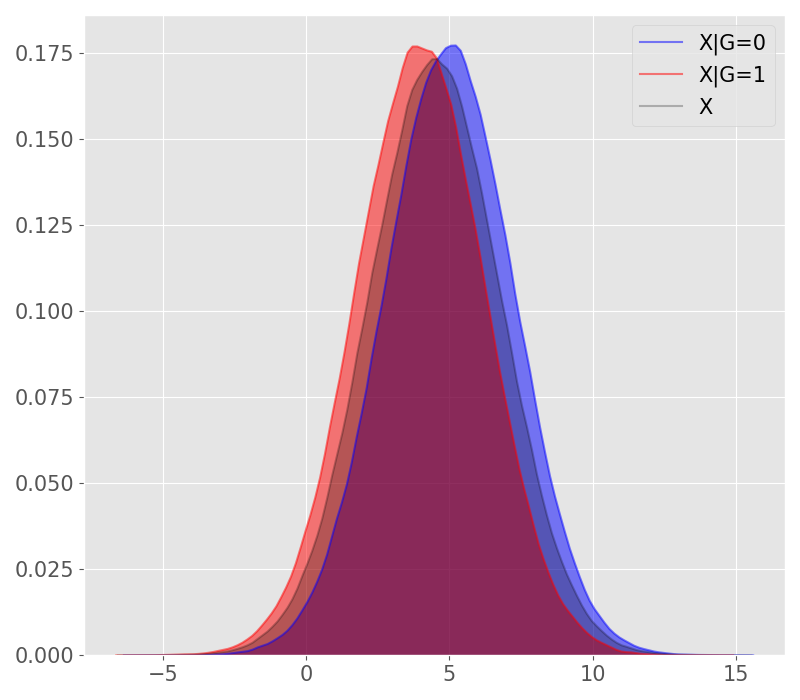

In this article, we introduce a fairness interpretability framework for measuring and explaining bias in classification and regression models at the level of a distribution. Motivated by the ideas of Dwork et al. (2012), we measure the model bias across sub-population distributions using the Wasserstein metric. The transport-theoretic characterization of the Wasserstein metric allows us to take into account the sign of the bias across the model distribution, which in turn yields a decomposition of the model bias into positive and negative components. To understand how predictors contribute to the model bias, we introduce and theoretically characterize bias predictor attributions, called bias explanations, and investigate their stability. We also provide a formulation of the bias explanations that takes into account the impact of missing values. In addition, motivated by the works of Štrumbelj and Kononenko (2014) and Lundberg and Lee (2017), we construct additive bias explanations by employing cooperative game theory and investigate their properties.
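To make the idea of a signed, distribution-level bias concrete, the following is a minimal sketch rather than the authors' implementation. It relies on the standard one-dimensional identity $W_1 = \int_0^1 |F_a^{-1}(p) - F_b^{-1}(p)|\,dp$ and assumes that the positive and negative components are obtained by integrating the positive and negative parts of the quantile difference separately. The function names `empirical_quantile` and `signed_wasserstein_bias`, the parameter `n_grid`, and the sign convention (positive where group `a` scores higher) are all hypothetical choices made for illustration.

```python
import numpy as np


def empirical_quantile(sample, p):
    """Left-continuous empirical quantile function of a 1-D sample at levels p."""
    s = np.sort(np.asarray(sample, dtype=float))
    idx = np.ceil(np.asarray(p) * s.size).astype(int) - 1
    return s[np.clip(idx, 0, s.size - 1)]


def signed_wasserstein_bias(scores_a, scores_b, n_grid=1000):
    """Approximate W1 between two score samples and split it by sign.

    Returns (total, positive, negative), where total = positive + negative.
    Assumption: "positive" collects quantile levels where group a scores
    higher than group b; the source abstract does not fix this convention.
    """
    # Midpoint grid on (0, 1) for a Riemann-sum approximation of the integral.
    p = (np.arange(n_grid) + 0.5) / n_grid
    diff = empirical_quantile(scores_a, p) - empirical_quantile(scores_b, p)
    positive = float(np.mean(np.clip(diff, 0.0, None)))
    negative = float(np.mean(np.clip(-diff, 0.0, None)))
    return positive + negative, positive, negative


if __name__ == "__main__":
    # Synthetic model scores for two sub-populations (illustrative data only).
    rng = np.random.default_rng(0)
    scores_a = rng.beta(2.0, 5.0, size=5000)
    scores_b = rng.beta(2.5, 4.0, size=3000)
    total, pos, neg = signed_wasserstein_bias(scores_a, scores_b)
    print(f"total={total:.4f} positive={pos:.4f} negative={neg:.4f}")
```

Working with quantile functions rather than a single threshold is what lets the two components differ: one group can be favored in the low-score region and disfavored in the high-score region, and a comparison of means alone would let those effects cancel.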
