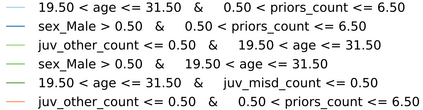

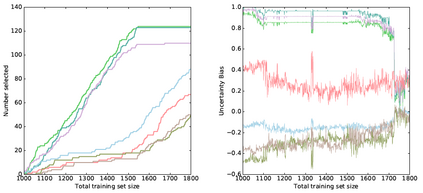

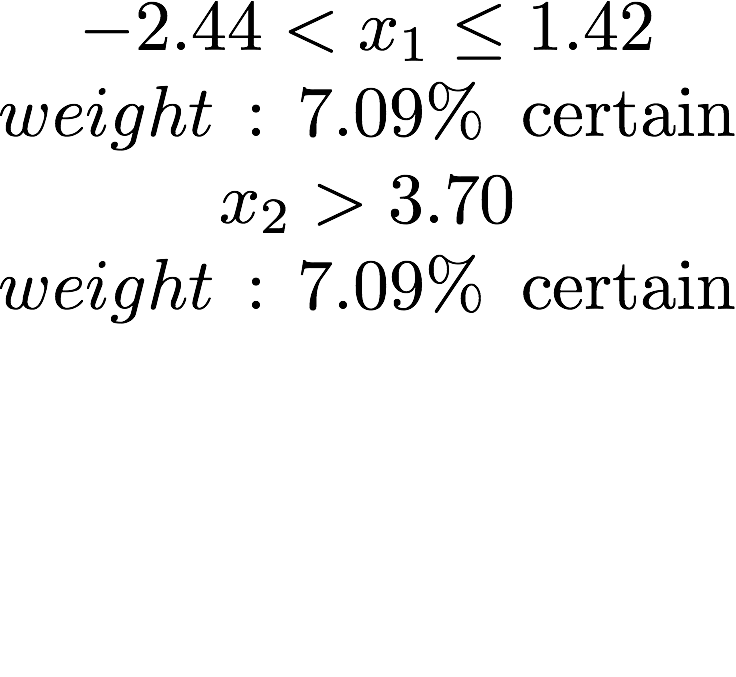

Active learning has long been a topic of study in machine learning. However, as increasingly complex and opaque models have become standard practice, the active learning process has become more opaque as well. There has been little investigation into interpreting the specific trends and patterns that an active learning strategy may be exploring. This work extends the Local Interpretable Model-agnostic Explanations (LIME) framework to provide explanations for active learning recommendations. We demonstrate how LIME can be used to generate locally faithful explanations for an active learning strategy, and how these explanations can be used to understand how different models and datasets explore a problem space over time. To quantify per-subgroup differences in how an active learning strategy queries spatial regions, we introduce a notion of uncertainty bias, based on disparate impact, that measures the discrepancy in a model's prediction confidence between one subgroup and another. Using the uncertainty bias measure, we show that our query explanations accurately reflect the subgroup focus of the active learning queries. This yields an interpretable account of what is being learned as points with similar sources of uncertainty have their uncertainty bias resolved. Finally, we demonstrate that this technique can track uncertainty bias over either user-defined clusters or clusters generated automatically from the source of uncertainty.
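The abstract does not spell out the uncertainty bias formula, so the sketch below is an illustration under stated assumptions, not the paper's method. By analogy with disparate impact (a ratio of positive-outcome rates between two groups), it assumes a point counts as "uncertain" when the model's top-class probability falls below a hypothetical `threshold`, and defines uncertainty bias as the ratio of uncertain rates in subgroup 0 versus subgroup 1. It also includes a simplified LIME-style local surrogate, `explain_query`, that perturbs a query point with Gaussian noise, weights the samples with an exponential kernel, and fits a weighted linear model to an uncertainty score. The function names, noise scale, and kernel are illustrative choices and are not the `lime` package's API.

```python
import numpy as np


def uncertainty_bias(proba, group, threshold=0.6):
    """Ratio of 'uncertain' rates between subgroup 0 and subgroup 1.

    Assumption (hypothetical): a point is uncertain when its top-class
    probability is below `threshold`. The ratio mirrors disparate impact,
    which compares positive-outcome rates across groups.
    """
    uncertain = proba.max(axis=1) < threshold
    rate_0 = uncertain[group == 0].mean()
    rate_1 = uncertain[group == 1].mean()
    if rate_1 == 0:
        # Avoid division by zero: equal (zero) rates count as no bias.
        return 1.0 if rate_0 == 0 else float("inf")
    return float(rate_0 / rate_1)


def explain_query(uncertainty_fn, x, scale=0.1, n_samples=500,
                  kernel_width=None, seed=0):
    """LIME-style local linear explanation of an uncertainty score at x.

    Returns one weight per feature; a larger magnitude means that feature
    drives the model's uncertainty more strongly near x.
    """
    rng = np.random.default_rng(seed)
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(x.size)  # modeled on LIME's tabular default
    # Sample perturbations around the query point.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    y = uncertainty_fn(Z)
    # Exponential kernel: samples closer to x get more weight.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-dist**2 / kernel_width**2)
    # Weighted least squares with an intercept column.
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # drop the intercept, keep per-feature weights


if __name__ == "__main__":
    proba = np.array([[0.55, 0.45], [0.90, 0.10], [0.52, 0.48],
                      [0.95, 0.05], [0.58, 0.42], [0.80, 0.20]])
    group = np.array([0, 0, 0, 1, 1, 1])
    print(uncertainty_bias(proba, group))  # → 2.0
    # A linear "uncertainty" surface is recovered by the local surrogate.
    print(explain_query(lambda Z: 3 * Z[:, 0] - 2 * Z[:, 1],
                        np.array([0.5, -1.0])))
```

In the toy data, two of three subgroup-0 points and one of three subgroup-1 points fall below the threshold, so the bias is 2.0: subgroup 0 is uncertain twice as often. A value near 1 would indicate that both subgroups are uncertain at similar rates, which is the state the abstract describes as the bias being "resolved."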
