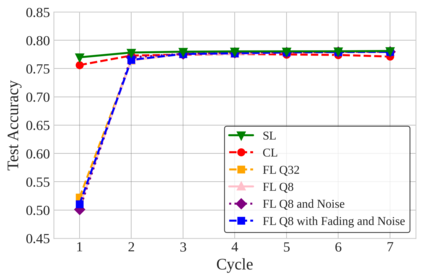

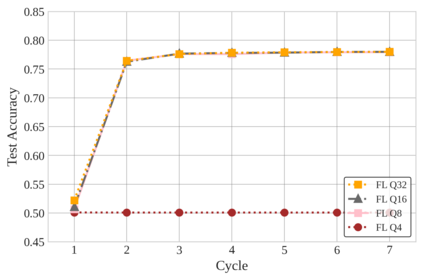

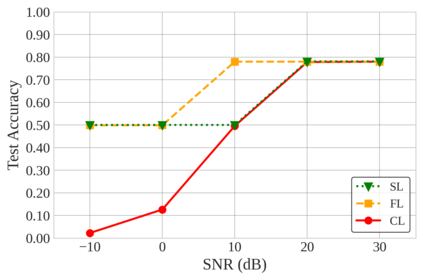

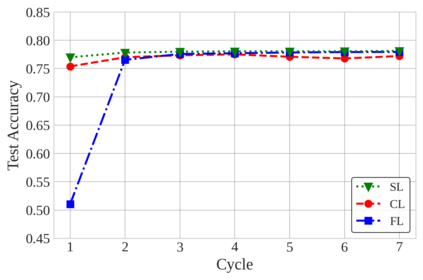

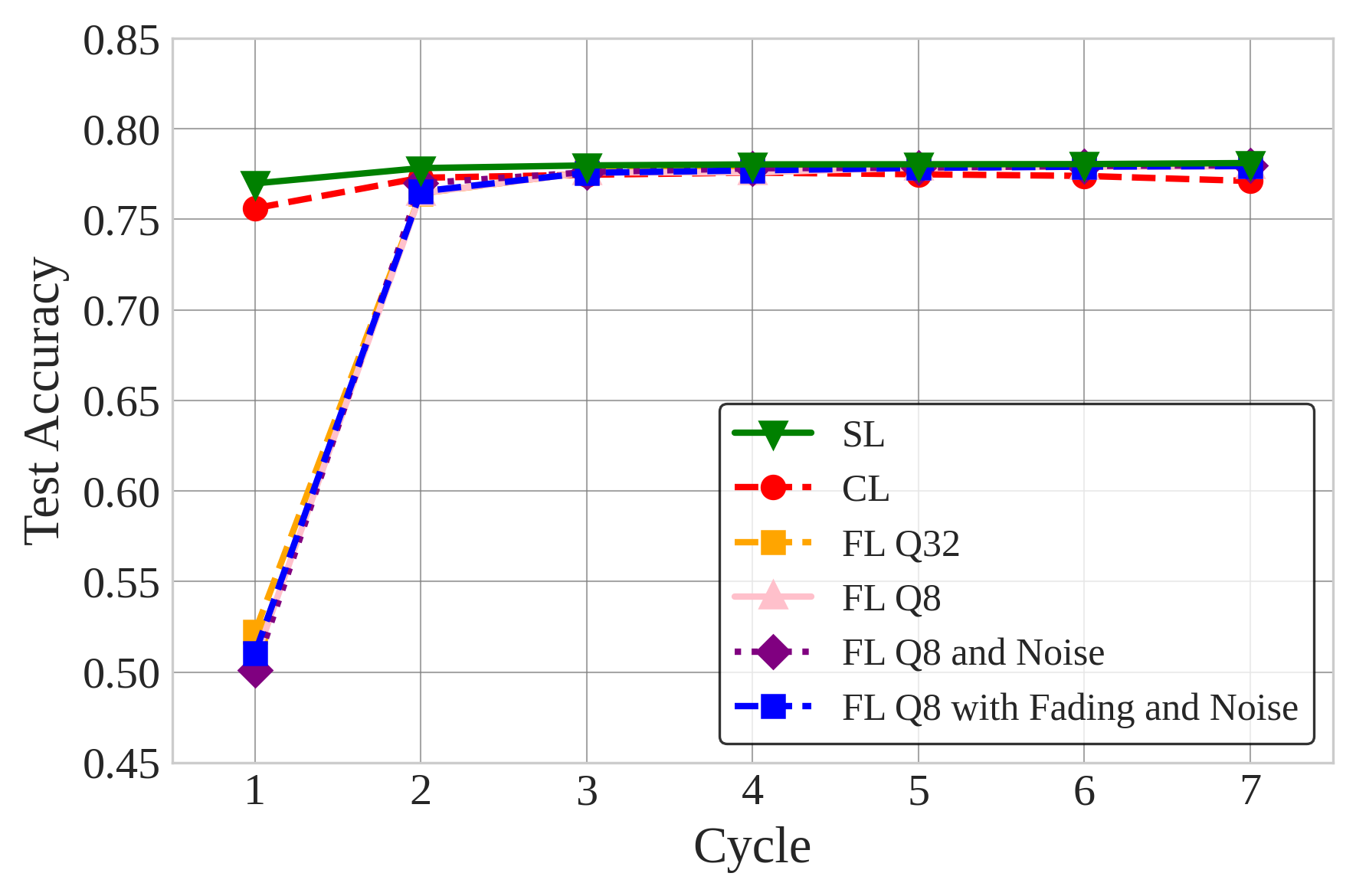

Natural Language Processing (NLP) tasks, such as semantic sentiment analysis and text synthesis, often raise privacy concerns and demand significant on-device computational resources. Centralized Learning (CL) on the edge is an energy-efficient alternative, but it requires collecting raw data, which compromises user privacy. Federated Learning (FL) improves privacy but imposes high computational energy demands on resource-constrained devices. We introduce Split Learning (SL) as an energy-efficient, privacy-preserving Tiny Machine Learning (TinyML) framework and compare it with FL and CL in the presence of Rayleigh fading and additive noise. Our results show that SL significantly reduces computational power and CO₂ emissions while enhancing privacy, as evidenced by a reconstruction error four times that of FL and nearly eighteen times that of CL. FL, in contrast, offers a balanced trade-off between privacy and efficiency. This study provides insights into deploying privacy-preserving, energy-efficient NLP models on edge devices.
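
To make the SL setting concrete, the following is a minimal sketch of a split forward pass in which the client's intermediate activations cross a Rayleigh fading channel with additive Gaussian noise before reaching the server. It is illustrative only and is not the configuration evaluated in this work: the single dense layer on each side, the block-fading model (one gain per transmission), real-valued signalling, perfect channel knowledge at the receiver, and all function names (`dense_relu`, `rayleigh_gain`, `transmit`, `split_forward`) are assumptions introduced for the example.

```python
import math
import random


def dense_relu(x, weights):
    """One dense layer followed by ReLU (hypothetical stand-in for a model half)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def rayleigh_gain(rng):
    """Rayleigh-distributed channel gain with unit average power, E[h^2] = 1."""
    return math.hypot(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def transmit(activations, noise_std, rng, min_gain=1e-3):
    """Send activations over a block-fading channel with additive Gaussian noise.

    Assumes the receiver knows the gain h and equalizes by dividing by it.
    min_gain is an illustrative floor that avoids dividing by a near-zero gain.
    """
    h = max(rayleigh_gain(rng), min_gain)
    received = [h * a + rng.gauss(0.0, noise_std) for a in activations]
    return [r / h for r in received]


def split_forward(x, client_weights, server_weights, noise_std, rng):
    """Client computes the first layer; only its activations leave the device."""
    smashed = dense_relu(x, client_weights)       # stays on the edge device
    noisy = transmit(smashed, noise_std, rng)     # wireless uplink
    return dense_relu(noisy, server_weights)      # server-side continuation


if __name__ == "__main__":
    rng = random.Random(0)
    x = [1.0, -2.0, 0.5]
    client_w = [[0.5, 0.1, -0.3], [0.2, -0.4, 0.7]]
    server_w = [[1.0, 1.0]]
    print(split_forward(x, client_w, server_w, noise_std=0.1, rng=rng))
```

In this sketch the raw input `x` never leaves the client; the server only sees channel-distorted activations, which is the property behind the privacy comparison with CL and FL. After equalization the effective noise scales with `1 / h`, so deep fades degrade the received activations more strongly.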
