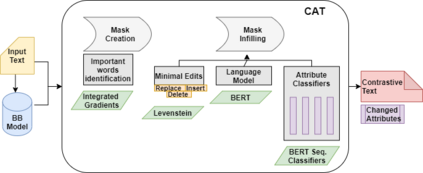

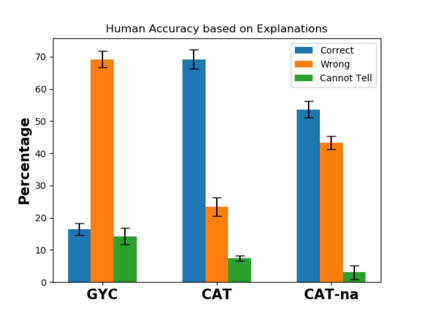

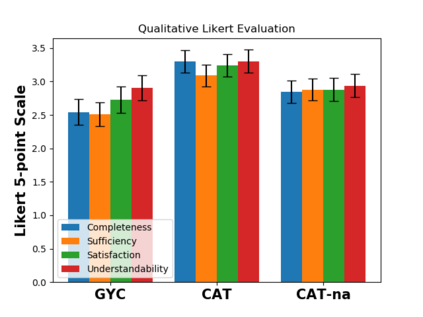

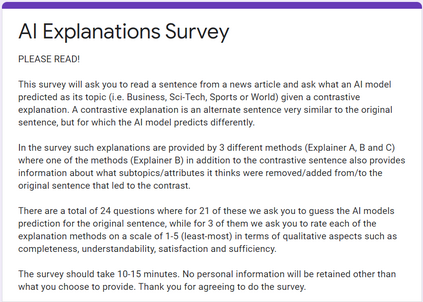

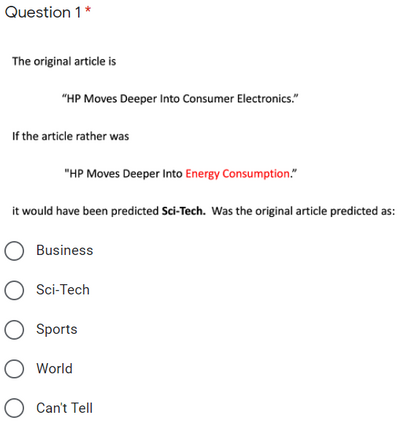

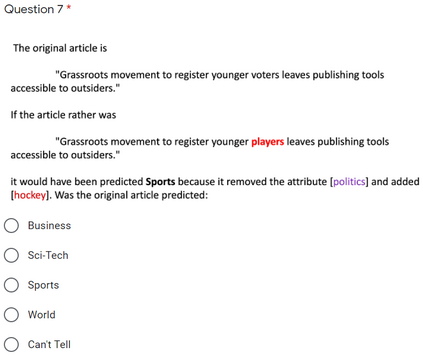

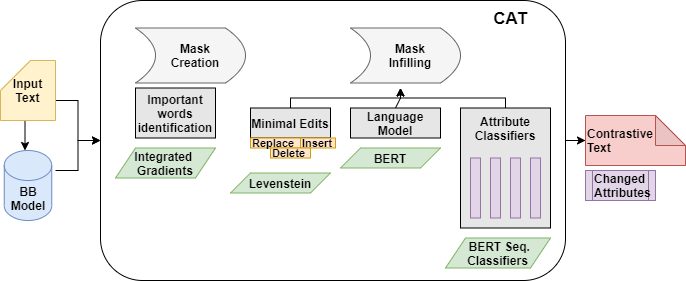

Contrastive explanations for understanding the behavior of black box models have gained a lot of attention recently because they offer the potential for recourse. In this paper, we propose a method, Contrastive Attributed explanations for Text (CAT), that provides contrastive explanations for natural language text data with a novel twist: we build and exploit attribute classifiers, which leads to more semantically meaningful explanations. To ensure that the generated contrastive text has the fewest possible edits with respect to the original text, while also being fluent and close to a human-generated contrastive, we use a minimal perturbation approach regularized by a BERT language model and by attribute classifiers trained on available attributes. We show through qualitative examples and a user study that our method not only conveys more insight because of these attributes, but also produces better-quality contrastive text. Quantitatively, we show that our method is more efficient than other state-of-the-art methods and also scores higher on benchmark metrics such as flip rate, (normalized) Levenshtein distance, fluency, and content preservation.
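Two of the benchmark metrics named above, flip rate and normalized Levenshtein distance, can be illustrated with a minimal sketch. It is not the authors' evaluation code. It assumes whitespace tokenization, word-level edits, and normalization by the longer of the two token sequences, and the paper may define these details differently. The function names and the `predict` callable are hypothetical stand-ins for whatever black box classifier is being explained.

```python
from typing import Callable, Sequence


def levenshtein(a: Sequence[str], b: Sequence[str]) -> int:
    """Edit distance (insert, delete, substitute) between two token sequences."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, tok_a in enumerate(a, start=1):
        curr = [i]
        for j, tok_b in enumerate(b, start=1):
            cost = 0 if tok_a == tok_b else 1
            curr.append(min(prev[j] + 1,          # delete tok_a
                            curr[j - 1] + 1,      # insert tok_b
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def normalized_levenshtein(original: str, contrastive: str) -> float:
    """Word-level edit distance scaled to [0, 1].

    Assumption: whitespace tokens, normalized by the longer sequence.
    """
    a, b = original.split(), contrastive.split()
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0


def flip_rate(originals: Sequence[str],
              contrastives: Sequence[str],
              predict: Callable[[str], str]) -> float:
    """Fraction of pairs whose predicted label changes after the edit."""
    if not originals:
        return 0.0
    flips = sum(predict(o) != predict(c)
                for o, c in zip(originals, contrastives))
    return flips / len(originals)
```

Under these assumptions, a contrastive that changes one word in a four-word sentence has a normalized distance of 0.25. A lower distance with a higher flip rate means the method changes the classifier's prediction with fewer edits.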