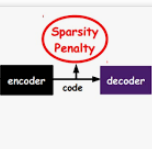

Motivated by time series forecasting, we study Online Linear Optimization (OLO) under the coupling of two problem structures: the domain is unbounded, and the performance of an algorithm is measured by its dynamic regret. Handling either of them requires the regret bound to depend on certain complexity measure of the comparator sequence -- specifically, the comparator norm in unconstrained OLO, and the path length in dynamic regret. In contrast to a recent work (Jacobsen & Cutkosky, 2022) that adapts to the combination of these two complexity measures, we propose an alternative complexity measure by recasting the problem into sparse coding. Adaptivity can be achieved by a simple modular framework, which naturally exploits more intricate prior knowledge of the environment. Along the way, we also present a new gradient adaptive algorithm for static unconstrained OLO, designed using novel continuous time machinery. This could be of independent interest.

翻译:稀疏编码实现无限制动态遗憾

Translated abstract:

本文针对时间序列预测动态遗憾问题,研究了在两个问题结构耦合的情况下的在线线性优化(OLO):领域不受限制,且算法的性能通过其动态遗憾来衡量。解决这两者之一需要使遗憾界限取决于比较器序列的某些复杂度量,具体而言是无约束OLO中的比较器范数和动态遗憾中的路径长度。与最近的一项工作(Jacobsen&Cukosky,2022)不同,该工作适应了这两种复杂度量的组合,我们提出了一种通过重新构造问题为稀疏编码的复杂度量。通过简单的模块化框架,可以实现适应性,自然地利用环境中更复杂的先验知识。同时,我们还提出了一种新的静态无约束OLO梯度自适应算法,使用了新颖的连续时间机制。这可能具有独立的兴趣。