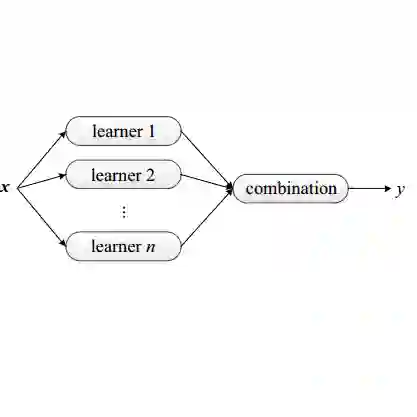

Static analysis (SA) tools are used to identify potential weaknesses in code and fix them early, while the code is still being developed. In highly complex legacy codebases, these rule-based tools typically report many false warnings alongside the genuine ones. Although SA tools uncover many hidden bugs, those bugs get lost in the volume of false warnings. Developers spend many hours of effort identifying the true warnings; besides hurting developer productivity, this also causes true bugs to be missed. To address this problem, we propose a machine learning (ML)-based process that uses source code, historical commit data, and classifier ensembles to prioritize the true warnings in a given list of warnings. The tool is integrated into the development workflow to filter out false warnings and prioritize actual bugs. We evaluated our approach on networking code written in C, using a large pool of static analysis warnings reported by the tools. Developers address these warnings from time to time and label them as authentic bugs or false alerts. The ML model is trained with full supervision on the code features. Our results confirm that applying deep learning to traditional static analysis reports is a promising approach for drastically reducing the false positive rate.
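To make the idea of ensemble-based prioritization concrete, the following is a minimal sketch, not the method evaluated here. It assumes each ensemble member returns a probability that a warning is a true bug, averages those probabilities (soft voting), drops warnings below a threshold, and ranks the rest. The names `SAWarning`, `churn_scorer`, `checker_scorer`, and `prioritize`, as well as the features `recent_commits` and `checker_precision`, are hypothetical; the hand-written scorers stand in for trained classifiers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SAWarning:
    """One static analysis warning with hypothetical numeric features."""
    warning_id: str
    features: Dict[str, float]


# An ensemble member maps a warning to an estimated P(true bug) in [0, 1].
Scorer = Callable[[SAWarning], float]


def churn_scorer(w: SAWarning) -> float:
    # Assumption: files touched by more recent commits are more likely
    # to contain real bugs. Capped at 1.0.
    return min(w.features.get("recent_commits", 0.0) / 10.0, 1.0)


def checker_scorer(w: SAWarning) -> float:
    # Assumption: historical precision of the checker that raised the warning.
    return w.features.get("checker_precision", 0.5)


def ensemble_score(w: SAWarning, scorers: List[Scorer]) -> float:
    # Soft voting: average the members' probabilities.
    return sum(s(w) for s in scorers) / len(scorers)


def prioritize(
    warnings: List[SAWarning],
    scorers: List[Scorer],
    threshold: float = 0.0,
) -> List[Tuple[float, SAWarning]]:
    # Score every warning, filter likely false positives, rank the rest.
    scored = [(ensemble_score(w, scorers), w) for w in warnings]
    kept = [(p, w) for p, w in scored if p >= threshold]
    return sorted(kept, key=lambda pw: pw[0], reverse=True)


warnings = [
    SAWarning("W1", {"recent_commits": 8, "checker_precision": 0.9}),
    SAWarning("W2", {"recent_commits": 1, "checker_precision": 0.2}),
    SAWarning("W3", {"recent_commits": 4, "checker_precision": 0.6}),
]
ranked = prioritize(warnings, [churn_scorer, checker_scorer], threshold=0.3)
for score, w in ranked:
    print(f"{w.warning_id}: {score:.2f}")
```

In a real pipeline the scorers would be replaced by classifiers trained on developer-labelled warnings, and the threshold would be tuned to trade missed bugs against review effort.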
