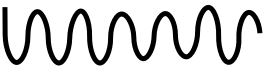

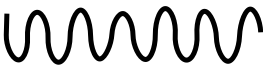

Multimodal abstractive summarization with sentence output generates a textual summary from a multimodal triad of sentence, image, and audio, and has been shown to improve user satisfaction and convenience. Existing approaches mainly focus on enhancing multimodal fusion while ignoring the misalignment among the inputs and the differing importance of segments within a feature, which results in superfluous multimodal interaction. To alleviate these problems, we propose a Multimodal Hierarchical Selective Transformer (mhsf) model that considers reciprocal relationships among modalities (via a low-level cross-modal interaction module) and the respective characteristics within a single fused feature (via a high-level selective routing module). In detail, it first aligns the inputs from different sources and then adopts a divide-and-conquer strategy to highlight or de-emphasize parts of the multimodal fusion representation. This can be seen as a sparse feed-forward model: different groups of parameters are activated for different segments of the feature. We evaluate the generality of the proposed mhsf model under both pre-training plus fine-tuning and training-from-scratch strategies. Experimental results on MSMO demonstrate that our model outperforms SOTA baselines in terms of ROUGE, relevance scores, and human evaluation.
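The idea of activating different groups of parameters for different feature segments can be illustrated with a minimal sketch. This is not the mhsf implementation: it assumes a simple top-1, mixture-of-experts-style gate, and every name in it (`SelectiveRouter`, `seg_dim`, `num_experts`) is hypothetical and chosen only for illustration. The weights are random, so the sketch shows the routing mechanics rather than any learned behavior.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def make_matrix(rows, cols, rng):
    """Random matrix standing in for learned weights (assumption)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class SelectiveRouter:
    """Hypothetical top-1 router: one gate plus several parameter groups."""

    def __init__(self, seg_dim, num_experts, seed=0):
        rng = random.Random(seed)
        self.seg_dim = seg_dim
        # The gate scores each segment against every parameter group.
        self.gate = make_matrix(num_experts, seg_dim, rng)
        # Each "expert" is one group of parameters (a square matrix here).
        self.experts = [make_matrix(seg_dim, seg_dim, rng)
                        for _ in range(num_experts)]

    def forward(self, fused):
        if len(fused) % self.seg_dim != 0:
            raise ValueError("feature length must be a multiple of seg_dim")
        # Divide: split the fused feature into equal-sized segments.
        segments = [fused[i:i + self.seg_dim]
                    for i in range(0, len(fused), self.seg_dim)]
        output, chosen = [], []
        for seg in segments:
            probs = softmax(matvec(self.gate, seg))
            # Conquer: only the highest-scoring parameter group is applied.
            k = max(range(len(probs)), key=probs.__getitem__)
            # Scaling by the gate probability highlights or de-emphasizes
            # the segment in the output representation.
            output.extend(probs[k] * y for y in matvec(self.experts[k], seg))
            chosen.append(k)
        return output, chosen


router = SelectiveRouter(seg_dim=4, num_experts=3)
fused_feature = [0.1 * i for i in range(8)]  # toy fused representation
routed, chosen_experts = router.forward(fused_feature)
print(len(routed), chosen_experts)
```

Because only one parameter group runs per segment, the cost per segment stays constant as more groups are added, which is the usual motivation for sparse routing of this kind.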
