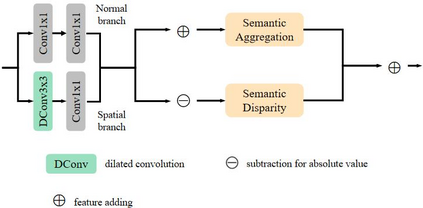

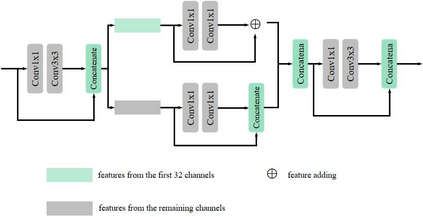

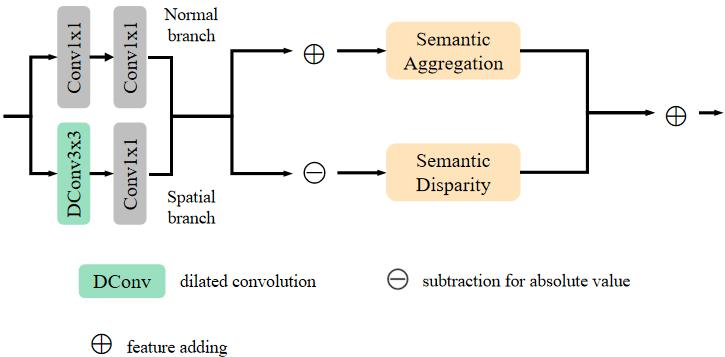

Existing deep learning based methods have effectively improved the performance of aerial scene classification. However, because of their large parameter counts and computational cost, these methods are difficult to apply to many real-time remote sensing applications, such as on-board data perception on drones and satellites. In this paper, we address this problem by developing a lightweight ConvNet named the multi-stage duplex fusion network (MSDF-Net). The key idea is to use as few parameters as possible while obtaining as strong a scene representation capability as possible. To this end, a residual-dense duplex fusion strategy is developed to enhance feature propagation while re-using parameters as much as possible, and it is realized by our duplex fusion block (DFblock). Specifically, MSDF-Net consists of multiple stages built from DFblocks. Moreover, a duplex semantic aggregation (DSA) module is developed to mine remote sensing scene information from the extracted convolutional features; it also contains two parallel branches for semantic description. Extensive experiments on three widely used aerial scene classification benchmarks show that MSDF-Net achieves performance competitive with recent state-of-the-art methods while reducing the number of parameters by up to 80%. In particular, it achieves an accuracy of 92.96% on AID with only 0.49M parameters.
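
To make the parameter re-use idea concrete, the following is a minimal sketch of how sharing one convolution between a residual branch and a dense branch affects the parameter count. The abstract does not specify the layer layout of the DFblock, so the block below is a hypothetical stand-in: the function names, the 3x3 and 1x1 kernel sizes, the 1x1 fusion layer, and the channel width of 32 are all assumptions made for illustration. Only the per-layer counting formula for a standard convolution is a general fact.

```python
def conv2d_params(c_in: int, c_out: int, kernel_size: int, bias: bool = True) -> int:
    """Learnable parameters of a standard 2-D convolution layer."""
    weights = kernel_size * kernel_size * c_in * c_out
    return weights + (c_out if bias else 0)


def duplex_block_params(channels: int, share_conv: bool = True) -> int:
    """Parameter count of a HYPOTHETICAL two-branch block (not the paper's DFblock).

    Assumed layout:
      residual branch: x + f(x)          (addition adds no parameters)
      dense branch:    concat(x, f(x))   (concatenation adds no parameters)
      fusion:          1x1 conv, 2 * channels -> channels
    If share_conv is True, both branches reuse a single 3x3 conv f;
    otherwise each branch has its own 3x3 conv.
    """
    conv3x3 = conv2d_params(channels, channels, kernel_size=3)
    fusion = conv2d_params(2 * channels, channels, kernel_size=1)
    n_convs = 1 if share_conv else 2
    return n_convs * conv3x3 + fusion


if __name__ == "__main__":
    channels = 32  # hypothetical width, not taken from the paper
    shared = duplex_block_params(channels, share_conv=True)
    separate = duplex_block_params(channels, share_conv=False)
    print(f"shared 3x3 conv:   {shared} parameters")
    print(f"separate 3x3 conv: {separate} parameters")
    print(f"reduction:         {1 - shared / separate:.1%}")
```

The figures this prints are illustrative only and are not comparable with the 0.49M parameters reported for MSDF-Net. The point of the sketch is that residual addition and dense concatenation are themselves parameter-free, so the cost of a two-branch block is governed by how many convolutions the branches share.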
