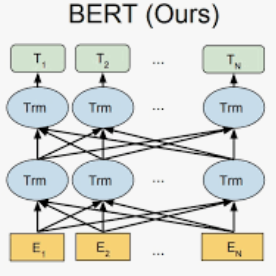

Recent papers have shown that large pre-trained language models (LLMs) such as BERT and GPT-2 can be fine-tuned on private data to achieve performance comparable to non-private models on many downstream Natural Language Processing (NLP) tasks while simultaneously guaranteeing differential privacy. However, the inference cost of these models, which consist of hundreds of millions of parameters, can be prohibitively large. Hence, in practice, LLMs are often compressed before they are deployed in specific applications. In this paper, we initiate the study of differentially private model compression and propose frameworks for achieving 50% sparsity levels while maintaining nearly full performance. We demonstrate these ideas on standard GLUE benchmarks using BERT models, setting benchmarks for future research on this topic.
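
To make the notion of a "50% sparsity level" concrete, the following is a minimal sketch of unstructured magnitude pruning on a toy weight vector. It is an illustration only, not the frameworks proposed in the paper: it includes no differential privacy mechanism, and the helper names `magnitude_prune` and `sparsity_level` are hypothetical.

```python
import random


def magnitude_prune(weights, sparsity=0.5):
    """Zero out the `sparsity` fraction of entries with the smallest magnitude.

    Illustrative helper (an assumption, not the paper's method).
    """
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending absolute value; the first n_prune are dropped.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


def sparsity_level(weights):
    """Fraction of entries that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


if __name__ == "__main__":
    random.seed(0)
    # Toy stand-in for one layer's weights; a real BERT layer is far larger.
    layer = [random.gauss(0.0, 1.0) for _ in range(1000)]
    pruned = magnitude_prune(layer, sparsity=0.5)
    print(f"sparsity after pruning: {sparsity_level(pruned):.2f}")
```

A model at 50% sparsity has half of its weights set to zero in this sense. Doing so while preserving a differential privacy guarantee and nearly full task performance is the problem the paper studies.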
