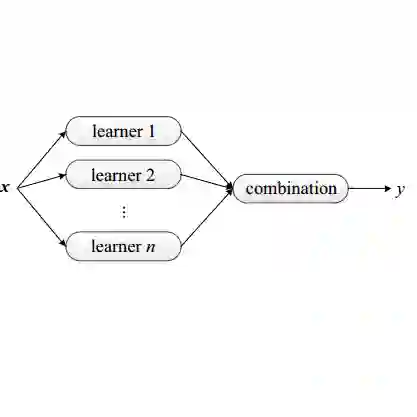

Detailed phenotype information is fundamental to accurate diagnosis and risk estimation of diseases. As a rich source of phenotype information, electronic health records (EHRs) promise to support diagnostic variant interpretation. However, extracting phenotypes accurately and efficiently from heterogeneous EHR data remains a challenge. In this work, we present PheME, an Ensemble framework using Multi-modality data of structured EHRs and unstructured clinical notes for accurate Phenotype prediction. First, we employ multiple deep neural networks to learn reliable representations from the sparse structured EHR data and the redundant clinical notes. A multi-modal model then aligns the features from both modalities onto the same latent space to predict phenotypes. Second, we leverage ensemble learning to combine the outputs of the single-modal and multi-modal models to improve phenotype predictions. We choose seven diseases to evaluate the phenotyping performance of the proposed framework. Experimental results show that using multi-modal data significantly improves phenotype prediction for all seven diseases, and that the proposed ensemble learning framework further boosts performance.
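The ensemble step described above can be illustrated with a minimal sketch. The abstract does not say which combination rule PheME uses, so the example below assumes simple soft voting (a weighted average of predicted probabilities). The function name `soft_vote`, the three per-model probability lists, and the 0.5 decision threshold are all hypothetical and chosen only for illustration.

```python
from typing import List, Optional, Sequence


def soft_vote(
    model_probs: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> List[float]:
    """Combine per-model phenotype probabilities by weighted averaging.

    model_probs[m][i] is model m's predicted probability that patient i
    has the phenotype. Soft voting is an assumed combination rule here,
    not necessarily the one used in PheME.
    """
    n_models = len(model_probs)
    if n_models == 0:
        raise ValueError("need at least one model")
    if weights is None:
        weights = [1.0] * n_models  # equal weight for every model
    if len(weights) != n_models:
        raise ValueError("one weight per model is required")

    n_patients = len(model_probs[0])
    if any(len(p) != n_patients for p in model_probs):
        raise ValueError("all models must score the same patients")

    total = float(sum(weights))
    return [
        sum(w * p[i] for w, p in zip(weights, model_probs)) / total
        for i in range(n_patients)
    ]


# Hypothetical probabilities for three patients from three models:
# a structured-EHR model, a clinical-notes model, and a multi-modal model.
p_structured = [0.20, 0.70, 0.55]
p_notes = [0.10, 0.90, 0.40]
p_multimodal = [0.30, 0.80, 0.70]

ensemble = soft_vote([p_structured, p_notes, p_multimodal])

# Assumed decision threshold of 0.5 for a binary phenotype label.
labels = [int(p >= 0.5) for p in ensemble]
```

Passing `weights` lets a stronger model (for example, the multi-modal one) count for more in the average. Such weights would normally be tuned on a validation set rather than fixed by hand.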
