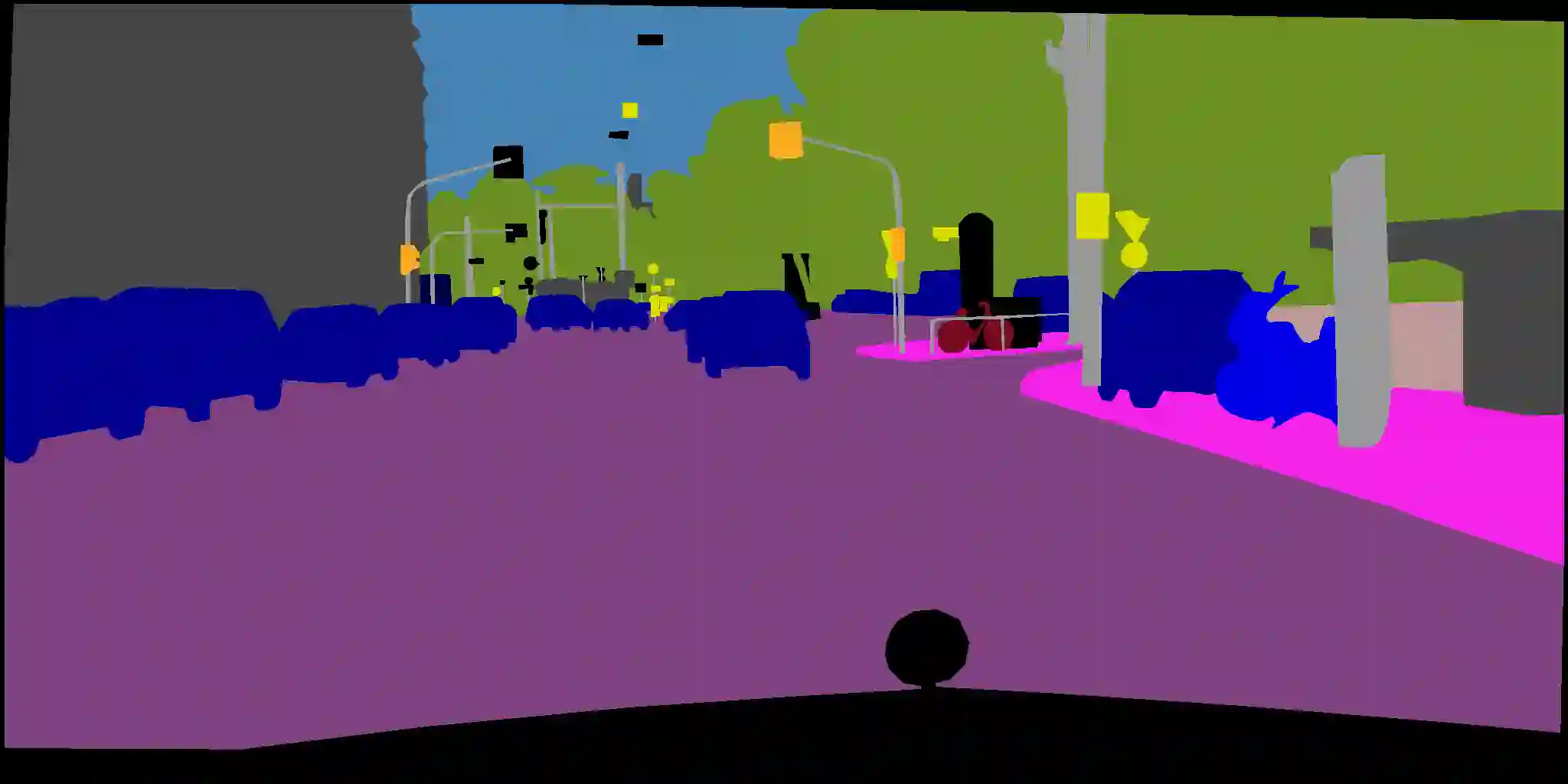

Collecting real-world annotations for training semantic segmentation models is an expensive process. Unsupervised domain adaptation (UDA) tries to solve this problem by studying how more accessible data, such as synthetic data, can be used to train and adapt models to real-world images without requiring their annotations. Recent UDA methods apply self-learning by training with a pixel-wise classification loss using a student and a teacher network. In this paper, we propose adding a consistency regularization term to semi-supervised UDA by modelling the inter-pixel relationship between elements in the networks' outputs. We demonstrate the effectiveness of the proposed consistency regularization term by applying it to the state-of-the-art DAFormer framework, improving mIoU19 on the GTA5 to Cityscapes benchmark by 0.8 percentage points and mIoU16 on the SYNTHIA to Cityscapes benchmark by 1.2 percentage points.
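The abstract does not give the exact form of the inter-pixel term. The sketch below is a minimal illustration under stated assumptions, not the paper's or DAFormer's implementation. It assumes the inter-pixel relationship is captured by a pairwise cosine-similarity (affinity) matrix over per-pixel class distributions, and that consistency means penalising the squared difference between the student's and the teacher's affinity matrices. It also shows an exponential-moving-average (mean-teacher) weight update, a common way of maintaining the teacher in student/teacher self-training. The names `pixel_affinity`, `inter_pixel_consistency_loss` and `ema_update`, and the `alpha=0.999` default, are hypothetical.

```python
import numpy as np


def softmax(logits: np.ndarray, axis: int = 0) -> np.ndarray:
    """Numerically stable softmax along the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def pixel_affinity(logits: np.ndarray) -> np.ndarray:
    """Hypothetical inter-pixel relationship: cosine similarity between the
    class-probability vectors of every pair of pixels.

    logits: array of shape (C, H, W). Returns an (H*W, H*W) matrix.
    """
    probs = softmax(logits, axis=0)             # (C, H, W)
    flat = probs.reshape(probs.shape[0], -1).T  # (N, C), N = H*W pixels
    norms = np.linalg.norm(flat, axis=1, keepdims=True)
    unit = flat / np.maximum(norms, 1e-12)      # L2-normalise each pixel vector
    return unit @ unit.T                        # (N, N) pairwise similarities


def inter_pixel_consistency_loss(student_logits: np.ndarray,
                                 teacher_logits: np.ndarray) -> float:
    """Assumed consistency term: mean squared difference between the student
    and teacher affinity matrices. In a real framework the teacher output
    would be treated as a fixed target (no gradient flows into it)."""
    a_student = pixel_affinity(student_logits)
    a_teacher = pixel_affinity(teacher_logits)
    return float(np.mean((a_student - a_teacher) ** 2))


def ema_update(teacher: dict, student: dict, alpha: float = 0.999) -> dict:
    """Mean-teacher style update: teacher <- alpha * teacher + (1 - alpha) * student."""
    return {k: alpha * teacher[k] + (1.0 - alpha) * student[k] for k in teacher}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # 19 classes, as in the GTA5 to Cityscapes setting; a tiny 4x4 output map.
    student = rng.normal(size=(19, 4, 4))
    teacher = student + 0.1 * rng.normal(size=student.shape)
    print(inter_pixel_consistency_loss(student, teacher))
    print(inter_pixel_consistency_loss(student, student))  # identical outputs -> 0.0
```

In training, such a term would typically be added to the pixel-wise classification loss with a weighting factor. The affinity matrix grows quadratically with the number of pixels, so applying it to full-resolution outputs would in practice require downsampling or sampling a subset of pixels.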
