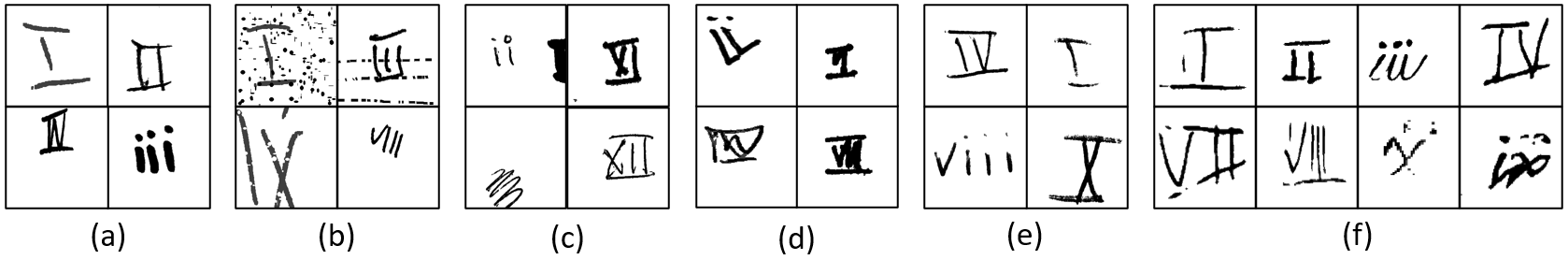

While the availability of large datasets is perceived to be a key requirement for training deep neural networks, it is possible to train such models with relatively little data. However, compensating for the absence of large datasets demands a series of actions to enhance the quality of the existing samples and to generate new ones. This paper summarizes our winning submission to the "Data-Centric AI" competition. We discuss some of the challenges that arise when training with a small dataset, offer a principled approach for systematic data quality enhancement, and propose a GAN-based solution for synthesizing new data points. Our evaluations indicate that the dataset generated by the proposed pipeline offers a 5% accuracy improvement while being significantly smaller than the baseline.
