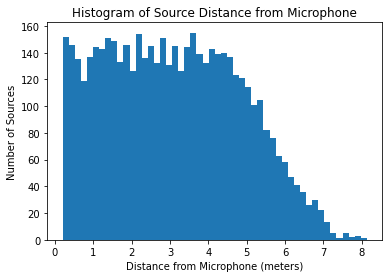

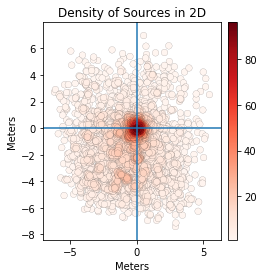

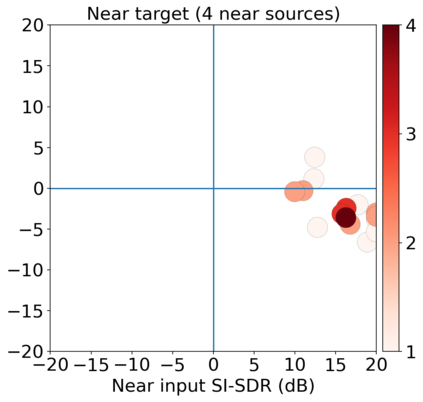

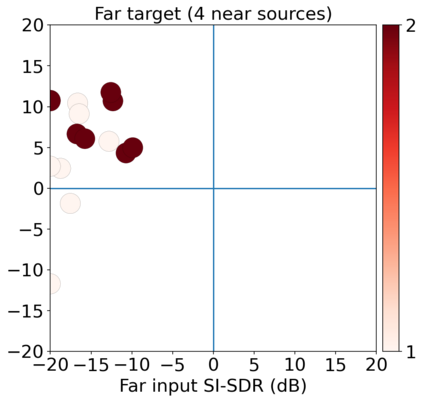

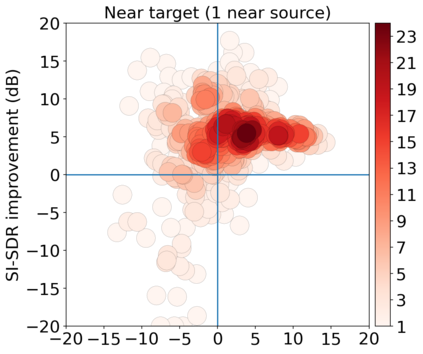

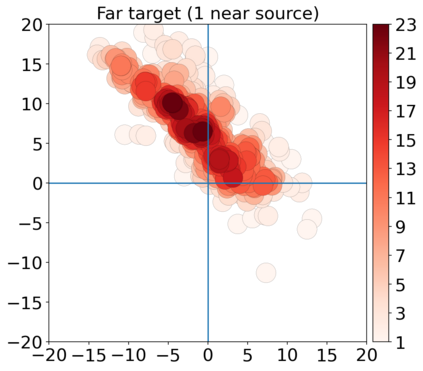

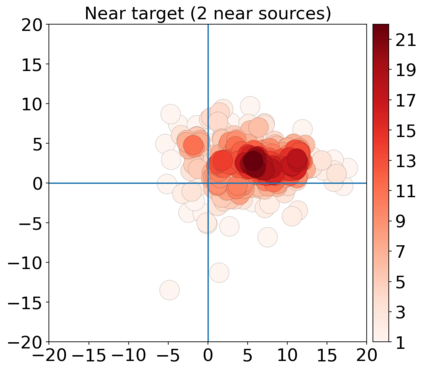

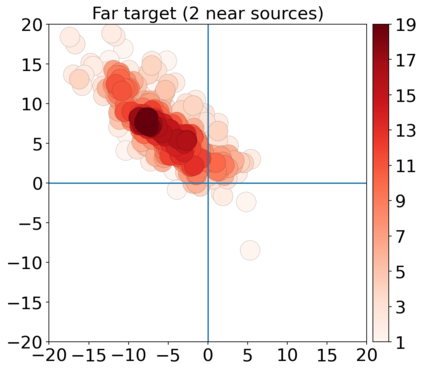

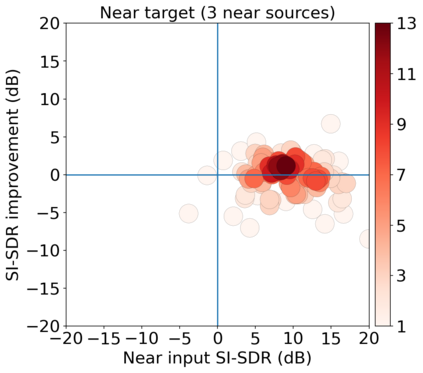

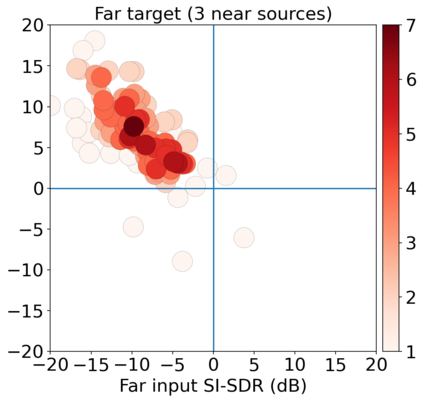

We propose the novel task of distance-based sound separation, where sounds are separated based only on their distance from a single microphone. In the context of assisted listening devices, proximity provides a simple criterion for sound selection in noisy environments that would allow the user to focus on sounds relevant to a local conversation. We demonstrate the feasibility of this approach by training a neural network to separate near sounds from far sounds in single-channel synthetic reverberant mixtures, relative to a threshold distance defining the boundary between near and far. With a single nearby speaker and four distant speakers, the model improves scale-invariant signal-to-noise ratio (SI-SNR) by 4.4 dB for near sounds and 6.8 dB for far sounds.
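To make the reported metric concrete, the following is a minimal sketch of how a scale-invariant signal-to-noise ratio and its improvement over the unprocessed mixture are commonly computed. It is not the authors' evaluation code: the function names `si_snr` and `si_snr_improvement`, the mean removal step, and the small `eps` stabilizer are assumptions made for illustration.

```python
import math


def si_snr(estimate, target, eps=1e-12):
    """Scale-invariant SNR in dB between two equal-length 1-D signals.

    Assumption: both signals are made zero-mean first, which is a common
    convention but is not stated in the abstract.
    """
    if len(estimate) != len(target):
        raise ValueError("estimate and target must have the same length")
    n = len(target)
    e_mean = sum(estimate) / n
    t_mean = sum(target) / n
    e = [x - e_mean for x in estimate]
    t = [x - t_mean for x in target]

    # Project the estimate onto the target so that rescaling the
    # estimate does not change the score.
    dot = sum(a * b for a, b in zip(e, t))
    t_energy = sum(b * b for b in t)
    alpha = dot / (t_energy + eps)
    projection = [alpha * b for b in t]

    # Everything in the estimate that is not explained by the target
    # counts as noise.
    noise = [a - p for a, p in zip(e, projection)]
    signal_energy = sum(p * p for p in projection)
    noise_energy = sum(x * x for x in noise)
    return 10.0 * math.log10((signal_energy + eps) / (noise_energy + eps))


def si_snr_improvement(estimate, mixture, target):
    """SI-SNR gain in dB of the separated estimate over the raw mixture."""
    return si_snr(estimate, target) - si_snr(mixture, target)


if __name__ == "__main__":
    # Toy signals (hypothetical, not from the paper): a "near" tone plus a
    # "far" interfering tone, and an estimate that attenuates the latter.
    near = [math.sin(0.1 * i) for i in range(1000)]
    far = [math.sin(0.37 * i + 1.0) for i in range(1000)]
    mixture = [a + b for a, b in zip(near, far)]
    estimate = [a + 0.1 * b for a, b in zip(near, far)]
    print(f"SI-SNR improvement: {si_snr_improvement(estimate, mixture, near):.1f} dB")
```

Under this definition, an improvement figure such as the 4.4 dB quoted above would be the difference between the SI-SNR of the model output and the SI-SNR of the input mixture, both measured against the same reference signal.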
