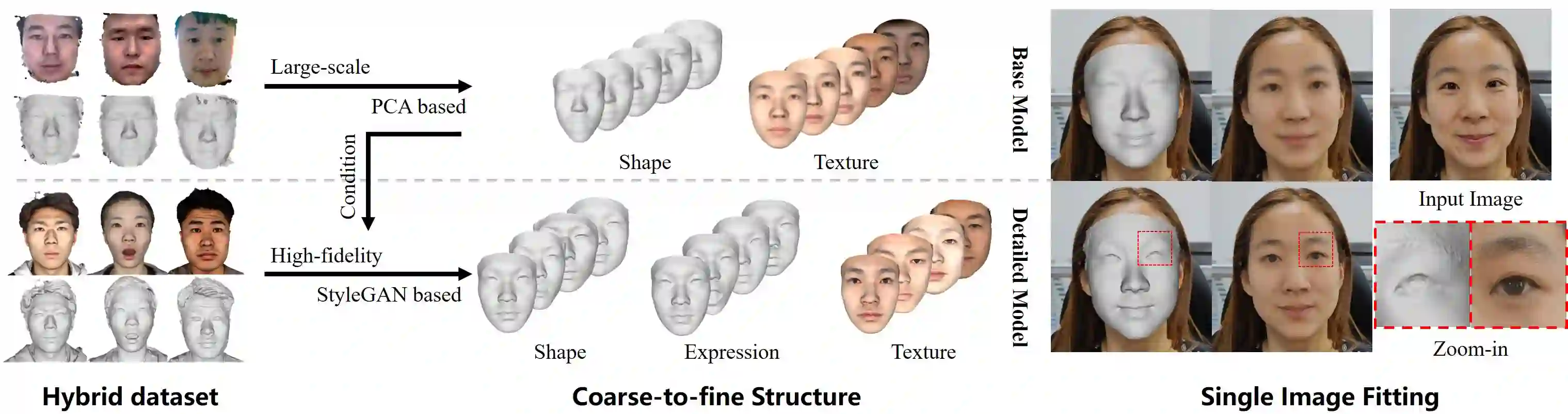

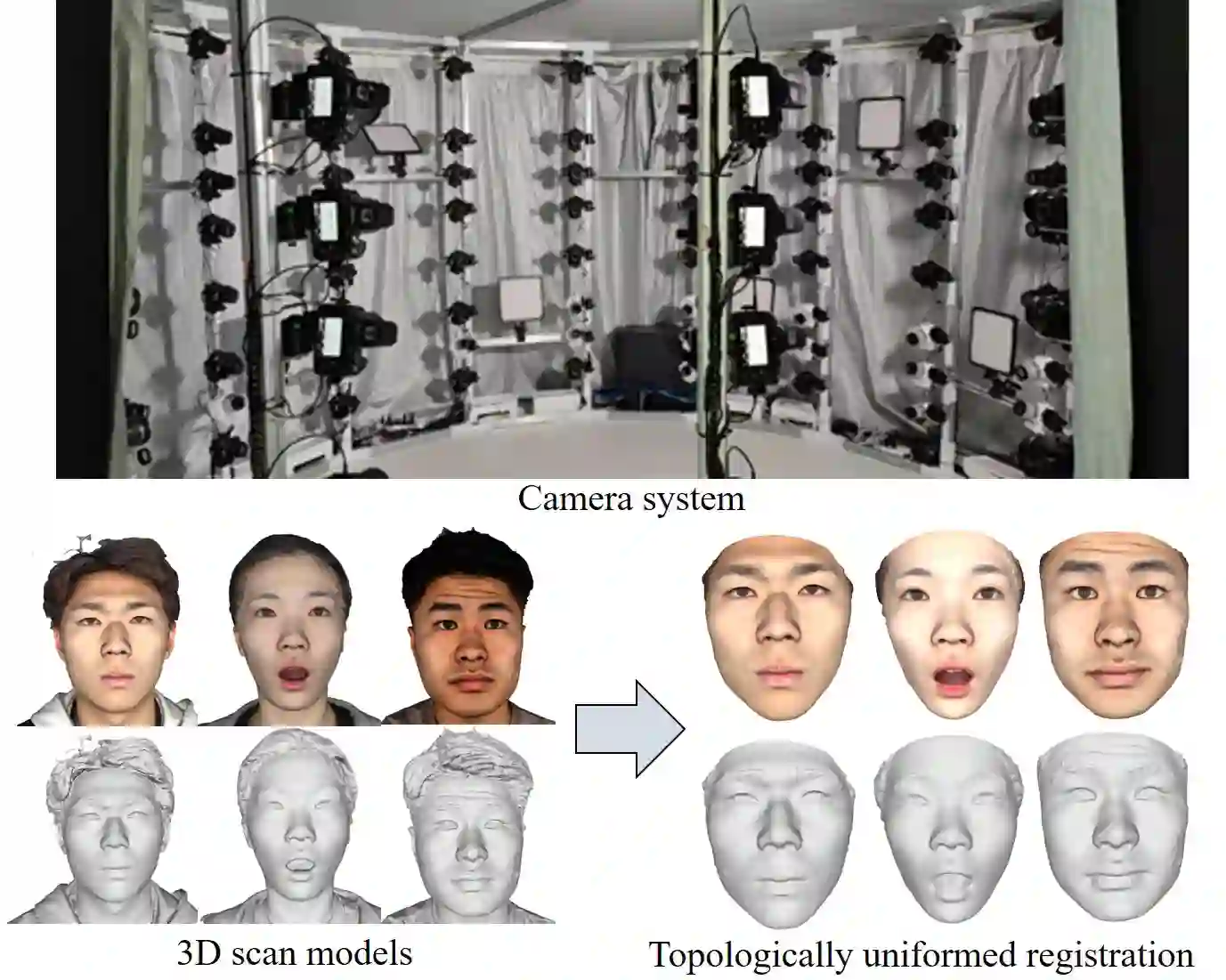

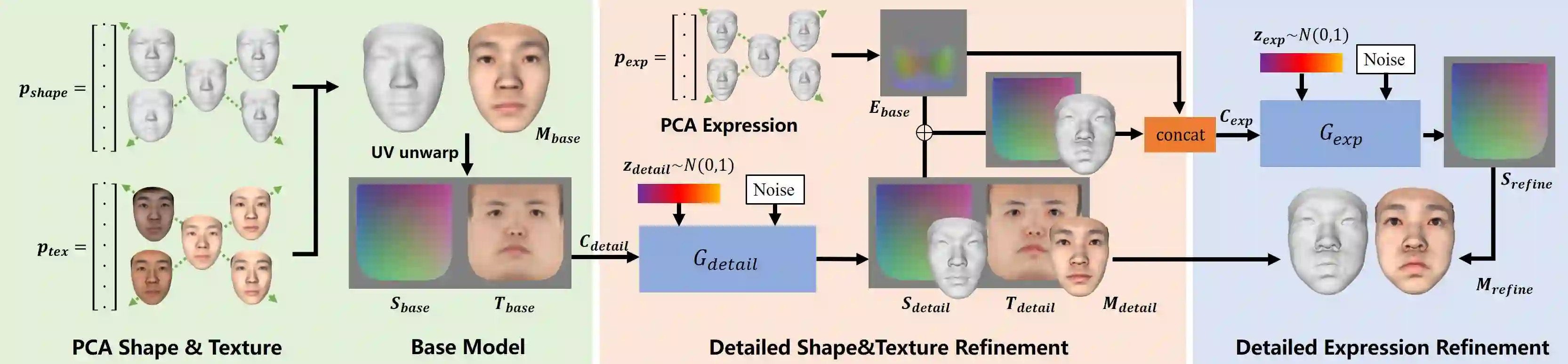

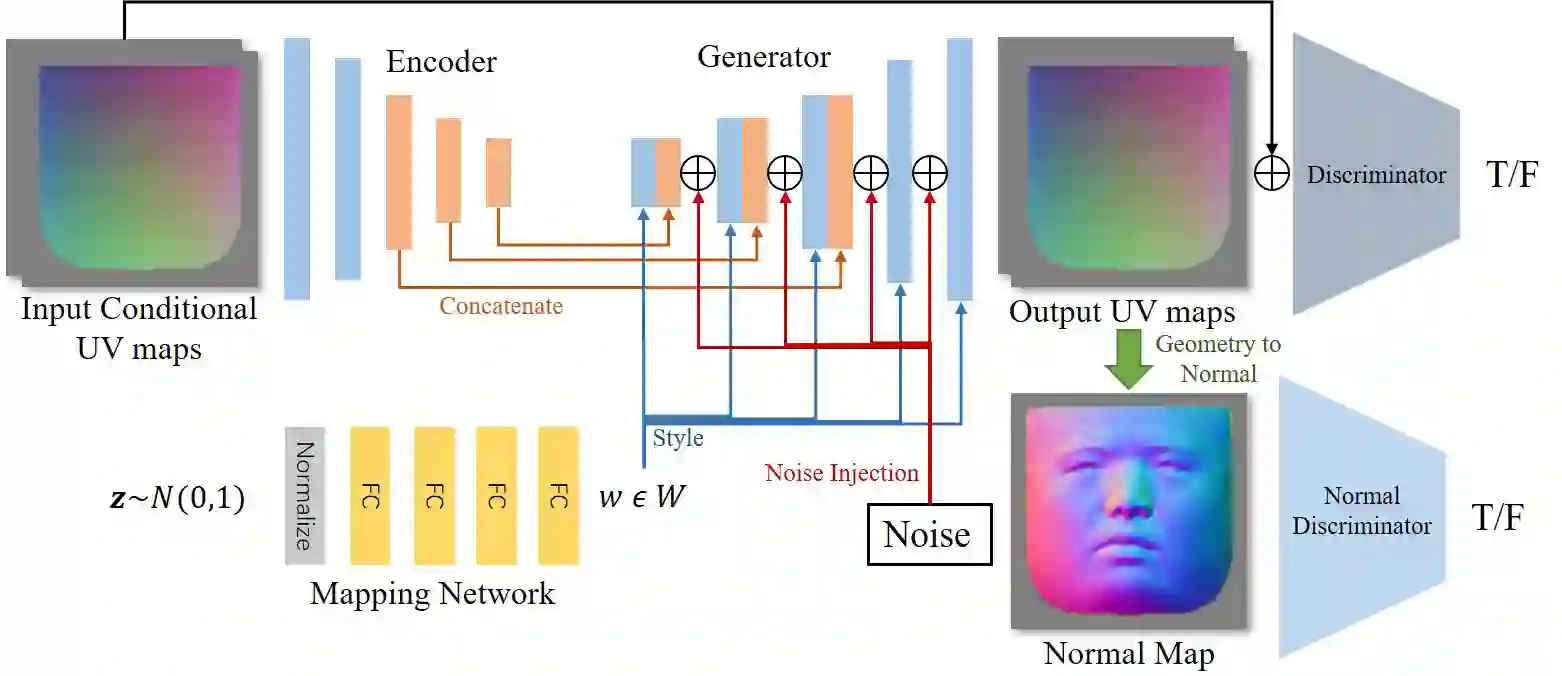

We present FaceVerse, a fine-grained 3D neural face model built from hybrid East Asian face datasets containing 60K fused RGB-D images and 2K high-fidelity 3D head scan models. We propose a novel coarse-to-fine structure to take better advantage of this hybrid dataset. In the coarse module, we generate a base parametric model from the large-scale RGB-D images; it predicts accurate coarse 3D face models across different genders, ages, and other attributes. In the fine module, a conditional StyleGAN architecture trained on the high-fidelity scan models adds detailed facial geometry and texture. Unlike previous methods, both our base and detail modules are editable, which enables a new application: adjusting both the basic attributes and the facial details of a 3D face model. Furthermore, we propose a single-image fitting framework based on differentiable rendering. Extensive experiments show that our method outperforms state-of-the-art methods.
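The abstract describes the coarse module and the fitting framework only at a high level. As a rough illustration, the sketch below assumes a linear, 3DMM-style base model (a mean shape plus identity and expression bases) and fits its coefficients to 2D landmarks by gradient descent under a weak-perspective camera. This is a simplified stand-in, not FaceVerse's implementation: the bases are random placeholders, all sizes and function names (`base_shape`, `project_landmarks`, `fit_coefficients`) are hypothetical, a landmark reprojection loss replaces the full differentiable-rendering loss, and the StyleGAN-based fine module is not modeled.

```python
import numpy as np

# All sizes are illustrative assumptions, not FaceVerse's actual configuration.
N_VERTS = 500   # number of mesh vertices
N_ID = 20       # identity (shape) coefficients
N_EXP = 10      # expression coefficients

rng = np.random.default_rng(0)

# Random placeholders for bases a real model would learn from data (e.g. by PCA).
mean_shape = rng.normal(size=3 * N_VERTS)
basis = np.hstack([
    rng.normal(size=(3 * N_VERTS, N_ID)),   # identity basis
    rng.normal(size=(3 * N_VERTS, N_EXP)),  # expression basis
])


def base_shape(theta):
    """Coarse geometry S = mean + [B_id | B_exp] @ [alpha; beta], shaped (N_VERTS, 3)."""
    return (mean_shape + basis @ theta).reshape(N_VERTS, 3)


def landmark_rows(landmark_ids):
    """Row indices of each landmark's x and y coordinates, interleaved as x0, y0, x1, y1, ..."""
    return np.stack([3 * landmark_ids, 3 * landmark_ids + 1], axis=1).ravel()


def project_landmarks(theta, landmark_ids, scale, trans):
    """Weak-perspective projection of the landmark vertices to 2D, shaped (K, 2)."""
    verts = base_shape(theta)[landmark_ids]
    return scale * verts[:, :2] + trans


def fit_coefficients(target_2d, landmark_ids, scale, trans, reg=1e-3, iters=500):
    """Gradient descent on ||projected - target||^2 + reg * ||theta||^2.

    The camera (scale, trans) is held fixed to keep the sketch short.
    """
    rows = landmark_rows(landmark_ids)
    A = scale * basis[rows]  # (2K, P) Jacobian of the projection w.r.t. theta
    b = scale * mean_shape[rows] + np.tile(trans, len(landmark_ids)) - target_2d.ravel()
    # Step size 1/L, where L is the Lipschitz constant of the gradient.
    step = 1.0 / (2.0 * (np.linalg.norm(A, 2) ** 2 + reg))
    theta = np.zeros(basis.shape[1])
    for _ in range(iters):
        residual = A @ theta + b
        grad = 2.0 * A.T @ residual + 2.0 * reg * theta
        theta -= step * grad
    return theta


# Usage: synthesize landmarks from known coefficients, then recover them.
landmark_ids = rng.choice(N_VERTS, size=68, replace=False)
theta_true = 0.1 * rng.normal(size=N_ID + N_EXP)
scale, trans = 1.5, np.array([0.2, -0.1])
target = project_landmarks(theta_true, landmark_ids, scale, trans)
theta_hat = fit_coefficients(target, landmark_ids, scale, trans)
print("max coefficient error:", np.max(np.abs(theta_hat - theta_true)))
```

With the camera fixed, the loss is quadratic in the coefficients, so plain gradient descent with step 1/L converges reliably. A rendering-based pipeline would instead backpropagate an image-space loss through a differentiable rasterizer and would typically also optimize pose, lighting, and texture.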
