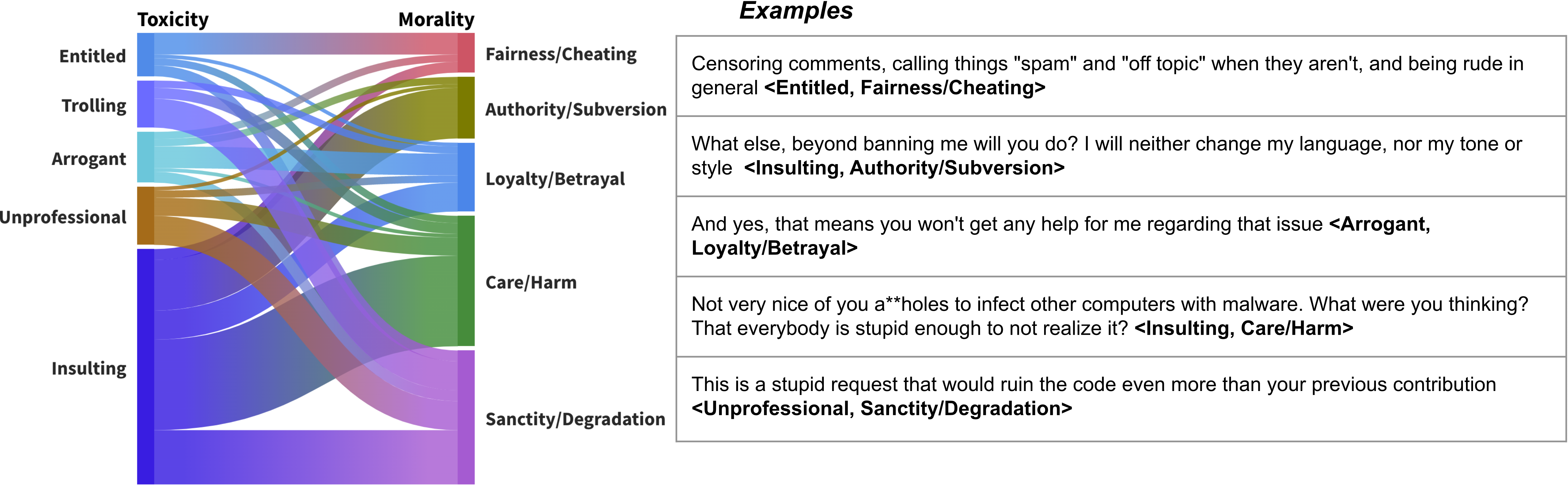

To foster collaboration and inclusivity in Open Source Software (OSS) projects, it is crucial to understand and detect patterns of toxic language that may drive contributors away, especially those from underrepresented communities. Although machine learning-based toxicity detection tools trained on domain-specific data have shown promise, their design does not account for the unique nature and triggers of toxicity in OSS discussions, which highlights the need for further investigation. In this study, we employ Moral Foundations Theory (MFT) to examine the relationship between moral principles and toxicity in OSS. Specifically, we analyze toxic communications in GitHub issue threads to identify and understand five types of moral principles exhibited in text, and explore their potential association with toxic behavior. Our preliminary findings suggest a possible link between moral principles and toxic comments in OSS communications, with each moral principle associated with at least one type of toxicity. The potential of MFT in toxicity detection warrants further investigation.
