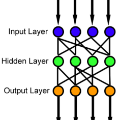

Neural networks have seen an explosion of usage and research in the past decade, particularly in computer vision and natural language processing. Only recently, however, have advances in neural networks yielded performance improvements beyond narrow applications and translated into multitask models capable of generalizing across multiple data types and modalities. At the same time, neural networks have been shown to be highly overparameterized, and pruning techniques have proved capable of significantly reducing the number of active weights in a network while largely preserving performance. In this work, we identify a methodology and network representational structure that allow a pruned network to employ previously unused weights to learn subsequent tasks. We apply these methods to well-known benchmark datasets and show that networks trained using our approaches are able to learn multiple tasks, which may be related or unrelated, in parallel or in sequence without sacrificing performance on any task or exhibiting catastrophic forgetting.
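The idea of reusing pruned weights for later tasks can be illustrated with a small bookkeeping sketch. This is not the method of this work, whose details the abstract does not give; it is a generic illustration that assumes magnitude-based pruning, a flat list of weights, and random numbers standing in for real gradients. The names `magnitude_mask`, `sgd_step`, `owner`, and `keep_fraction` are hypothetical.

```python
import random


def magnitude_mask(weights, free, keep_fraction):
    """Pick the largest-magnitude weights among the still-free positions."""
    n_keep = max(1, int(len(free) * keep_fraction))
    ranked = sorted(free, key=lambda i: abs(weights[i]), reverse=True)
    return set(ranked[:n_keep])


def sgd_step(weights, grads, trainable, lr=0.1):
    """Update only the trainable positions; every other weight stays fixed."""
    for i in trainable:
        weights[i] -= lr * grads[i]


random.seed(0)
n = 10
weights = [random.uniform(-1, 1) for _ in range(n)]
free = set(range(n))   # positions not yet claimed by any task
owner = {}             # weight index -> id of the task that claimed it
task1_snapshot = {}

for task_id in (1, 2):
    # Stand-in for real training: random gradients (an assumption of this
    # sketch), applied only to weights that no earlier task has claimed.
    for _ in range(5):
        grads = [random.uniform(-1, 1) for _ in range(n)]
        sgd_step(weights, grads, free)

    # Prune: the task keeps a fraction of the free weights by magnitude.
    kept = magnitude_mask(weights, free, keep_fraction=0.4)
    for i in free - kept:
        weights[i] = 0.0   # pruned weights are reset and stay available
    for i in kept:
        owner[i] = task_id
    free -= kept

    if task_id == 1:
        task1_snapshot = {i: weights[i] for i in kept}

# Weights claimed by task 1 were never touched while task 2 trained.
task1_unchanged = all(weights[i] == v for i, v in task1_snapshot.items())
```

Because later tasks only update positions that earlier tasks left unclaimed, the weights assigned to the first task are identical before and after the second task trains, which is the property that rules out forgetting in this toy setting.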
