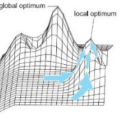

We present Reward-Switching Policy Optimization (RSPO), a paradigm to discover diverse strategies in complex RL environments by iteratively finding novel policies that are both locally optimal and sufficiently different from existing ones. To encourage the learning policy to consistently converge towards a previously undiscovered local optimum, RSPO switches between extrinsic and intrinsic rewards via a trajectory-based novelty measurement during the optimization process. When a sampled trajectory is sufficiently distinct from those of existing policies, RSPO performs standard policy optimization with extrinsic rewards. For trajectories with high likelihood under existing policies, RSPO uses an intrinsic diversity reward to promote exploration. Experiments show that RSPO discovers a wide spectrum of strategies in a variety of domains, ranging from single-agent particle-world tasks and MuJoCo continuous control to multi-agent stag-hunt games and StarCraft II challenges.
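
The switching rule described above can be illustrated with a minimal sketch. It is not the authors' implementation: the names `trajectory_nll` and `switch_rewards`, the use of mean negative log-likelihood as the novelty measure, the single `threshold`, and the exact form of the intrinsic reward are all assumptions made for illustration. The abstract only states that trajectories with high likelihood under existing policies receive an intrinsic diversity reward, while sufficiently distinct ones are optimized with extrinsic rewards.

```python
from typing import Callable, List, Sequence, Tuple

# A step is (state, action, extrinsic_reward); a policy is represented only by
# its log-probability function log pi(a | s). Both types are hypothetical.
Step = Tuple[object, object, float]
LogProbFn = Callable[[object, object], float]


def trajectory_nll(traj: Sequence[Step], log_prob: LogProbFn) -> float:
    """Mean negative log-likelihood of the trajectory's actions under a policy.

    A high value means the policy would rarely produce this trajectory,
    i.e. the trajectory is novel with respect to that policy (assumed measure).
    """
    return -sum(log_prob(s, a) for s, a, _ in traj) / len(traj)


def switch_rewards(
    traj: Sequence[Step],
    existing_policies: Sequence[LogProbFn],
    threshold: float,
    intrinsic_scale: float = 1.0,
) -> List[float]:
    """Return the per-step rewards used for the policy update.

    If the trajectory is novel w.r.t. every existing policy, keep the
    extrinsic rewards. Otherwise replace them with an intrinsic diversity
    reward that grows as actions become less likely under the policies
    that found the trajectory too familiar (assumed reward form).
    """
    if not traj:
        return []
    nlls = [trajectory_nll(traj, lp) for lp in existing_policies]
    if all(nll >= threshold for nll in nlls):
        # Sufficiently distinct: standard optimization on extrinsic rewards.
        return [r for _, _, r in traj]
    # Too similar to at least one existing policy: switch to intrinsic rewards.
    familiar = [lp for lp, nll in zip(existing_policies, nlls) if nll < threshold]
    return [
        intrinsic_scale * sum(-lp(s, a) for lp in familiar)
        for s, a, _ in traj
    ]
```

With an empty `existing_policies` list the function returns the extrinsic rewards unchanged, so in this sketch the first iteration reduces to ordinary policy optimization; each later iteration would add the newly trained policy to the list before searching for the next strategy.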
