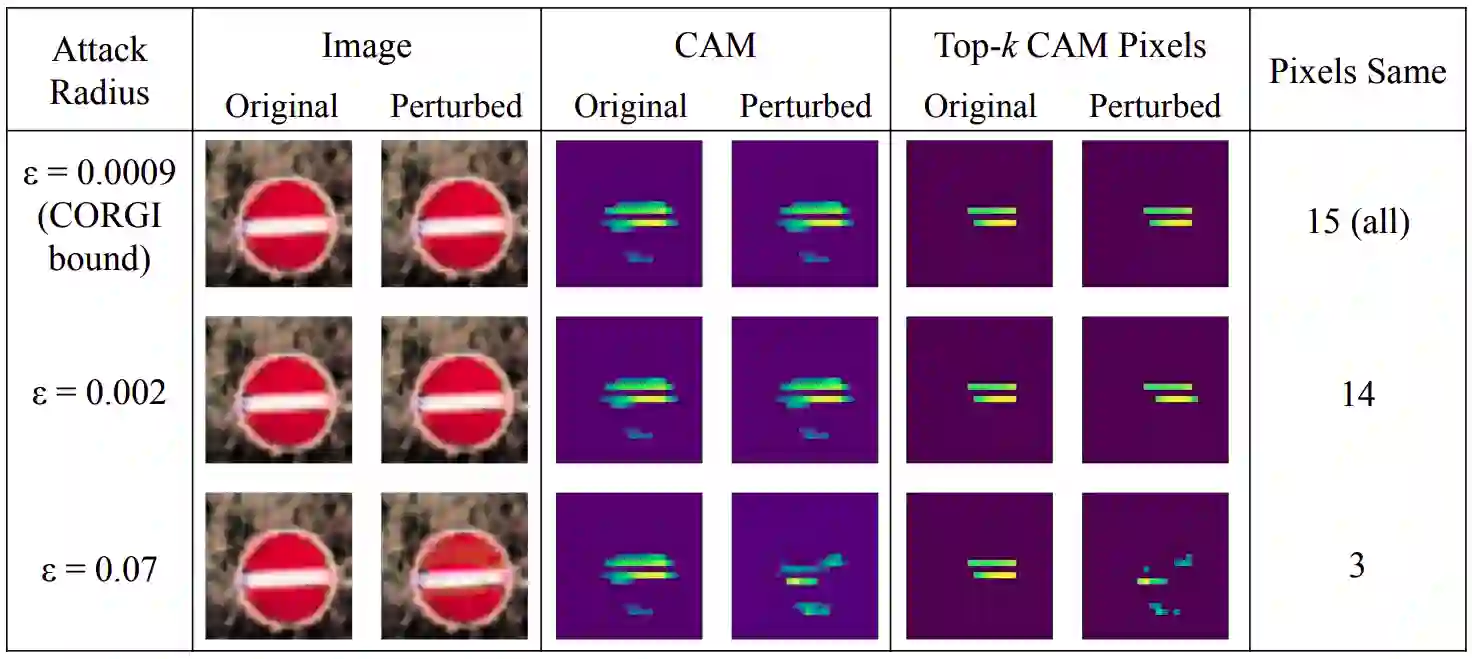

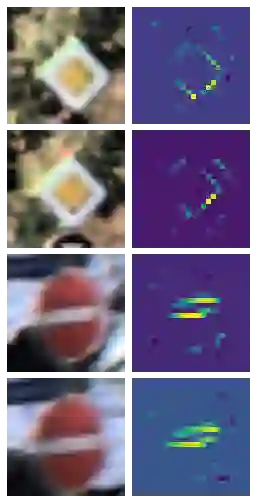

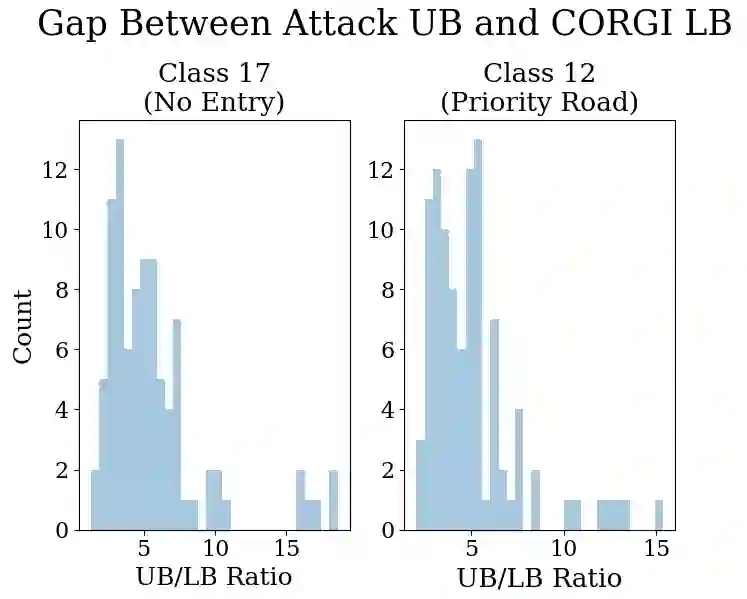

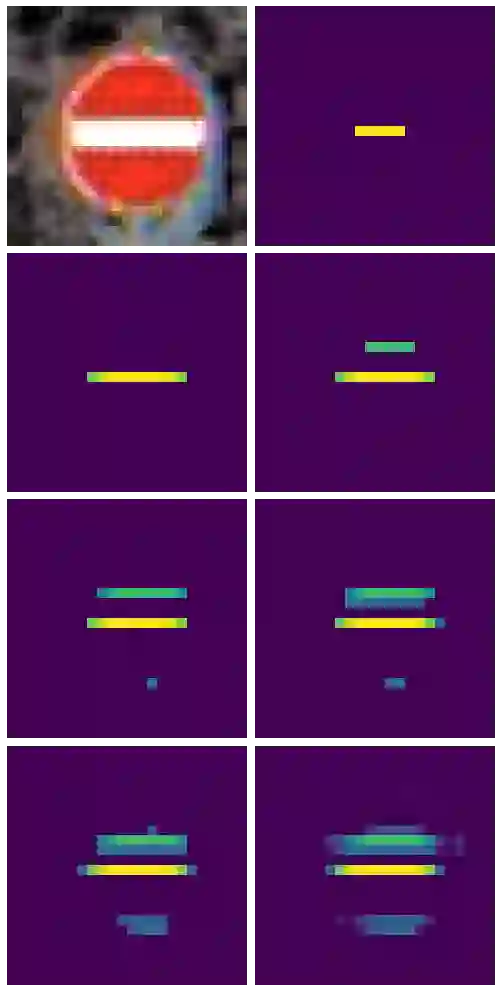

Interpreting machine learning models is challenging but crucial for ensuring the safety of deep networks in autonomous driving systems. Due to the prevalence of deep learning based perception models in autonomous vehicles, accurately interpreting their predictions is crucial. While a variety of such methods have been proposed, most are shown to lack robustness. Yet, little has been done to provide certificates for interpretability robustness. Taking a step in this direction, we present CORGI, short for Certifiably prOvable Robustness Guarantees for Interpretability mapping. CORGI is an algorithm that takes in an input image and gives a certifiable lower bound for the robustness of the top k pixels of its CAM interpretability map. We show the effectiveness of CORGI via a case study on traffic sign data, certifying lower bounds on the minimum adversarial perturbation not far from (4-5x) state-of-the-art attack methods.

翻译:解释机器学习模型具有挑战性,但对于确保自主驾驶系统深层网络的安全至关重要。由于自主车辆中普遍存在基于深层次学习的认知模型,准确解释其预测至关重要。虽然提出了各种这类方法,但大多数方法都显示缺乏稳健性。然而,在提供可解释性坚固性证书方面没有做多少工作。在这方面迈出一步,我们介绍CORGI,为可核实的可解释性绘图的可证实性可靠保障提供简称。CORGI是一种算法,在输入图像中取用,并为CAM可解释性地图的顶部千像素的强度提供可验证的下限。我们通过对交通标志数据的案例研究展示CORGI的有效性,在离(4-5x)最远的攻击方法的最低限度的对角性扰动性扰动性保障上证明下限。