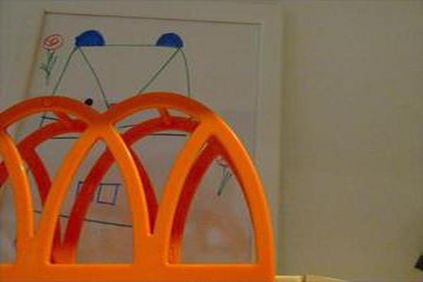

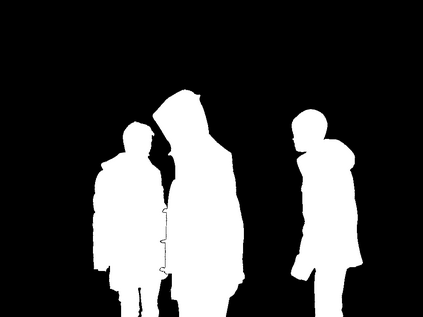

RGB-D saliency detection integrates information from both RGB images and depth maps to improve the prediction of salient regions under challenging conditions. The key to RGB-D saliency detection is to fully mine and fuse information at multiple scales across the two modalities. Previous approaches tend to perform multi-scale and multi-modal fusion separately via local operations, which fail to capture long-range dependencies. Here we propose a transformer-based network to address this issue. The proposed architecture is composed of two modules: a transformer-based within-modality feature enhancement module (TWFEM) and a transformer-based feature fusion module (TFFM). TFFM performs thorough feature fusion by integrating features from multiple scales and both modalities over all positions simultaneously. Before TFFM, TWFEM enhances the features at each scale by selecting and integrating complementary information from the other scales within the same modality. We show that the transformer is a uniform operation that is highly effective for both feature fusion and feature enhancement, and that it simplifies the model design. Extensive experimental results on six benchmark datasets demonstrate that our proposed network performs favorably against state-of-the-art RGB-D saliency detection methods.
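To make the two kinds of attention more concrete, here is a minimal NumPy sketch under stated assumptions. It is not the authors' implementation. The function names (`attention`, `twfem`, `tffm`), the single-head attention with no layer normalization or feed-forward sublayer, the shared channel width `C`, the random weights, and the three feature-map sizes are all hypothetical choices made for illustration. The sketch only shows the token bookkeeping that the abstract describes. Within a modality, each scale's tokens query the tokens of the other scales. In fusion, the tokens of every scale from both modalities are concatenated, so that a single self-attention step relates all positions at once.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q_tokens, kv_tokens, wq, wk, wv):
    # Single-head scaled dot-product attention: every query token
    # attends to every key/value token, regardless of spatial distance.
    q, k, v = q_tokens @ wq, kv_tokens @ wk, kv_tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def flatten(feature_map):
    # (C, H, W) feature map -> (H*W, C) token sequence.
    c, h, w = feature_map.shape
    return feature_map.reshape(c, h * w).T

def twfem(scales, params):
    # Hypothetical within-modality enhancement: the tokens of each scale
    # attend to the tokens of the OTHER scales of the same modality,
    # and the result is added back residually. Needs at least two scales.
    enhanced = []
    for i, tokens in enumerate(scales):
        others = np.concatenate([t for j, t in enumerate(scales) if j != i], axis=0)
        enhanced.append(tokens + attention(tokens, others, *params))
    return enhanced

def tffm(rgb_scales, depth_scales, params):
    # Hypothetical fusion: joint self-attention over all tokens from all
    # scales of both modalities, with a residual connection.
    all_tokens = np.concatenate(rgb_scales + depth_scales, axis=0)
    return all_tokens + attention(all_tokens, all_tokens, *params)

rng = np.random.default_rng(0)
C = 16                              # assumed shared channel width
sizes = [(8, 8), (4, 4), (2, 2)]    # three hypothetical scales
rgb = [flatten(rng.standard_normal((C, h, w))) for h, w in sizes]
depth = [flatten(rng.standard_normal((C, h, w))) for h, w in sizes]

def make_params():
    # Random query/key/value projections standing in for learned weights.
    return tuple(rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3))

rgb_enh = twfem(rgb, make_params())
depth_enh = twfem(depth, make_params())
fused = tffm(rgb_enh, depth_enh, make_params())
print(fused.shape)  # (168, 16): 64 + 16 + 4 tokens per modality, two modalities
```

Because the joint attention in `tffm` runs over the full concatenation, its cost grows quadratically with the total number of tokens across all scales and both modalities.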
