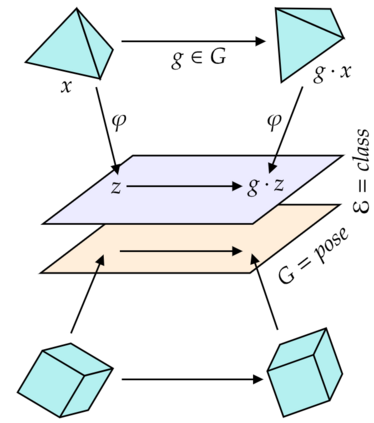

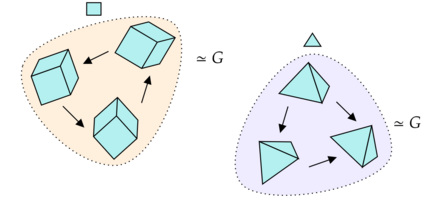

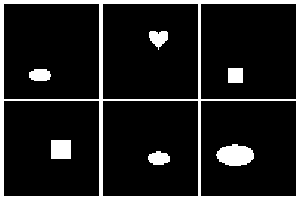

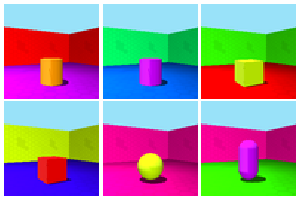

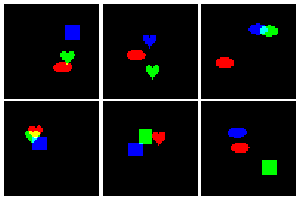

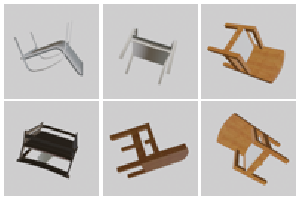

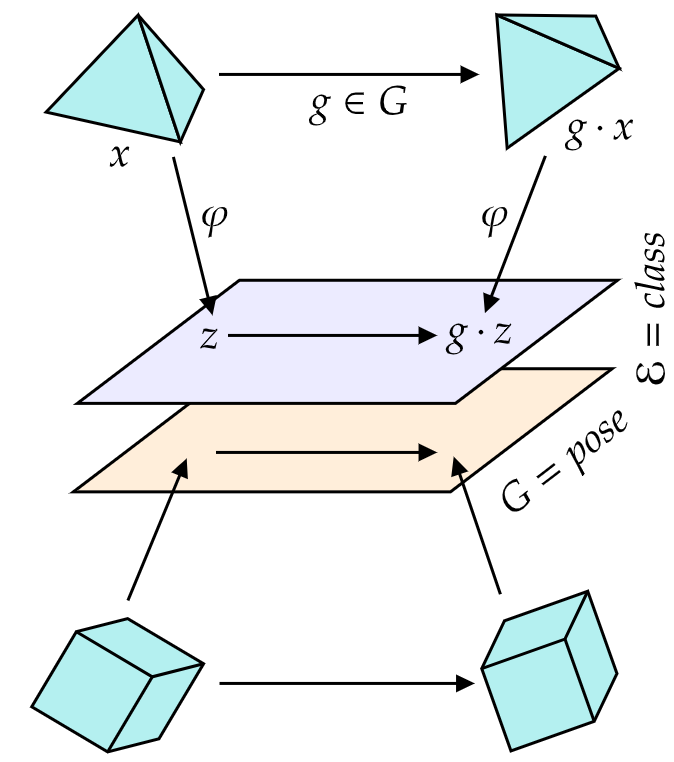

We introduce a general method for learning representations that are equivariant to symmetries of data. The central idea is to decompose the latent space into an invariant factor and the symmetry group itself. The two components semantically correspond to intrinsic data classes and poses, respectively. The learner is self-supervised and infers these semantics from relative symmetry information. The approach is motivated by theoretical results from group theory and guarantees representations that are lossless, interpretable and disentangled. We investigate the approach empirically through experiments on datasets with a variety of symmetries. Results show that our representations capture the geometry of data and outperform other equivariant representation learning frameworks.
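The following is a minimal toy sketch of the class/pose decomposition. It is not the authors' method. It assumes the symmetry group is SO(2), acting on 2D point clouds by rotation about the origin. Points and group elements are represented as complex numbers, so a rotation by θ is multiplication by e^{iθ}.

The functions `encode`, `decode` and `equivariance_losses` are hypothetical, and the encoder is hand-built rather than learned:

- The pose is the direction of the cloud's mean.
- The class is the cloud rotated back to that canonical orientation.

Given a pair (x, g·x) and the relative rotation g, the losses check two properties. The class component should be invariant, and the pose component should transform as pose(g·x) = g·pose(x).

```python
import numpy as np


def encode(points):
    """Toy class/pose encoder for 2D point clouds under rotations about the origin.

    points: complex array of shape (n,), each entry x + iy.
    Returns (cls, pose): pose is a unit complex number (an element of SO(2)),
    cls is the cloud rotated back to a canonical orientation.
    Assumes the mean of the cloud is non-zero, so that the pose is well defined.
    """
    m = points.mean()
    pose = m / abs(m)                # direction of the mean: rotates along with the data
    cls = np.conj(pose) * points     # undo the pose: invariant to rotations of the input
    return cls, pose


def decode(cls, pose):
    """Recover the input by letting the pose act on the canonical cloud."""
    return pose * cls


def equivariance_losses(x, gx, g):
    """Losses for a pair (x, g.x) with known relative rotation g (unit complex).

    inv: squared distance between the class components (zero if invariant).
    eqv: squared distance between pose(g.x) and g * pose(x) (zero if equivariant).
    """
    cls_x, pose_x = encode(x)
    cls_gx, pose_gx = encode(gx)
    inv = np.sum(np.abs(cls_gx - cls_x) ** 2)
    eqv = abs(pose_gx - g * pose_x) ** 2
    return inv, eqv


rng = np.random.default_rng(0)
x = rng.normal(size=5) + 1j * rng.normal(size=5) + (1 + 1j)  # offset keeps the mean away from 0
g = np.exp(1j * 0.7)                                         # relative rotation by 0.7 rad
inv, eqv = equivariance_losses(x, g * x, g)
print(inv < 1e-12, eqv < 1e-12)                # True True
cls, pose = encode(x)
print(np.allclose(decode(cls, pose), x))       # True
```

Because the pose fixes a canonical orientation, the pair (class, pose) determines the input exactly. This mirrors the lossless property claimed above, but only in this toy setting.

A learned encoder would instead be trained to drive both loss terms toward zero. Note that the invariance term alone is also minimized by a constant class map, so a practical objective needs an additional term to keep the class component informative.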

翻译:我们引入了一种一般的学习表达方法,该方法与数据对称不等,中心思想是将潜在空间分解成一个变量因素和对称组本身。这些组成部分与内在数据类别和构成分别对应。学习者自我监督,根据相对对称信息推断出这些语义。该方法的动力来自团体理论的理论结果和保证无损、可解释和分解的表述。我们通过涉及各种对称的数据集的实验,实证地调查了该方法。结果显示,我们的表达方式反映了数据的几何性,并超越了其他等式代表学习框架。