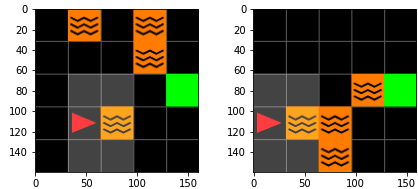

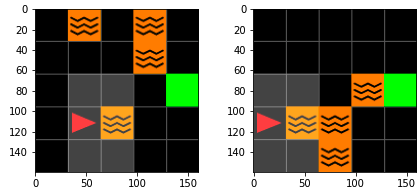

Explainability of Reinforcement Learning (RL) policies remains a challenging research problem, particularly when RL is considered in a safety context. Understanding the decisions and intentions of an RL policy offers avenues to incorporate safety into the policy by limiting undesirable actions. We propose the use of a Boolean Decision Rules model to create a post-hoc, rule-based summary of an agent's policy. We evaluate the proposed approach using a DQN agent trained on an implementation of a lava gridworld and show that, given a hand-crafted feature representation of this gridworld, simple generalised rules can be created, yielding a post-hoc explainable summary of the agent's policy. We discuss possible avenues for introducing safety into an RL agent's policy by using the rules generated by this rule-based model as constraints imposed on the policy, and discuss how simple rule summaries of an agent's policy may aid the debugging of RL agents.
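The general idea of a post-hoc rule summary (query the trained policy on feature-encoded states, then fit an OR-of-ANDs rule per action) can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the three binary features (`lava_ahead`, `lava_left`, `goal_left`) are hypothetical, `toy_policy` is a hand-written stand-in for the trained DQN's greedy action, and the rule learner is a simple greedy sequential-covering algorithm swapped in for the Boolean Decision Rules optimisation, not that model itself.

```python
from itertools import combinations, product

# Hypothetical binary hand-crafted features for a lava gridworld.
FEATURES = ("lava_ahead", "lava_left", "goal_left")


def toy_policy(state):
    """Hypothetical stand-in for argmax_a Q(s, a) of a trained DQN agent."""
    if state["lava_ahead"]:
        return "turn_right"
    if state["goal_left"] and not state["lava_left"]:
        return "turn_left"
    return "forward"


def collect_dataset(policy):
    """Query the policy on every binary feature vector to get (state, action) pairs."""
    data = []
    for bits in product((0, 1), repeat=len(FEATURES)):
        state = dict(zip(FEATURES, bits))
        data.append((state, policy(state)))
    return data


def covers(clause, state):
    """A clause is a tuple of (feature, value) literals joined by AND."""
    return all(state[f] == v for f, v in clause)


def learn_dnf(data, target, max_len=3):
    """Greedy sequential covering: an OR of AND-clauses with no false positives."""
    positives = [s for s, a in data if a == target]
    negatives = [s for s, a in data if a != target]
    literals = [(f, v) for f in FEATURES for v in (0, 1)]
    rules = []
    while positives:
        best, best_cov = None, 0
        for length in range(1, max_len + 1):  # shorter clauses win ties
            for clause in combinations(literals, length):
                if len({f for f, _ in clause}) < length:
                    continue  # skip clauses that test the same feature twice
                if any(covers(clause, s) for s in negatives):
                    continue  # keep only clauses that never fire on other actions
                cov = sum(covers(clause, s) for s in positives)
                if cov > best_cov:
                    best, best_cov = clause, cov
        if best is None:
            break  # no pure clause within max_len; remaining positives stay uncovered
        rules.append(best)
        positives = [s for s in positives if not covers(best, s)]
    return rules


def predict(rules, state):
    """The DNF fires if any of its clauses covers the state."""
    return any(covers(clause, state) for clause in rules)


def describe(rules):
    """Render a DNF as readable text, e.g. '(NOT lava_ahead AND lava_left)'."""
    clauses = []
    for clause in rules:
        lits = [f if v else f"NOT {f}" for f, v in clause]
        clauses.append("(" + " AND ".join(lits) + ")")
    return " OR ".join(clauses) if clauses else "FALSE"


if __name__ == "__main__":
    data = collect_dataset(toy_policy)
    for action in ("forward", "turn_left", "turn_right"):
        rules = learn_dnf(data, action)
        fidelity = sum(predict(rules, s) == (a == action) for s, a in data) / len(data)
        print(f"{action}: IF {describe(rules)}  [fidelity {fidelity:.2f}]")
```

For this toy policy the summary for `turn_right` reduces to the single rule `(lava_ahead)`, while the rule for `forward` contains two clauses. Enumerating every feature vector is only feasible for a handful of binary features; with a real agent the (state, action) pairs would more plausibly be gathered from rollouts, and fidelity would then measure how faithfully the rules reproduce the policy on those visited states.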

翻译:加强学习(RL)政策的可解释性仍然是一个具有挑战性的研究问题,特别是在安全背景下考虑RL时。理解RL政策的决定和意图提供了通过限制不受欢迎的行动将安全纳入政策的途径。我们提议使用布林决定规则模式,对代理人的政策进行事后按规则总结。我们使用经过实施熔岩电网世界培训的DQN代理来评估我们提议的方法,并表明,鉴于这个网格世界的手工制作特征,可以制定简单的通用规则,对代理人的政策进行事后可解释的概述。我们讨论了将这种基于规则的模式所产生的规则作为代理人政策的限制,从而将安全纳入RL代理人政策的可能途径,并讨论了为代理人政策制定简单的规则摘要如何有助于减少调试过程。