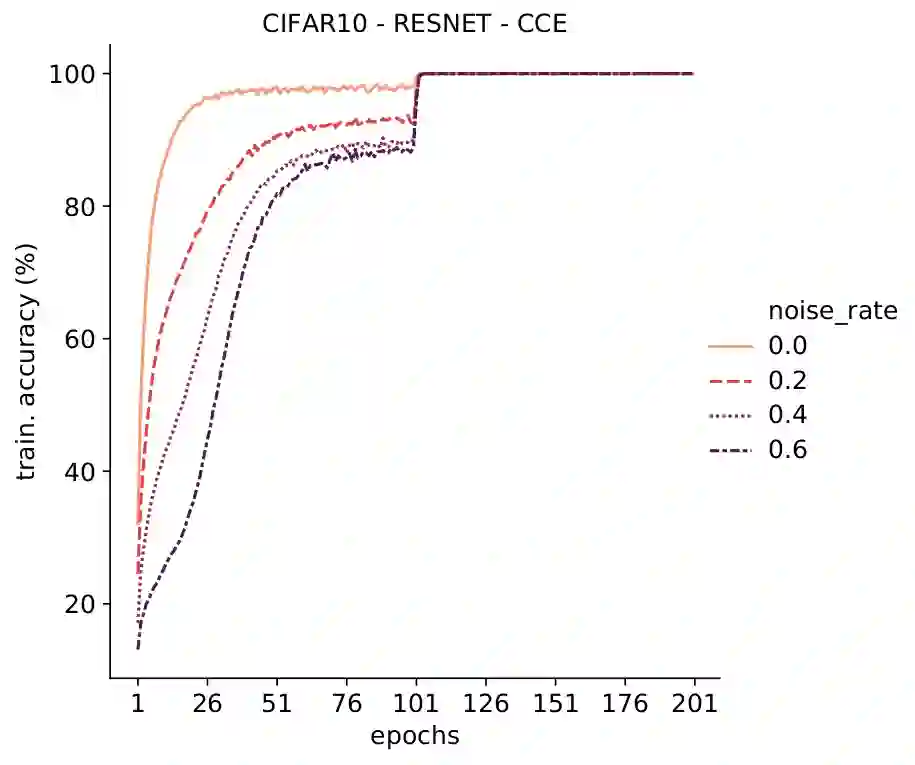

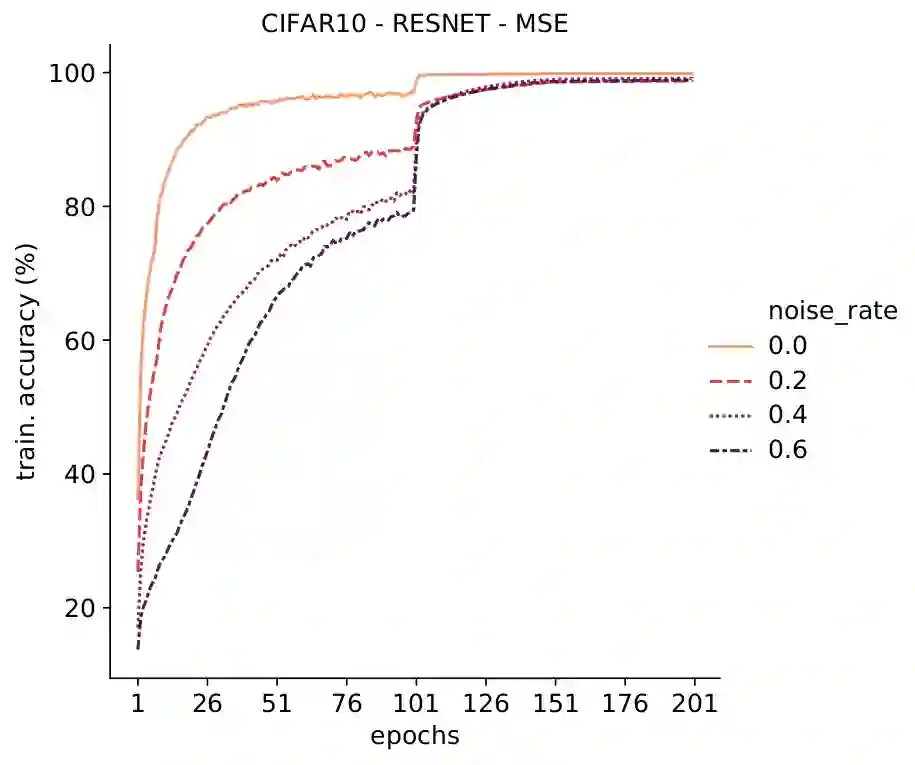

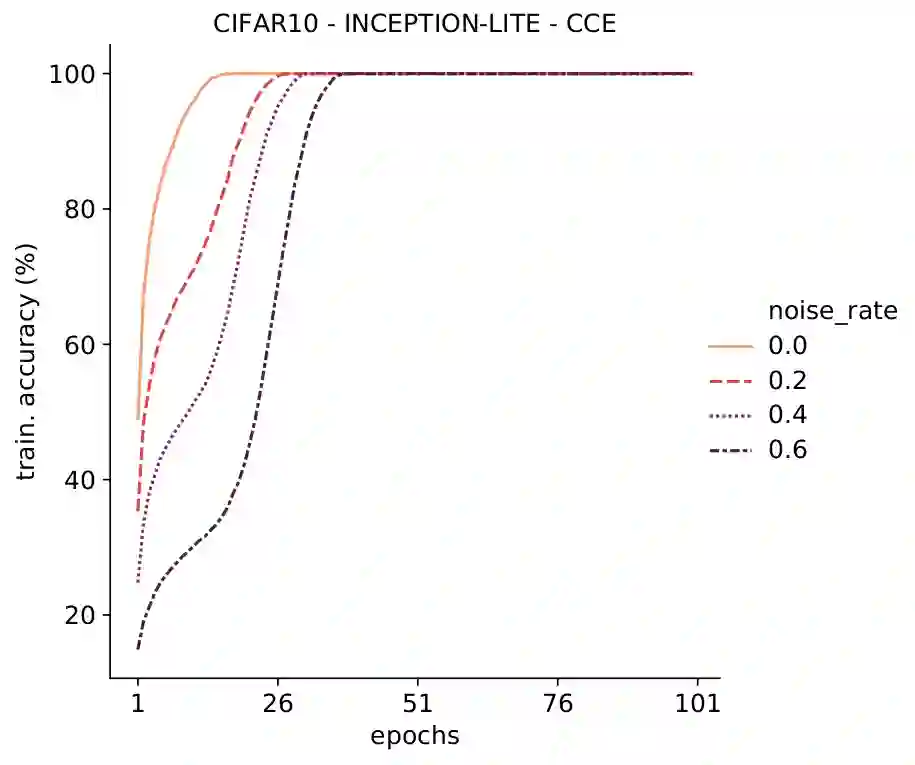

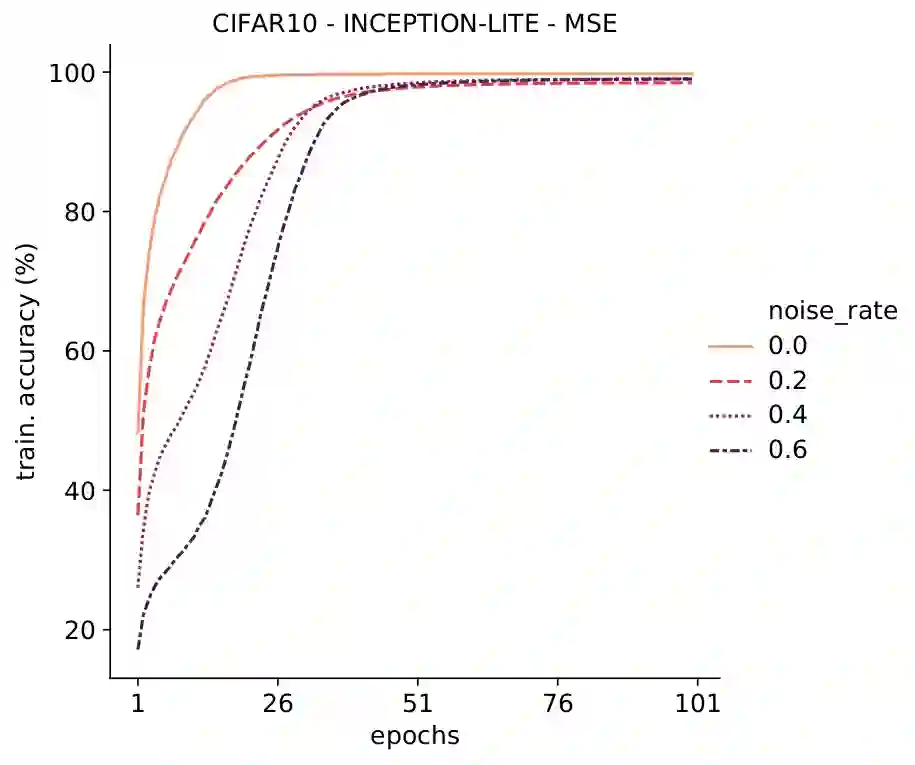

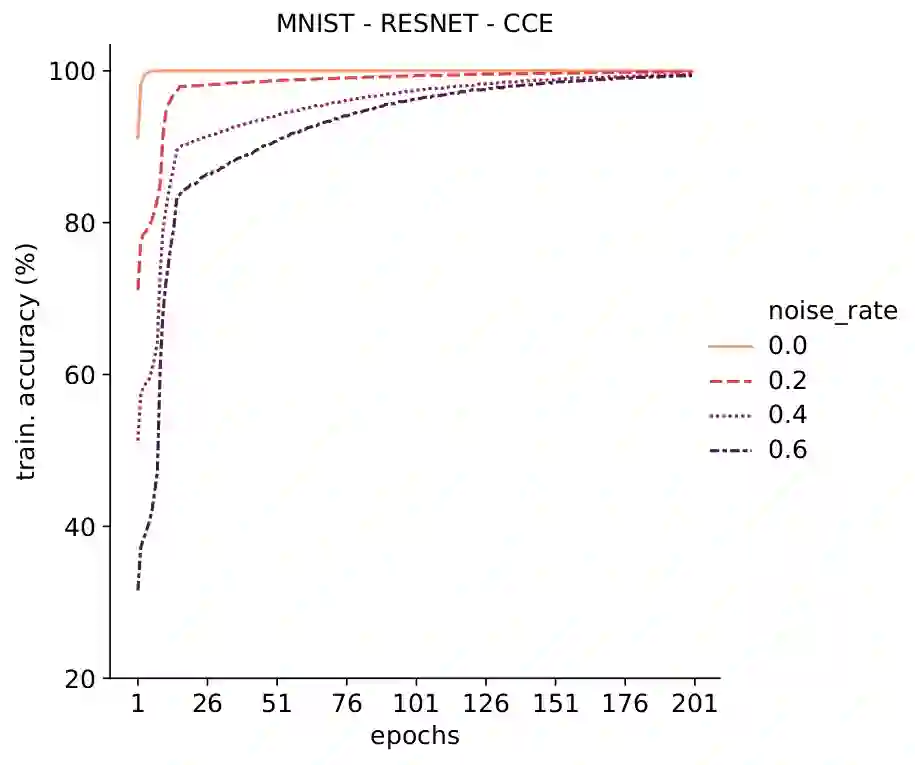

Deep neural networks, often owing to overparameterization, have been shown to be capable of exactly memorizing even randomly labelled data. Empirical studies have also shown that none of the standard regularization techniques mitigate such overfitting. We investigate whether the choice of loss function can affect this memorization. We show empirically, on the benchmark data sets MNIST and CIFAR-10, that a symmetric loss function, as opposed to either cross-entropy or squared error loss, significantly improves the ability of the network to resist such overfitting. We then provide a formal definition of robustness to memorization and a theoretical explanation of why symmetric losses provide this robustness. Our results clearly bring out the role that the loss function alone can play in this phenomenon of memorization.
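The abstract does not spell out what makes a loss symmetric. A minimal sketch follows, assuming the definition common in the label-noise literature: a loss is symmetric if, for any fixed prediction, its values summed over all K candidate labels equal a constant that does not depend on the prediction. The absolute-error loss on softmax outputs is used here only as a familiar example of such a loss and is not necessarily the loss used in the experiments. The function names are hypothetical and chosen for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (numerically stabilized)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Standard cross-entropy loss for a single example."""
    return -math.log(probs[label])


def absolute_error(probs, label):
    """L1 distance between the predicted distribution and the one-hot target."""
    return sum(abs((1.0 if k == label else 0.0) - p) for k, p in enumerate(probs))


def sum_over_labels(loss, probs):
    """Sum the loss over every possible label for a fixed prediction."""
    return sum(loss(probs, j) for j in range(len(probs)))


if __name__ == "__main__":
    # Two arbitrary predictions over K = 3 classes (illustrative values only).
    for logits in ([2.0, -1.0, 0.5], [0.0, 0.0, 0.0]):
        probs = softmax(logits)
        print("absolute error, summed over labels:", sum_over_labels(absolute_error, probs))
        print("cross-entropy, summed over labels: ", sum_over_labels(cross_entropy, probs))
```

For the absolute-error loss, the sum over labels works out to 2K - 2 for any probability vector, so it is symmetric under the assumed definition. The cross-entropy sum changes with the prediction, so cross-entropy is not.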
