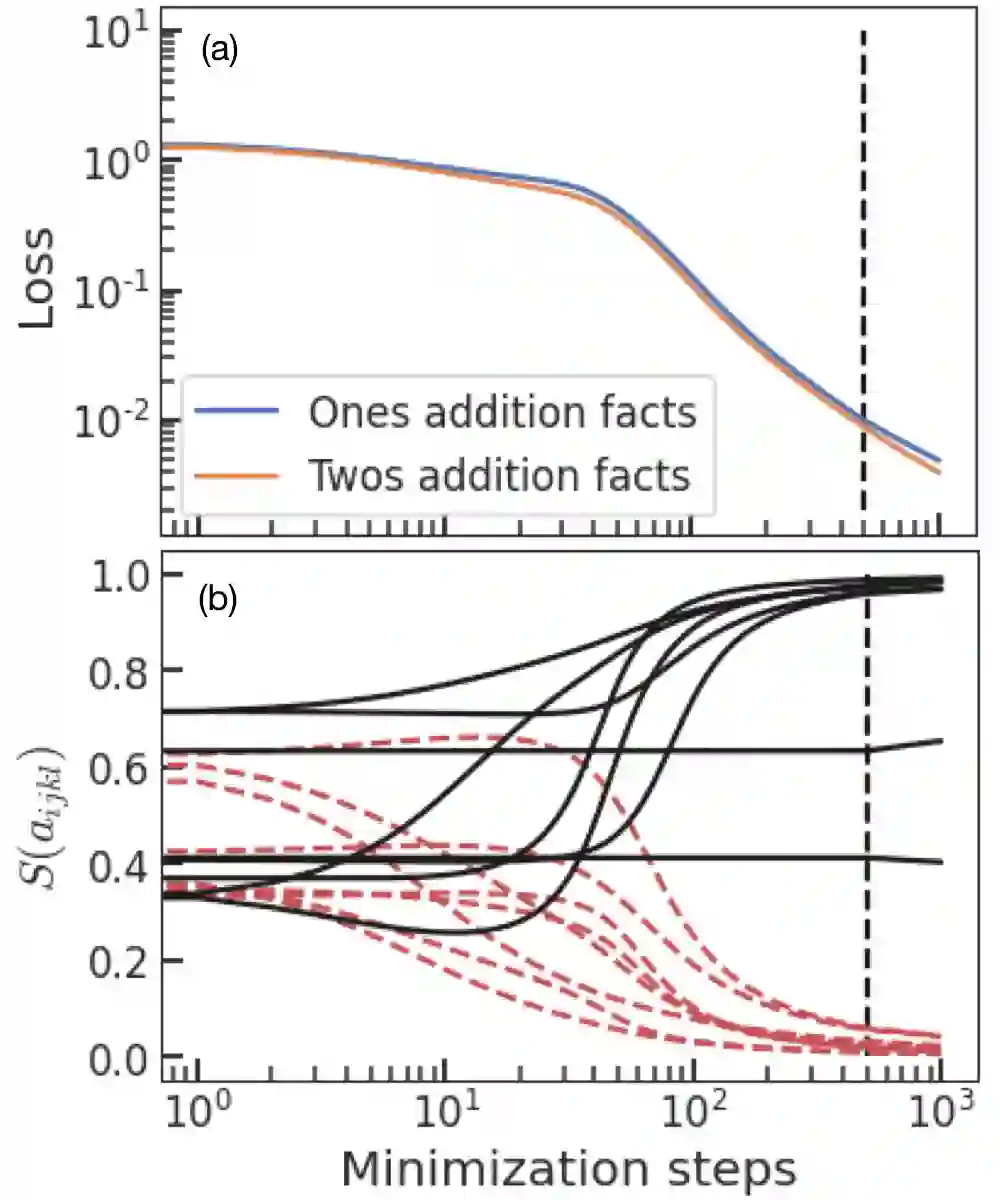

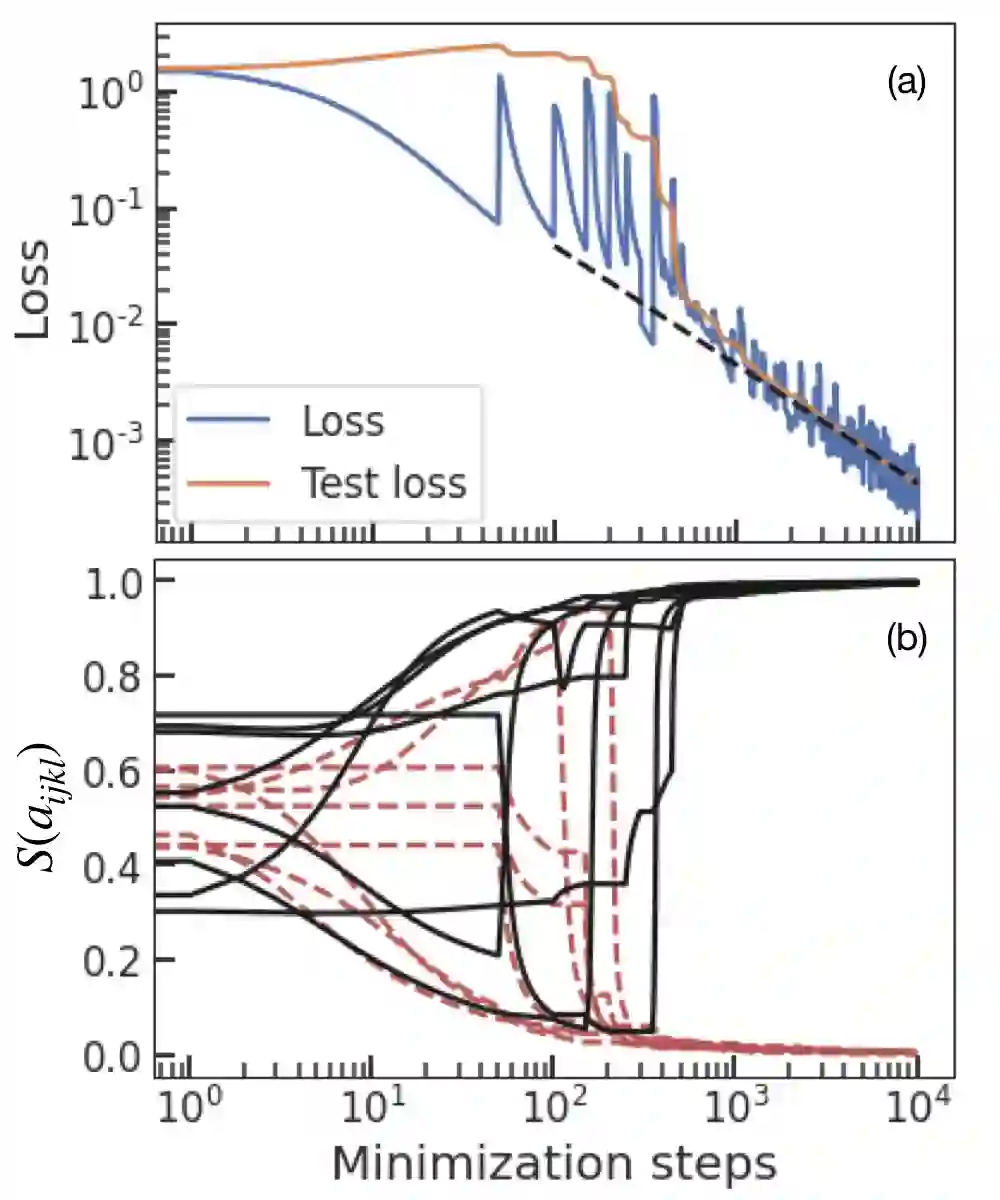

The work of McCloskey and Cohen popularized the concept of catastrophic interference. They trained a neural network to learn addition, treating two groups of examples as two separate tasks. In their case, learning the second task rapidly degraded the knowledge acquired on the first. This could be a symptom of a fundamental problem: addition is an algorithmic task that should not be learned through pattern recognition. We propose a neural network with a different architecture that can be trained to recover the correct algorithm for the addition of binary numbers. We test it both in the setting proposed by McCloskey and Cohen and by training on random examples one at a time. Not only does the network avoid catastrophic forgetting, its predictive power on unseen pairs of numbers also improves as training progresses. This work highlights how strongly neural network architecture influences the emergence of catastrophic forgetting, and it introduces a neural network that is able to learn an algorithm.
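For reference, the target behavior here is binary addition. The sketch below is the textbook ripple-carry procedure written in plain Python; it is not the authors' network or training setup, which the abstract does not describe. The function names (`add_binary`, `to_bits`, `from_bits`) and the little-endian bit-list representation are assumptions chosen for illustration.

```python
def to_bits(n, width):
    """Encode a non-negative integer as a little-endian list of bits (illustrative helper)."""
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits):
    """Decode a little-endian list of bits back into an integer (illustrative helper)."""
    return sum(bit << i for i, bit in enumerate(bits))


def add_binary(a_bits, b_bits):
    """Add two little-endian bit lists with the ripple-carry algorithm."""
    # Pad the shorter operand with zeros so both have the same length.
    n = max(len(a_bits), len(b_bits))
    a = a_bits + [0] * (n - len(a_bits))
    b = b_bits + [0] * (n - len(b_bits))

    result = []
    carry = 0
    for x, y in zip(a, b):
        total = x + y + carry      # value in 0..3
        result.append(total % 2)   # sum bit for this position
        carry = total // 2         # carry into the next position
    result.append(carry)           # final carry becomes the top bit
    return result
```

The same three-input rule (two bits plus a carry) is applied at every position, so a procedure like this works for operands of any length. That property is what separates an algorithm from a memorized table of input-output pairs.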
