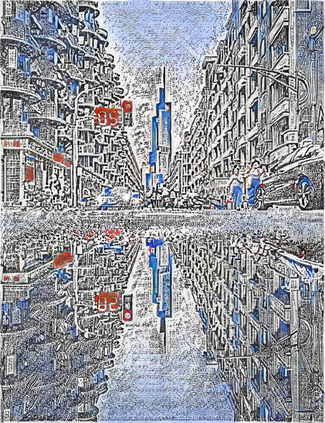

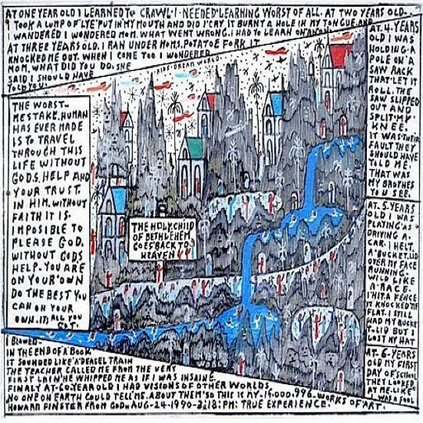

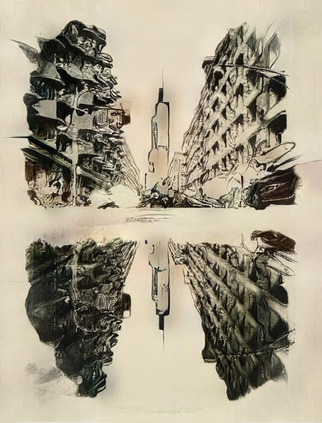

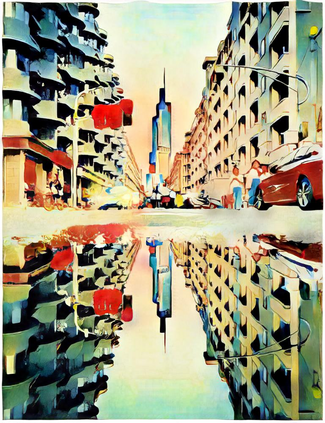

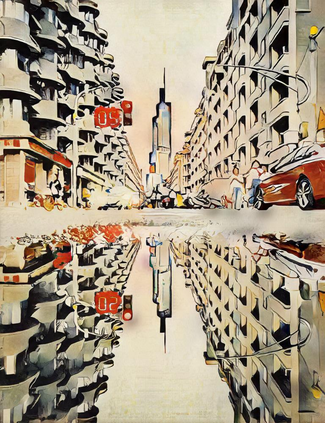

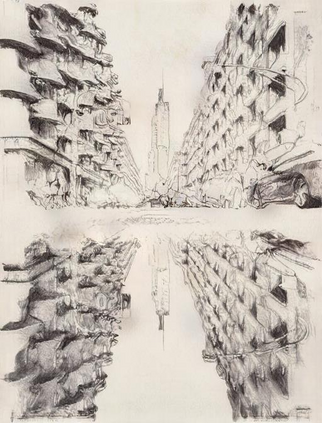

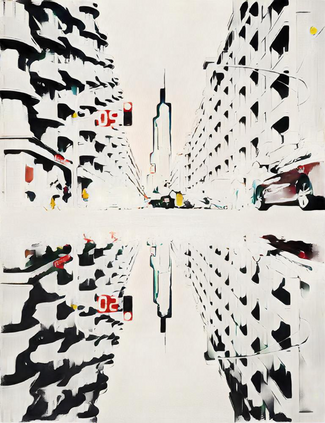

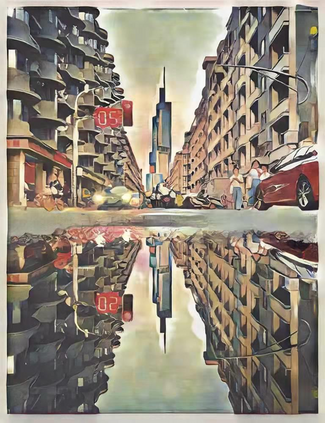

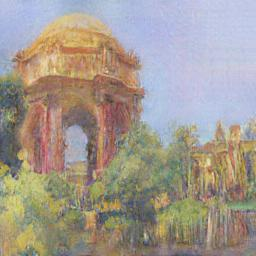

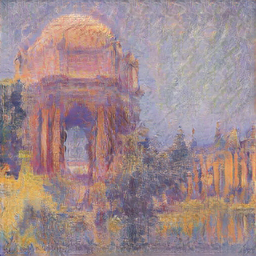

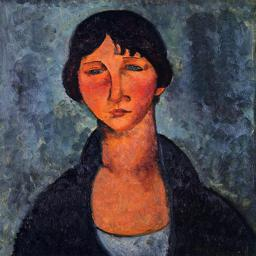

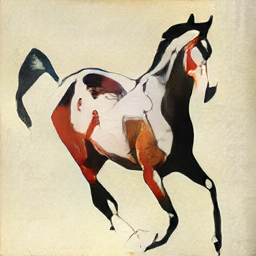

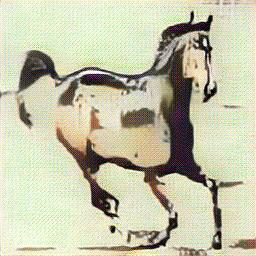

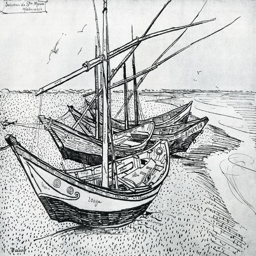

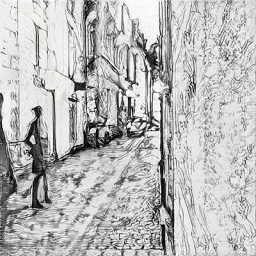

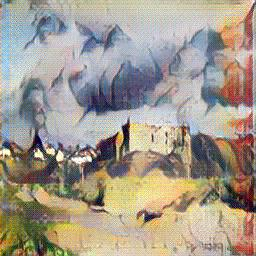

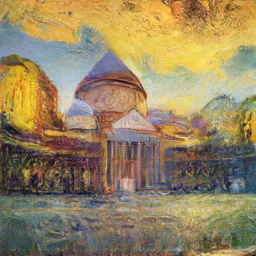

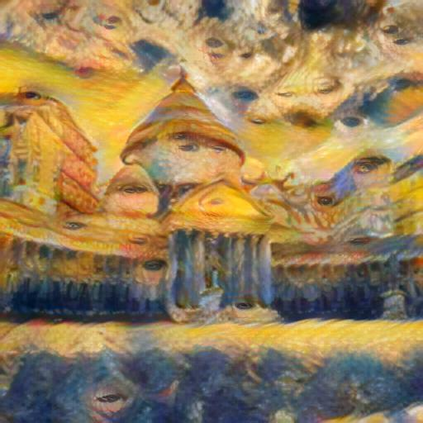

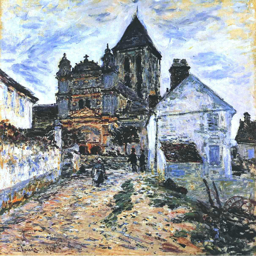

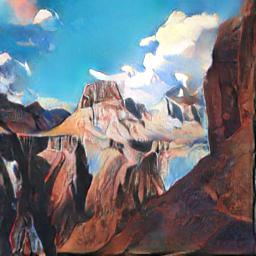

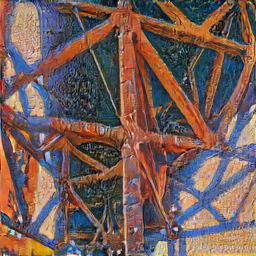

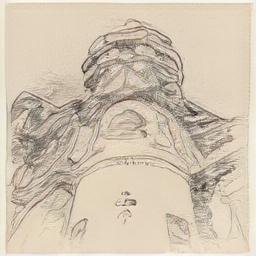

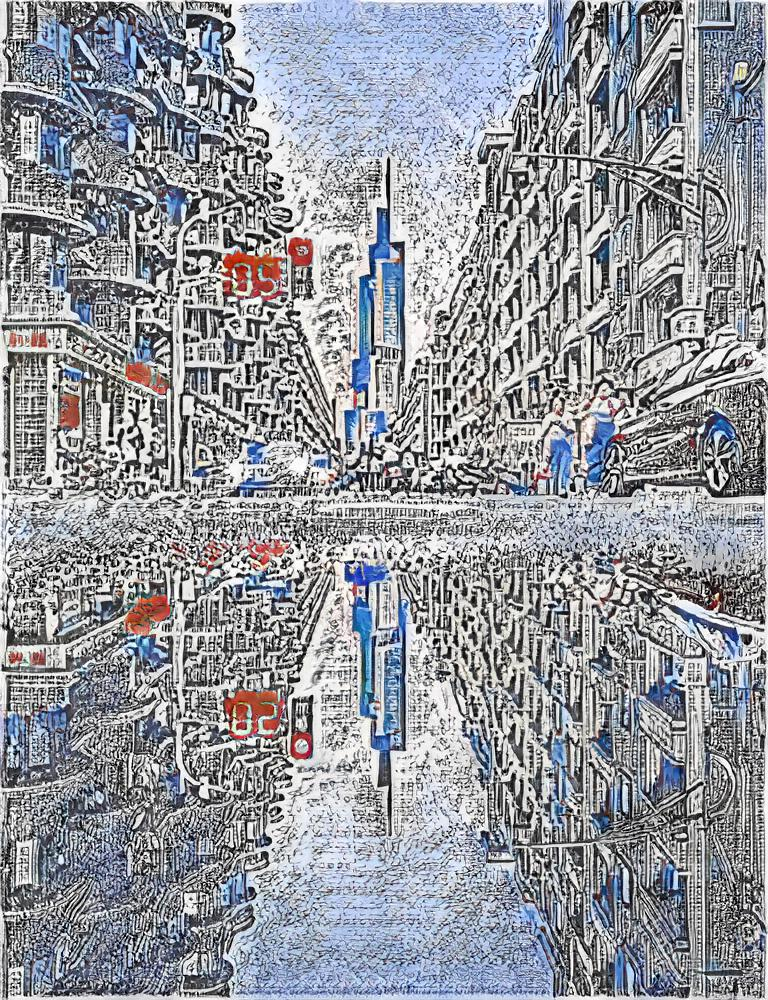

In this work, we tackle the challenging problem of arbitrary image style transfer using a novel style feature representation learning method. A suitable style representation, as a key component in image stylization tasks, is essential to achieving satisfactory results. Existing deep neural network based approaches achieve reasonable results with guidance from second-order statistics, such as the Gram matrix of content features. However, they do not leverage sufficient style information, which results in artifacts such as local distortions and style inconsistency. To address these issues, we propose to learn the style representation directly from image features instead of from their second-order statistics, by analyzing the similarities and differences between multiple styles and considering the style distribution. Specifically, we present Contrastive Arbitrary Style Transfer (CAST), a new style representation learning and style transfer method based on contrastive learning. Our framework consists of three key components: a multi-layer style projector for style code encoding, a domain enhancement module for effective learning of the style distribution, and a generative network for image style transfer. Comprehensive qualitative and quantitative evaluations demonstrate that our approach achieves significantly better results than state-of-the-art methods. Code and models are available at https://github.com/zyxElsa/CAST_pytorch
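
For reference, the second-order statistic mentioned above is typically the Gram matrix of a layer's feature maps, as in the Gram-based style loss of Gatys et al. The following is a minimal, dependency-free sketch, not code from the CAST repository. It assumes a feature map flattened to C channels by N spatial positions (a plain list of lists) and normalizes by N; normalization conventions differ between implementations.

```python
from typing import List

# A feature map stored as C rows (channels) of N values (spatial positions).
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Return the C x C Gram matrix of a C x N feature map.

    Entry (i, j) is the inner product of channels i and j over all N
    spatial positions, divided by N. It records which channels co-activate
    but not where, i.e. a second-order (correlation) statistic.
    """
    num_channels = len(features)
    num_positions = len(features[0])
    return [
        [
            sum(features[i][k] * features[j][k] for k in range(num_positions))
            / num_positions
            for j in range(num_channels)
        ]
        for i in range(num_channels)
    ]


def gram_style_loss(generated: Matrix, style: Matrix) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    g_gen = gram_matrix(generated)
    g_sty = gram_matrix(style)
    c = len(g_gen)
    return sum(
        (g_gen[i][j] - g_sty[i][j]) ** 2 for i in range(c) for j in range(c)
    ) / (c * c)


fmap = [[1.0, 2.0], [3.0, 4.0]]      # 2 channels, 2 positions
shuffled = [[2.0, 1.0], [4.0, 3.0]]  # same values, positions swapped
print(gram_matrix(fmap))                # [[2.5, 5.5], [5.5, 12.5]]
print(gram_style_loss(fmap, shuffled))  # 0.0
```

The permutation check shows that the Gram matrix summarizes channel correlations while discarding the spatial arrangement of the features.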

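The contrastive idea can be illustrated with a generic InfoNCE objective over style codes: the code of a stylized image is pulled toward the code of its reference style and pushed away from the codes of other styles. This is a minimal sketch under stated assumptions, not CAST's implementation. The style codes are plain vectors standing in for the output of the multi-layer style projector, similarity is cosine similarity, and the temperature of 0.07 is an arbitrary illustrative value. The exact loss, choice of negatives, and hyperparameters used by CAST are in the linked repository.

```python
import math
from typing import List, Sequence

# Assumption: a "style code" is a plain vector standing in for the output
# of CAST's multi-layer style projector; no real network is involved here.
Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce_style_loss(
    query: Vector,
    positive: Vector,
    negatives: List[Vector],
    temperature: float = 0.07,  # illustrative value, not taken from CAST
) -> float:
    """Generic InfoNCE loss for a single style code.

    Computes -log(exp(s_pos / t) / sum_k exp(s_k / t)), where s_pos is the
    similarity between the query and its positive, and the sum runs over
    the positive and all negatives.
    """
    logits = [cosine_similarity(query, positive) / temperature]
    logits += [cosine_similarity(query, neg) / temperature for neg in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    peak = max(logits)
    log_denominator = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_denominator - logits[0]


stylized = [1.0, 0.0]
matching_style = [0.9, 0.1]
other_styles = [[0.0, 1.0], [-1.0, 0.0]]
# Query close to its positive: loss near zero.
close = info_nce_style_loss(stylized, matching_style, other_styles)
# Query close to a negative instead: loss much larger.
far = info_nce_style_loss(stylized, other_styles[0], [matching_style, other_styles[1]])
print(close < far)  # True
```

A lower loss means the stylized image's code sits closer to its reference style than to the negative styles. In training, such a loss would be averaged over a batch and backpropagated through the networks that produce the codes, which this dependency-free sketch does not attempt.
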