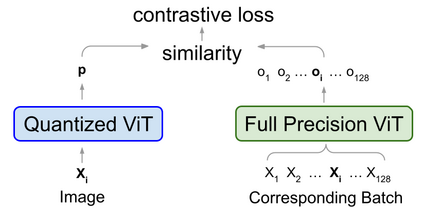

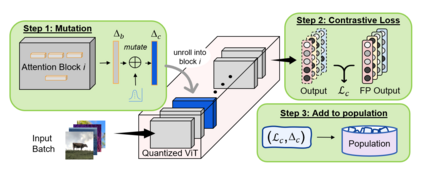

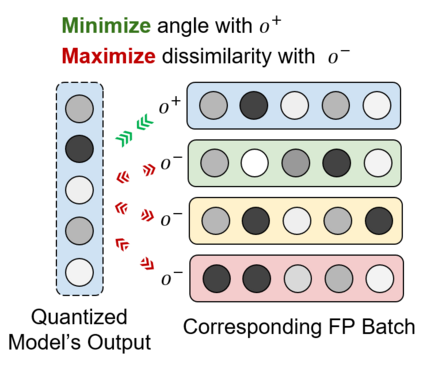

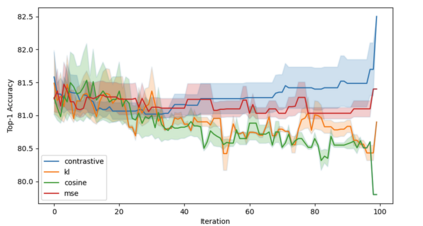

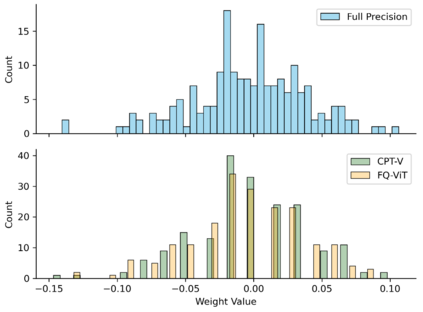

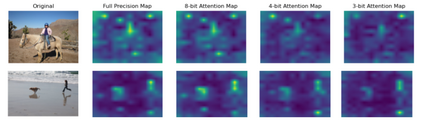

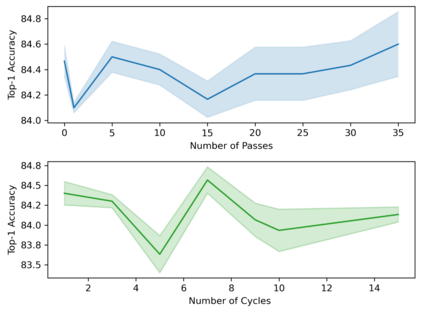

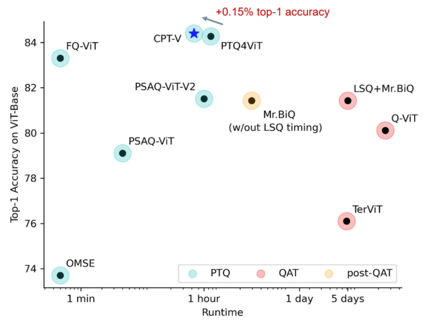

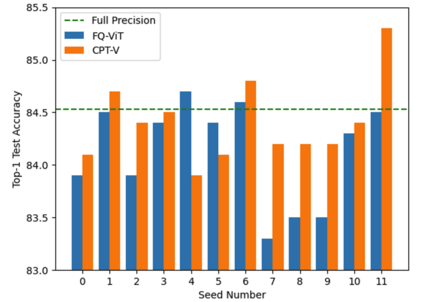

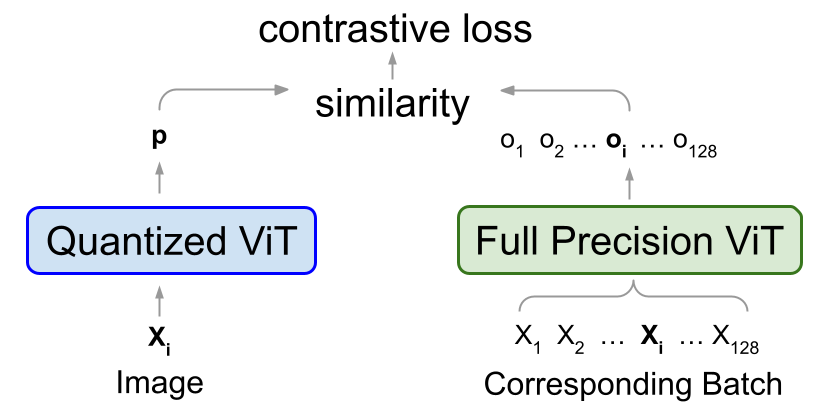

Prior work on post-training quantization has typically focused on developing a mixed-precision scheme or learning the best way to partition a network for quantization. In our work, CPT-V, we look at a general way to improve the accuracy of networks that have already been quantized, simply by perturbing the quantization scales. Borrowing the idea of contrastive loss from self-supervised learning, we find a robust way to jointly minimize a loss function using just 1,000 calibration images. To determine the best-performing quantization scale, CPT-V contrasts the features of the quantized and full-precision models in a self-supervised fashion. Unlike traditional reconstruction-based loss functions, a contrastive loss function not only rewards similarity between the quantized and full-precision outputs but also helps distinguish the quantized output from the other outputs within a given batch. In addition, in contrast to prior works, CPT-V proposes a block-wise evolutionary search to minimize a global contrastive loss objective, which allows it to improve the accuracy of existing vision transformer (ViT) quantization schemes. For example, CPT-V improves the top-1 accuracy of a fully quantized ViT-Base by 10.30%, 0.78%, and 0.15% for 3-bit, 4-bit, and 8-bit weight quantization levels, respectively. Extensive experiments on a variety of other ViT architectures further demonstrate its robustness in extreme quantization scenarios. Our code is available at <link>.
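The idea of perturbing a quantization scale and keeping the perturbation only when a contrastive loss improves can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not taken from the CPT-V code: a single linear layer stands in for a ViT block, the loss is a common InfoNCE-style formulation, the search is a simple elitist evolutionary loop, and all function names and hyperparameters (`quantize`, `info_nce`, `evolve_scale`, `sigma`, `population`) are hypothetical.

```python
import numpy as np


def quantize(w, scale, bits):
    """Uniform symmetric fake-quantization: snap to an integer grid, then rescale."""
    qmax = 2 ** (bits - 1) - 1
    return np.clip(np.round(w / scale), -qmax - 1, qmax) * scale


def info_nce(q_feats, fp_feats, temperature=0.1):
    """InfoNCE-style loss (assumed form): row i of q_feats should match row i
    of fp_feats and be distinguishable from every other row in the batch."""
    q = q_feats / (np.linalg.norm(q_feats, axis=1, keepdims=True) + 1e-12)
    f = fp_feats / (np.linalg.norm(fp_feats, axis=1, keepdims=True) + 1e-12)
    logits = q @ f.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))


def evolve_scale(w, x, bits=3, generations=20, population=8, sigma=0.05, seed=0):
    """Toy elitist evolutionary search over one scale (not the paper's exact search).

    Each generation samples multiplicative perturbations of the current best
    scale and keeps a candidate only if it lowers the contrastive loss.
    """
    rng = np.random.default_rng(seed)
    fp_feats = x @ w                              # full-precision features
    qmax = 2 ** (bits - 1) - 1
    best_scale = float(np.abs(w).max() / qmax)    # min-max starting point
    init_loss = info_nce(x @ quantize(w, best_scale, bits), fp_feats)
    best_loss = init_loss
    for _ in range(generations):
        noise = rng.standard_normal(population)
        for cand in best_scale * (1.0 + sigma * noise):
            if cand <= 0:
                continue                          # a scale must stay positive
            loss = info_nce(x @ quantize(w, cand, bits), fp_feats)
            if loss < best_loss:
                best_scale, best_loss = float(cand), loss
    return best_scale, init_loss, best_loss


# Synthetic stand-ins for a weight matrix and a batch of calibration inputs.
rng = np.random.default_rng(42)
weights = rng.standard_normal((16, 16))
calib = rng.standard_normal((8, 16))
scale, loss_before, loss_after = evolve_scale(weights, calib, bits=3)
print(f"scale={scale:.4f}  loss before={loss_before:.4f}  after={loss_after:.4f}")
```

Because a candidate is accepted only when it lowers the loss, the loss after the search is never worse than the starting point. In a block-wise setting, the same loop would presumably be repeated over the scales of each block while the loss is still measured on the final features of the whole network.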
