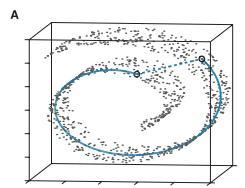

Deep neural networks are susceptible to generating overconfident yet erroneous predictions when presented with data beyond known concepts. This challenge underscores the importance of detecting out-of-distribution (OOD) samples in the open world. In this work, we propose a novel feature-space OOD detection score that jointly reasons with both class-specific and class-agnostic information. Specifically, our approach uses Whitened Linear Discriminant Analysis to project features into two subspaces, the discriminative and the residual subspace, in which the in-distribution (ID) classes are maximally separated and closely clustered, respectively. The OOD score is then determined by combining the deviation of the input from the ID distribution in both subspaces. The efficacy of our method, named WDiscOOD, is verified on the large-scale ImageNet-1k benchmark, with six OOD datasets that cover a variety of distribution shifts. WDiscOOD demonstrates superior performance on deep classifiers with diverse backbone architectures, including CNNs and vision transformers. We also show that our method can more effectively detect novel concepts in representation spaces trained with contrastive objectives, including supervised contrastive loss and multi-modality contrastive loss.
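
The abstract describes the pipeline only in words, so the following NumPy sketch is one possible reading of it, not the authors' implementation. It whitens features with the within-class covariance, takes the leading eigenvectors of the between-class scatter as the discriminative subspace, and treats the remaining directions as the residual subspace. The function names `fit_wlda` and `ood_score`, the use of Euclidean distances, the ridge term, and the weight `alpha` are all assumptions made for illustration.

```python
import numpy as np


def fit_wlda(feats, labels, n_disc, ridge=1e-6):
    """Fit a whitened-LDA split of feature space (illustrative sketch only)."""
    classes, inv = np.unique(labels, return_inverse=True)
    mu = feats.mean(axis=0)
    class_means = np.stack([feats[inv == k].mean(axis=0) for k in range(len(classes))])

    # Within-class covariance: scatter of features around their own class mean.
    centered = feats - class_means[inv]
    s_w = centered.T @ centered / len(feats)

    # Whitening matrix S_w^{-1/2}; the ridge (an assumption) keeps it invertible.
    evals, evecs = np.linalg.eigh(s_w + ridge * np.eye(s_w.shape[0]))
    whiten = evecs @ np.diag(evals ** -0.5) @ evecs.T

    # Between-class scatter of the whitened class means, weighted by class size.
    white_means = (class_means - mu) @ whiten
    counts = np.bincount(inv)
    s_b = (white_means * counts[:, None]).T @ white_means / len(feats)

    # Leading eigenvectors separate the classes; the rest form the residual subspace.
    b_evals, b_evecs = np.linalg.eigh(s_b)
    basis = b_evecs[:, np.argsort(b_evals)[::-1]]
    disc, resid = basis[:, :n_disc], basis[:, n_disc:]

    white_feats = (feats - mu) @ whiten
    return {
        "mu": mu,
        "whiten": whiten,
        "disc": disc,
        "resid": resid,
        "disc_centroids": white_means @ disc,  # one centroid per ID class
        "resid_centroid": (white_feats @ resid).mean(axis=0),  # single ID centroid
    }


def ood_score(x, model, alpha=1.0):
    """Higher score = more likely OOD. `alpha` is a hypothetical mixing weight."""
    z = (np.atleast_2d(x) - model["mu"]) @ model["whiten"]
    z_disc = z @ model["disc"]
    z_res = z @ model["resid"]
    # Class-specific term: distance to the nearest class centroid.
    diffs = z_disc[:, None, :] - model["disc_centroids"][None, :, :]
    d_disc = np.linalg.norm(diffs, axis=2).min(axis=1)
    # Class-agnostic term: distance to the overall ID centroid in the residual subspace.
    d_res = np.linalg.norm(z_res - model["resid_centroid"], axis=1)
    return d_disc + alpha * d_res


if __name__ == "__main__":
    # Synthetic stand-in for classifier features: 3 ID classes in 8 dimensions.
    rng = np.random.default_rng(0)
    means = rng.normal(scale=5.0, size=(3, 8))
    y = np.repeat(np.arange(3), 100)
    X = means[y] + rng.normal(size=(300, 8))
    model = fit_wlda(X, y, n_disc=2)
    print("mean ID score :", ood_score(X, model).mean())
    print("mean OOD score:", ood_score(50.0 * rng.normal(size=(20, 8)), model).mean())
```

In this sketch the discriminative term carries the class-specific information (how far a sample is from any known class), while the residual term carries the class-agnostic information (how far it is from the region where all ID features concentrate). In practice the features would come from the penultimate layer of a trained classifier rather than from synthetic Gaussians.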
