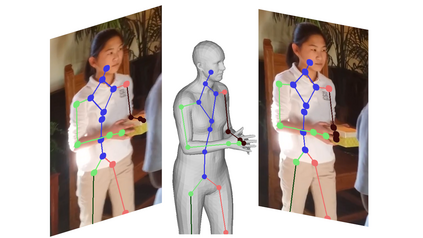

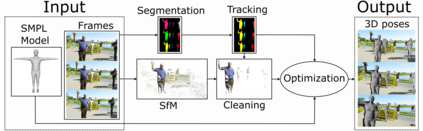

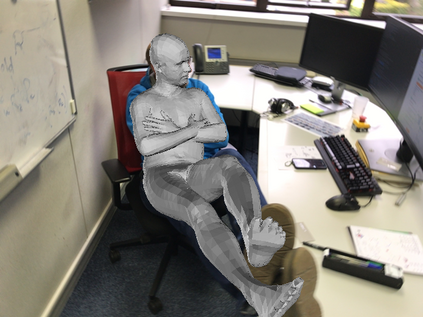

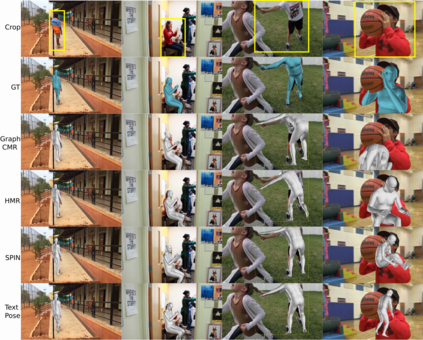

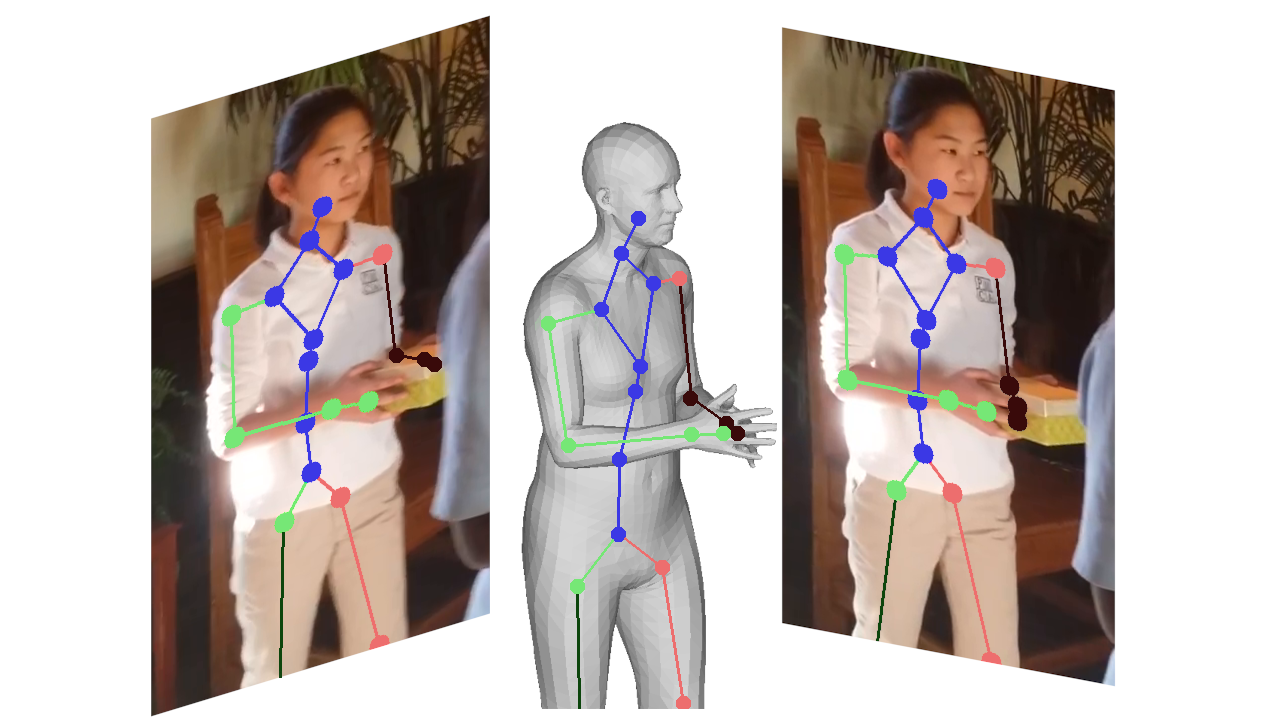

Predicting 3D human pose from images has improved greatly in recent years. Novel approaches can even predict both pose and shape from a single input image, often relying on a parametric model of the human body such as SMPL. While qualitative results for such methods are often shown on images captured in the wild, a proper benchmark in such conditions is still missing, because obtaining ground-truth 3D poses outside a motion capture room is cumbersome. This paper presents a pipeline to easily produce and validate such a dataset with accurate ground truth, with which we benchmark recent 3D human pose estimation methods in the wild. We use the recently introduced Mannequin Challenge dataset, which contains in-the-wild videos of people frozen in action like statues, and leverage the fact that the people are static while the camera moves to accurately fit the SMPL model to the sequences. A total of 24,428 frames with registered body models are then selected from 567 scenes at almost no cost, using only online RGB videos. We benchmark state-of-the-art SMPL-based human pose estimation methods on this dataset. Our results highlight that challenges remain, in particular for difficult poses and for scenes where people are partially truncated or occluded.
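The geometric intuition behind a static person filmed by a moving camera can be illustrated with a minimal sketch. It is not the paper's SMPL fitting pipeline: it only shows that when a body joint does not move, every video frame provides another viewing ray towards the same 3D point, so the point can be recovered by least squares. The function names, and the assumption that camera centers and ray directions are already known (for example from structure-from-motion), are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def solve_3x3(a: List[List[float]], b: List[float]) -> Vec3:
    """Solve the 3x3 system a @ x = b by Gauss-Jordan elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("rays are (nearly) parallel; the point is unconstrained")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return (m[0][3] / m[0][0], m[1][3] / m[1][1], m[2][3] / m[2][2])


def triangulate_static_point(
    centers: Sequence[Vec3], directions: Sequence[Vec3]
) -> Vec3:
    """Return the 3D point closest (in least squares) to all viewing rays.

    Ray i starts at camera center c_i and points along d_i (any non-zero length).
    With u_i = d_i / |d_i| and P_i = I - u_i u_i^T, the minimiser of
    sum_i |P_i (x - c_i)|^2 solves (sum_i P_i) x = sum_i P_i c_i.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(centers, directions):
        norm = sum(v * v for v in d) ** 0.5
        u = [v / norm for v in d]
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - u[i] * u[j]  # entry of P_i
                a[i][j] += p
                b[i] += p * c[j]
    return solve_3x3(a, b)
```

Calling `triangulate_static_point` with at least two non-parallel rays returns the estimated joint position; a single frame leaves the depth along the ray undetermined and raises `ValueError`, which mirrors why the camera motion matters for obtaining accurate 3D ground truth.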
