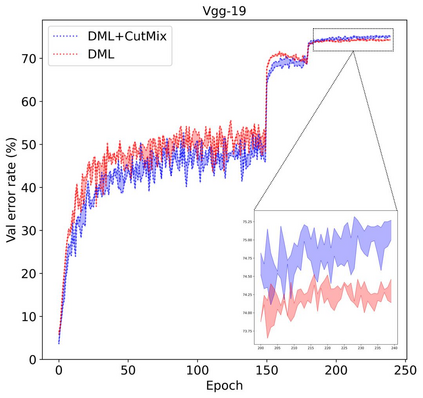

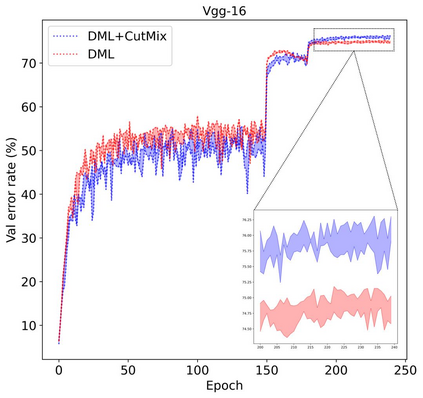

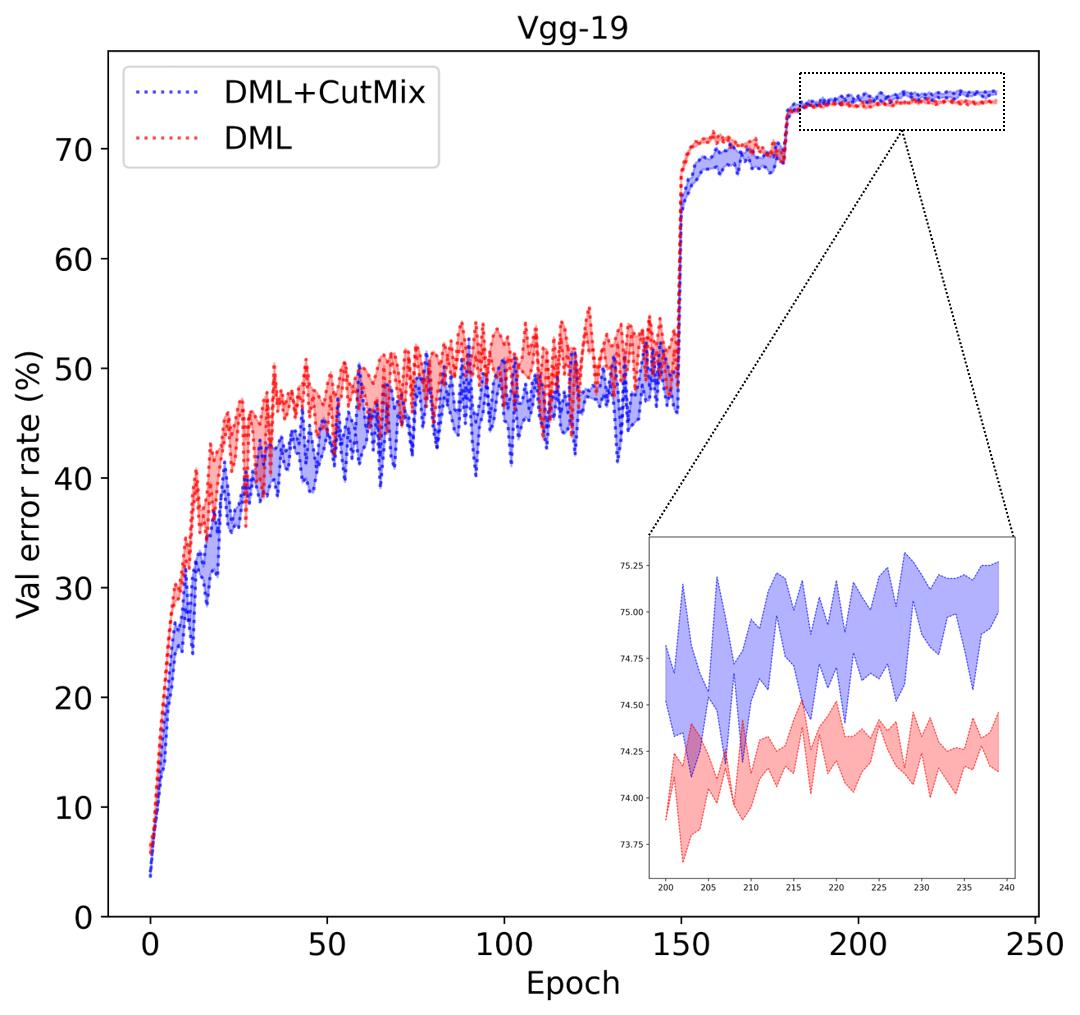

Mixed Sample Regularization (MSR), such as MixUp or CutMix, is a powerful data augmentation strategy for improving the generalization of convolutional neural networks. Previous empirical analyses have shown that the performance gains from MSR and from conventional offline Knowledge Distillation (KD) are orthogonal: student networks improve further when MSR is applied during the training stage of sequential distillation. Yet the interplay between MSR and online knowledge distillation, in which an ensemble of peer students learn from each other, remains unexplored. To bridge this gap, we make the first attempt at incorporating CutMix into online distillation, and we empirically observe a significant improvement. Encouraged by this result, we propose an even stronger MSR designed specifically for online distillation, named CutⁿMix. Furthermore, we design a novel online distillation framework on top of CutⁿMix that enhances distillation with feature-level mutual learning and a self-ensemble teacher. Comprehensive evaluations on CIFAR10 and CIFAR100 with six network architectures show that our approach consistently outperforms state-of-the-art distillation methods.
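For readers unfamiliar with MSR, the following is a minimal sketch of the standard CutMix operation as it is commonly described, not of CutⁿMix, whose details the abstract does not give. It pastes a random rectangle from one image into another and mixes the one-hot labels in proportion to the pasted area. The function name `cutmix`, the single-channel nested-list image layout, and the Beta(1, 1) sampling are assumptions made for illustration; a real training pipeline would typically operate on batched tensors.

```python
import random


def cutmix(img_a, img_b, label_a, label_b, num_classes, rng=random):
    """Paste a random rectangle of img_b into img_a and mix the labels.

    Images are H x W nested lists (single channel, for brevity).
    Returns (mixed_image, soft_label, lam), where lam is the fraction
    of the mixed image that still comes from img_a.
    """
    h, w = len(img_a), len(img_a[0])

    # Assumption: mixing ratio drawn from Beta(1, 1), i.e. uniform on [0, 1].
    lam = rng.betavariate(1.0, 1.0)

    # Box side lengths chosen so the box covers roughly (1 - lam) of the image.
    cut_h = int(h * (1.0 - lam) ** 0.5)
    cut_w = int(w * (1.0 - lam) ** 0.5)

    # Random box centre; the box is clipped at the image borders.
    cy, cx = rng.randrange(h), rng.randrange(w)
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)

    # Copy img_a so the input is not modified, then paste the patch from img_b.
    mixed = [row[:] for row in img_a]
    for y in range(top, bottom):
        mixed[y][left:right] = img_b[y][left:right]

    # Recompute lam from the area actually pasted, since clipping may shrink the box.
    lam = 1.0 - (bottom - top) * (right - left) / (h * w)

    # Soft label: weight lam on label_a and (1 - lam) on label_b.
    target = [0.0] * num_classes
    target[label_a] += lam
    target[label_b] += 1.0 - lam
    return mixed, target, lam
```

MixUp differs only in the image step: instead of pasting a patch, it blends the two images pixel by pixel with weights `lam` and `1 - lam`, and mixes the labels the same way.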
